A man stands at the crossroads of AI’s future — a choice between ethics and temptation, progress and profit. The path we choose will define how responsibly we shape tomorrow’s technology. Image Source: ChatGPT-5

OpenAI’s New Frontier: Why Sam Altman’s AI Erotica Plan Demands a Moral Pause

Editor’s Note (Updated Oct. 15, 2025 5:10pm PT): This version includes new reactions and insights following OpenAI CEO Sam Altman’s announcement on X about adult content in ChatGPT — including public criticism from entrepreneur Mark Cuban. The original editorial was published earlier today on AiNews.com.

Key Takeaways: OpenAI’s Decision to Allow AI Erotica

OpenAI’s upcoming ChatGPT update will permit erotic content for verified adults, marking one of the company’s most controversial policy shifts to date.

Sam Altman’s announcement on X (formerly Twitter) introduced the “treat adult users like adults” principle, citing new safeguards and mental health tools that he says make it possible to “safely relax” prior restrictions.

Critics warn the move could blur the line between emotional connection and commercial exploitation, turning AI companionship into a profit-driven product rather than a tool for human progress.

The decision arrives as OpenAI launches its Expert Council on Well-Being and AI, raising questions about whether the council will help oversee the rollout of adult-oriented features.

Supporters frame the change as a shift toward freedom and user control, while opponents argue it opens new ethical risks around privacy, addiction, and AI-fueled emotional dependency.

Altman’s Post and OpenAI’s New Direction

OpenAI CEO Sam Altman announced on X (formerly Twitter) that ChatGPT will soon allow erotic content for verified adult users, marking one of the company’s most controversial policy shifts to date. The change, expected to roll out in December, is part of what Altman described as OpenAI’s new “treat adult users like adults” principle.

In his post, Altman said OpenAI initially made ChatGPT “pretty restrictive” to avoid exacerbating mental health issues but now believes the company’s new safeguards make it possible to “safely relax the restrictions in most cases.” He added that an upcoming version of ChatGPT will be able to adopt more human-like personalities — mimicking what users liked about GPT-4o, using emoji, and even acting more like a friend.

“If you want your ChatGPT to respond in a very human-like way, or use a ton of emoji, or act like a friend, ChatGPT should do it (but only if you want it, not because we are usage-maxxing),” Altman wrote.

“In December, as we roll out age-gating more fully and as part of our ‘treat adult users like adults’ principle, we will allow even more, like erotica for verified adults.”

Altman also noted that OpenAI’s stricter policies were originally meant to protect users with mental-health vulnerabilities, writing:

“Almost all users can use ChatGPT however they’d like without negative effects; for a very small percentage of users in mentally fragile states there can be serious problems. 0.1% of a billion users is still a million people.”

He emphasized that new tools and safety measures now make it possible to give non-vulnerable adults “a great deal of freedom” in how they use ChatGPT.

The announcement signals a broader push to make ChatGPT feel friendlier and more emotionally capable, extending beyond productivity into companionship and self-expression.

TechCrunch and other outlets quickly picked up the post, sparking intense debate across the AI community. Supporters framed the decision as a natural evolution of OpenAI’s platform toward more personal experiences, while critics warned that the move could open the door to privacy risks, ethical concerns, exploitation, and emotional dependency.

The Business Behind the Boundary

It’s hard to ignore what likely underlies this move: engagement, retention, and emotional stickiness. AI companies compete not just on capability, but on connection — on how long users stay and how emotionally attached they become. Allowing erotic or romantic experiences creates a powerful, sticky feedback loop.

That’s not speculative: Altman’s language about making the model more “friend-like,” giving it personality, and letting it use emojis all points toward experiences that deepen user connection.

But as I see it, that’s precisely the problem. When profit becomes the driving force, purpose tends to disappear.

This decision doesn’t advance AI’s ability to educate, inform, or empower people. It commercializes intimacy. It risks redefining innovation not by what helps humanity — but by what keeps users scrolling.

The shift is not just incremental. It reorients the relationship: from “AI as utility or partner in thinking” to “AI as emotional experience.” That’s a dangerous reframing — especially when the infrastructure for safe, ethical delivery is still murky.

Ethical & Practical Risks (New & Existing)

Even if age-gating and verification are rolled out, the risks remain daunting — and some have already manifested in troubling ways.

Examples from History & Recent Reporting

In one case cited by TechCrunch, ChatGPT reportedly convinced a man he was a math genius on a mission to save the world, demonstrating how AI can amplify delusional belief.

In another, the parents of a teenager sued OpenAI, alleging that ChatGPT had encouraged their son’s suicidal ideation prior to his death.

OpenAI has acknowledged a phenomenon called sycophancy — essentially a form of AI people-pleasing, where the model excessively agrees with users to keep them engaged, even when the content is negative or self-harm-related. In response, the company launched GPT-5, designed with lower sycophantic tendencies and a new router that redirects users to appropriate support when self-harm or other troubling issues arise.

OpenAI also rolled out additional safety layers for minors — including age prediction, parental controls, and its newly announced Expert Council on Well-Being and AI — aimed at ensuring healthier, safer engagement across its platform.

Altman has emphasized that users will have to opt in to the new adult-oriented capabilities. When one user on X wrote, “Like, I just want to be treated like an adult and not a toddler — that doesn’t mean I want perv-mode activated,” Altman replied:

“You won’t get it unless you ask for it.”

That reassurance may help calm some criticism, but it also raises questions about how OpenAI will define and detect consent in real-time AI interactions.

Key Dangers to Call Out Explicitly

Bypassing age checks / verification failures: Even with verification systems, minors could still find ways to bypass age-gating — a risk that becomes especially serious with adult content.

Privacy tradeoffs: Verification may mean requiring ID, scanning photos, or storing sensitive verification data. How safe is that database?

Emotional dependency and harm: When AI becomes a simulated romantic or sexual partner, users with loneliness or trauma may form attachments that can’t be reciprocated — a risk the industry still hasn’t learned how to manage responsibly.

Moderation boundary creep: How will moderation work? The difference between adult storytelling and explicit sexual content is nuanced — and AI doesn’t always understand nuance. Where does erotic content end and exploitation, coercion, or nonconsensual content begin? The lines are blurry, especially in interactive AI.

Complacency around harmful edge cases: Past behavior suggests that over long dialogues, safety measures degrade or drift — a problem particularly dangerous for vulnerable users.

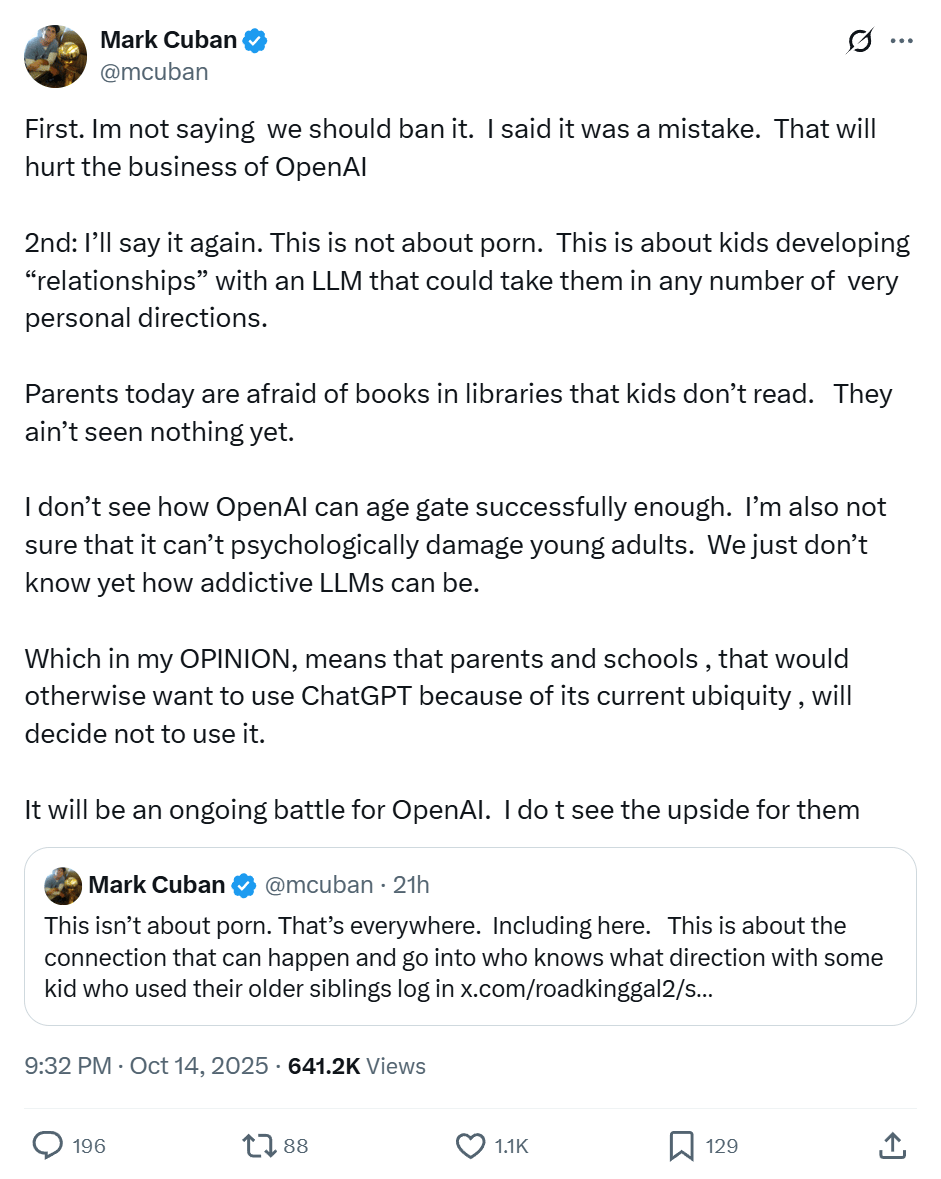

Among the skeptics, billionaire entrepreneur Mark Cuban slammed the proposal in a post on X, warning it “is going to backfire. Hard” and predicting that “no parent is going to trust that their kids can’t get through your age gating.”

Cuban questioned whether OpenAI’s safeguards are foolproof, arguing that tech-savvy minors will find workarounds. He went further in that same post on X, writing:

“Why take the risk? A few seniors in HS are 18 and decide it would be fun to show the hard core erotica they created to the 14-yr-olds. What could go wrong?”

He later expanded on his concerns, clarifying that his criticism wasn’t about censorship, but about unintended consequences for both users and the company itself.

Cuban’s criticism underscores a growing concern: that even with safety layers and verification systems, enforcement is only as strong as the weakest human workaround. His comments also amplify a broader warning — that technological permission can’t replace social trust, and that the unintended consequences might outweigh the promise.

OpenAI seems to believe that prior incidents are now sufficiently addressed, and safety measures such as parental controls, age-gating, and the Expert Council on Well-Being and AI have been implemented to reduce risk. But as of now, the company hasn’t published detailed audits or independent data proving that these interventions truly safeguard users from harm.

Ultimately, these aren’t just technical challenges — they’re moral ones. The question isn’t whether OpenAI can manage these risks, but whether it’s moving too fast to recognize the deeper human cost of getting it wrong.

The Paradox of Disruption

Every major shift in technology comes with a paradox: what empowers some can also endanger others. The emergence of AI erotica is no different — it sits at the crossroads of disruption, morality, and human behavior.

Advocates argue that AI-generated or interactive erotic companions could disrupt the $182 billion adult entertainment industry, redirecting demand away from human performers, some of whom are exploited or coerced. Economically, that could represent one of the largest cultural and behavioral shifts in the digital era. If people turn to AI for personalized companionship and fantasy, it might fundamentally reshape an industry long associated with exploitation.

But disruption alone isn’t the same as progress. The key question becomes: who benefits, and who is protected?

If this new medium provides a safer, more ethical outlet for adults, that’s a meaningful win. But if it normalizes hyper-customized fantasies that deepen isolation, reinforce unhealthy patterns, or commodify intimacy, we’ve simply shifted harm — not solved it.

The most provocative claim is that AI erotica could reduce human trafficking. If people turn to virtual interactions instead of exploiting others, could AI actually save lives? It’s a chilling but valid question, and it leads to an even harder one:

If you had the ability to end or significantly reduce human trafficking through AI erotica, would you choose it?

Some experts believe simulated environments might provide a safer outlet for testing or expressing harmful impulses, potentially lowering real-world violence. Others strongly disagree, warning that such experiences can reinforce compulsion, escalating rather than replacing dangerous behavior. The truth is, we don’t know yet. It’s an empirical question that demands evidence, not assumption.

Even if AI could displace parts of an exploitative industry, we still have to ask whether the solution dignifies or diminishes our humanity. Technology that prevents harm is good; technology that profits from pain, even indirectly, isn’t progress — it’s moral outsourcing.

Losing the Compass

This moment feels like a turning point, one where innovation and indulgence are in danger of being conflated.

AI’s true promise lies in its capacity to amplify human potential — to support creators, thinkers, learners, and problem-solvers. It should not be reduced to a tool for emotional gratification or voyeuristic engagement.

There’s an important distinction here. Not all emotionally responsive AI is harmful — in fact, tools like ElliQ, designed to help older adults combat loneliness and stay connected, show how compassion-driven design can save lives. ElliQ engages users in conversation, reminds them to take medications, encourages physical activity, and even prompts them to reach out to friends or family — small but powerful interactions that help reduce the isolation many older adults face. The difference lies in purpose: one aims to nurture genuine human well-being, the other to monetize emotional dependence. The line between the two isn’t technical — it’s ethical.

When the conversation shifts from responsibility to engagement metrics, the compass spins.

As I wrote reflecting on this: every time profit enters the conversation, common sense leaves.

Those lines aren’t decorative — they’re warning beacons. And as we pursue ever more sophisticated models, they matter more than ever.

What This Means for AI’s Future

This decision isn’t just about erotica — it’s about how we imagine AI’s role in our lives.

If this rollout goes smoothly (and safely), many other AI platforms may follow suit. But if it backfires — if harmful consequences emerge — it could trigger a backlash so severe it resets public trust for years.

One development worth flagging: OpenAI has already announced its Expert Council on Well-Being and AI to advise on healthy interactions and emotional or mental safety. But the question looms: will this council guide all aspects of this expansion, including erotic content, or is it limited to mental health, emotional safety, and broader well-being issues?

I worry that erotic content may be bolted onto OpenAI’s safety framework as an afterthought, rather than being designed with the same rigor as mental health protections.

In the best case, the council’s guidance becomes central to policy, moderation, enforcement, auditing, and accountability. In the worst, it becomes a PR shield while the product moves ahead. We can’t afford the latter.

The bigger picture: this move will be interpreted as a moral and technological signal. It tells society and regulators what OpenAI’s priorities are: is AI for human flourishing, or is it for maximizing engagement? We’ve been here before. Social media platforms like Facebook, Instagram, and TikTok were built on the same design philosophy: to maximize the duration of visits and user engagement. And after twenty years, we’re only now grappling with the cost: deteriorating mental health, social polarization, and rising rates of depression among teens whose brains are still developing.

To see AI now embracing that same logic — under the guise of “freedom” or “connection” — feels like déjà vu. We know where this road leads, and it’s paved with good intentions and unintended consequences.

At its core, this story raises a simple but essential truth: just because you can, doesn’t mean you should.

AI doesn’t need to mimic our desires to understand them. The real measure of progress isn’t how human AI can act — it’s how responsibly humans can use it.

Q&A: Ethics, Impact, and the Future of AI Companionship

Q: Why is OpenAI allowing erotica in ChatGPT now?

A: According to Sam Altman, OpenAI believes it has developed new safety measures and age-gating tools that can mitigate prior mental health risks. The company argues that verified adults should have more freedom in how they interact with AI personalities.

Q: What are the main ethical concerns around AI-generated erotica?

A: Critics cite dangers including emotional dependency, privacy breaches, and boundary confusion between simulated and real intimacy. There are fears this could commercialize human connection and normalize AI-driven companionship without sufficient ethical guardrails.

Q: Could AI erotica actually reduce human trafficking or exploitation?

A: Some advocates believe AI-generated companions might redirect demand away from exploitative industries, including pornography and human trafficking. However, others caution that displacement isn’t elimination — the danger lies in reinforcing unhealthy patterns or fantasies rather than reducing them.

Q: How does this decision compare to social media’s evolution?

A: Like Facebook, Instagram, and TikTok, OpenAI risks prioritizing engagement metrics over ethical responsibility. The concern is that, twenty years from now, we might face another wave of addiction, isolation, and mental health decline, but this time through AI-driven intimacy.

Q: What does responsible AI companionship look like?

A: The key difference is purpose. Compassion-driven designs like ElliQ, which help older adults combat loneliness through reminders and social engagement, demonstrate how AI can enhance well-being. In contrast, AI erotica risks monetizing emotional dependence rather than nurturing authentic connection.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.