A developer uses an agentic AI coding system similar to GPT-5.3-Codex to write, review, and refine code across multiple tools in a real-world development workflow. Image Source: ChatGPT-5.2

GPT-5.3-Codex: OpenAI Pushes Codex Beyond Coding Into Full Computer-Based Work

OpenAI has introduced GPT-5.3-Codex, its most capable agentic coding model to date, extending Codex beyond software generation into a broader assistant that can research, reason, and execute complex professional tasks directly on a computer. The new model combines the frontier coding performance of GPT-5.2-Codex with the reasoning and professional knowledge capabilities of GPT-5.2, while running 25% faster than previous versions.

According to OpenAI, GPT-5.3-Codex is designed to handle long-running, multi-step work involving research, tool use, and execution, while remaining interactive throughout the process. Users can steer the model mid-task without losing context, shifting Codex from a code-writing agent into what OpenAI describes as a general collaborator for professional work on a computer.

The release highlights OpenAI’s broader push toward agentic systems that can operate across software development, research, and professional computer-based work.

Key Takeaways: GPT-5.3-Codex

GPT-5.3-Codex is OpenAI’s most advanced agentic coding model, combining frontier software engineering performance with professional reasoning and computer-use capabilities.

The model supports long-running, multi-step workflows, including research, tool use, debugging, deployment, and execution on a computer.

GPT-5.3-Codex sets new industry highs on SWE-Bench Pro and Terminal-Bench 2.0 and performs strongly on OSWorld and GDPval.

OpenAI classifies GPT-5.3-Codex as “High capability” for cybersecurity tasks, triggering expanded safeguards under its Preparedness Framework.

The model is available to paid ChatGPT plans through the Codex app, CLI, IDE extensions, and the web, with API access planned.

How OpenAI Used Codex to Train and Deploy GPT-5.3-Codex

OpenAI says GPT-5.3-Codex played an instrumental role in its own development. According to the company, even early versions of the model demonstrated strong enough capabilities to be used internally to improve training and support deployment of later releases.

As development progressed, OpenAI describes Codex as becoming a core tool across research, engineering, and deployment workflows. The research team used Codex to monitor and debug training runs for GPT-5.3-Codex, track patterns throughout training, analyze interaction quality, and propose fixes. Codex was also used to build internal applications that helped researchers more precisely understand how the model’s behavior differed from prior versions.

On the engineering side, OpenAI says Codex was used to optimize and adapt the training harness for GPT-5.3-Codex. When edge cases began impacting users, engineers relied on Codex to identify context-rendering bugs and diagnose low cache hit rates. During launch, Codex continued to assist by dynamically scaling GPU clusters to respond to traffic surges while keeping latency stable.

OpenAI says these internal experiments weren’t about optimizing individual features, but about understanding how a more agentic model behaves across longer, more complex workflows.

During alpha testing, OpenAI describes researchers using GPT-5.3-Codex to better understand productivity and task progress across sessions. In one case, a researcher worked with Codex to create simple classifiers that analyzed clarification frequency, user feedback, and task progress across session logs, producing a report on how much work the model completed per turn.

OpenAI says the alpha data surfaced unexpected, counterintuitive results because GPT-5.3-Codex behaved so differently from earlier models. To interpret them, a data scientist worked with Codex to build new data pipelines and richer visualizations than existing internal dashboards allowed. Codex then summarized key insights across thousands of data points in under three minutes.

According to OpenAI, this sustained use of Codex across training, testing, and launch accelerated iteration across research, engineering, and product teams, supporting a faster, more controlled rollout of GPT-5.3-Codex.

Benchmark Performance: Coding, Agentic Tasks, and Computer Use

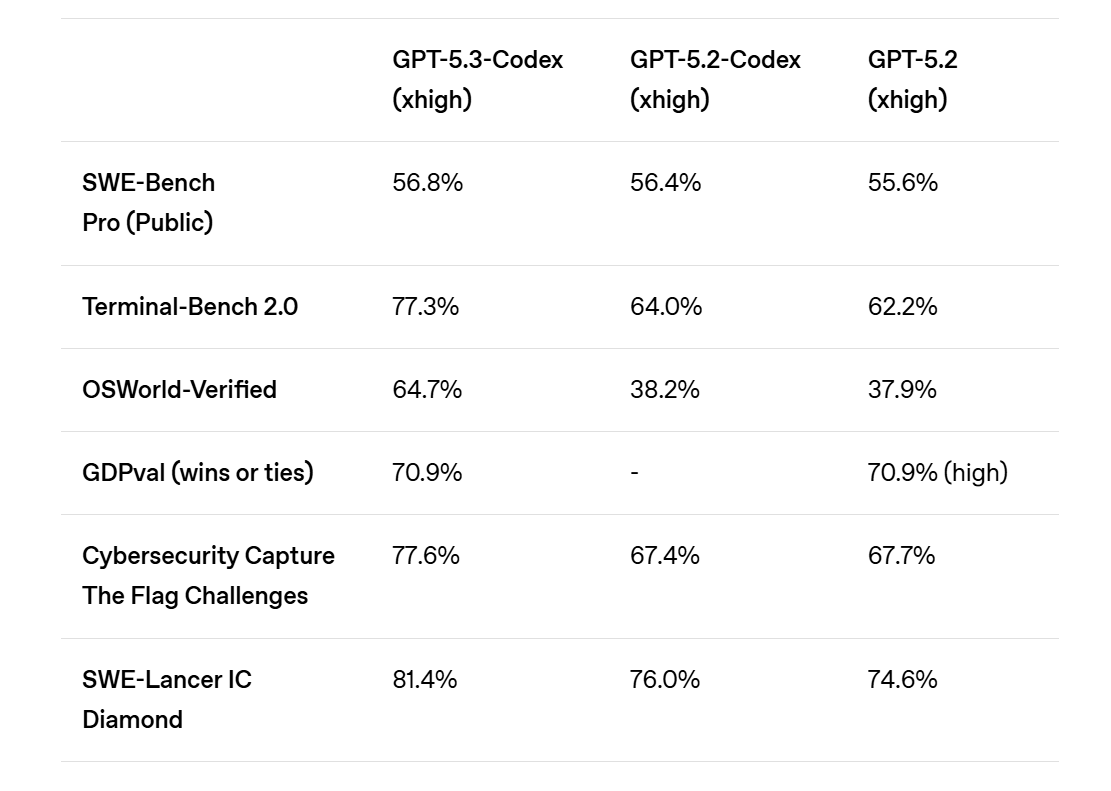

OpenAI says GPT-5.3-Codex sets a new industry high on SWE-Bench Pro and Terminal-Bench and performs strongly on OSWorld and GDPval, benchmarks OpenAI uses to assess coding ability, agentic behavior, professional knowledge work, and real-world computer use.

On SWE-Bench Pro, a rigorous evaluation of real-world software engineering, GPT-5.3-Codex achieved state-of-the-art performance. Unlike SWE-Bench Verified, which focuses only on Python, SWE-Bench Pro spans four programming languages and is designed to be more contamination-resistant, challenging, and industry-relevant.

OpenAI also reports that GPT-5.3-Codex significantly exceeds prior state-of-the-art results on Terminal-Bench 2.0, which measures command-line and terminal skills required for coding agents to operate effectively in real development environments. Notably, the model achieves these results while using fewer tokens than previous models.

Beyond code generation, OpenAI says GPT-5.3-Codex is built to support the full software lifecycle, including debugging, deployment, monitoring, writing product requirements documents, editing copy, user research, testing, and metrics analysis.

OpenAI adds that Codex’s agentic capabilities extend beyond software development, supporting professional work such as creating presentations or analyzing data in spreadsheets.

To evaluate this broader knowledge-work capability, OpenAI points to GDPval, an evaluation released in 2025 that measures performance across 44 occupations. These tasks include producing presentations, spreadsheets, and other structured work products. According to the company, GPT-5.3-Codex matches GPT-5.2 on GDPval, suggesting that its expanded agentic and coding capabilities do not come at the expense of general professional reasoning.

OpenAI also reports strong results on OSWorld, an agentic computer-use benchmark requiring models to complete productivity tasks in a visual desktop environment. According to the company, GPT-5.3-Codex demonstrates substantially stronger computer-use capabilities than earlier GPT models, reflecting improvements in interface navigation, tool management, and multi-step execution.

Taken together, OpenAI says these results show progress across coding, professional knowledge work, and computer-based execution rather than on isolated tasks. The company presents this as support for its view of GPT-5.3-Codex as a more general agent, capable of reasoning, building, and acting across real-world technical workflows.

Web Development and Long-Running Execution

Beyond traditional coding tasks, OpenAI says GPT-5.3-Codex is designed to support long-running projects that unfold over days rather than minutes. The company attributes this to improved coding capability, stronger aesthetic judgment, and more efficient context usage, enabling the model to autonomously build and refine complex applications over extended periods.

To test these long-running agentic capabilities, OpenAI asked GPT-5.3-Codex to build two web-based games: a second version of the racing game shown during the Codex app launch, and a separate diving game. Using a predefined game-development skill and generic follow-up prompts such as “fix the bug” or “improve the game,” OpenAI says GPT-5.3-Codex iterated autonomously over millions of tokens, refining functionality and gameplay.

OpenAI reports similar improvements in everyday web development tasks. Compared with GPT-5.2-Codex, the new model better interprets underspecified prompts, defaulting to more functional designs and sensible layout choices. According to the company, this results in outputs that feel more complete and production-ready by default.

As an example, OpenAI compared landing pages generated by GPT-5.3-Codex and GPT-5.2-Codex. GPT-5.3-Codex displayed a yearly subscription plan as a discounted monthly price and added an automatically rotating testimonial carousel with three distinct user quotes, producing a page that feels more intentional and usable without additional prompting.

Interactive Agent Design and Human-in-the-Loop Control

As AI agents become more capable, OpenAI says the primary challenge shifts from model intelligence to coordination and supervision: how easily humans can interact with, direct, and oversee multiple agents working in parallel.

The Codex app is designed to make agent management easier, and GPT-5.3-Codex builds on this by providing frequent progress updates, decision transparency, and real-time interaction during execution.

With GPT-5.3-Codex, users can ask questions, discuss approaches, and adjust direction mid-task. OpenAI says the model explains what it is doing, responds to feedback, and keeps users informed throughout the process.

OpenAI says this interaction model is critical to keeping humans in the loop as agentic systems take on longer-running, higher-impact work, where supervision, transparency, and course correction matter as much as raw performance.

Cybersecurity Safeguards and High-Risk Capabilities

OpenAI says recent improvements in GPT-5.3-Codex have produced meaningful gains in cybersecurity-related performance, benefiting both developers and security professionals. The company has also been preparing additional cybersecurity safeguards intended to support defensive use and strengthen ecosystem resilience.

In response to these changes, OpenAI has classified GPT-5.3-Codex as High capability for cybersecurity-related tasks under its Preparedness Framework, making it the first Codex model to receive that designation. The company says GPT-5.3-Codex is also the first model it has directly trained to identify software vulnerabilities. While OpenAI reports it does not have definitive evidence that the model can automate end-to-end cyberattacks, it says these capabilities warrant a precautionary approach.

That approach centers on OpenAI’s most comprehensive cybersecurity safety stack to date: safety training, automated monitoring, trusted access controls, and enforcement pipelines supported by threat intelligence.

Because cybersecurity work is inherently dual-use, OpenAI says it is taking an evidence-based, iterative approach to accelerate defensive research while limiting misuse. This includes Trusted Access for Cyber, a pilot program supporting cybersecurity research and defensive use cases.

OpenAI also highlighted ecosystem investments, including expanding the private beta of Aardvark, its security research agent, and partnering with open-source maintainers to provide free codebase scanning for widely used projects. In one example, a security researcher used Codex to identify Next.js vulnerabilities that Vercel has since publicly disclosed as CVE-2025-59471 and CVE-2025-59472.

In addition, OpenAI is committing $10 million in API credits to accelerate cyber defense research, building on its $1 million Cybersecurity Grant Program launched in 2023.

OpenAI also published a dedicated system card for GPT-5.3-Codex, outlining the model’s safety evaluations and explaining why certain cybersecurity capabilities are treated as high-risk under its Preparedness Framework.

Availability, Performance Improvements, and Infrastructure

GPT-5.3-Codex is available now through paid ChatGPT plans across the Codex app, CLI, IDE extensions, and the web. OpenAI says it is working to safely enable API access.

The company reports the model runs 25% faster due to improvements in infrastructure and inference, and that GPT-5.3-Codex was trained and served on NVIDIA GB200 NVL72 systems.

Codex App: Turning Model Capability Into Usable Workflows

Alongside the model release, OpenAI has expanded access to the Codex app, a desktop environment designed to help users manage multiple AI agents and long-running tasks in parallel.

The Codex app acts as a centralized interface where users can direct agents, review progress, and switch between projects without losing context. This application layer is intended to make GPT-5.3-Codex’s agentic capabilities more practical for real-world workflows.

By pairing GPT-5.3-Codex with the Codex app, OpenAI emphasizes not just what the model can do, but how professionals interact with, supervise, and coordinate increasingly capable agents in everyday work.

Q&A: Understanding GPT-5.3-Codex

Q: What is GPT-5.3-Codex?

A: GPT-5.3-Codex is OpenAI’s most advanced agentic coding model, designed to support software development, research, execution, and long-running professional tasks on a computer.

Q: How is GPT-5.3-Codex different from previous Codex models?

A: It combines frontier coding performance, professional reasoning, and computer-use capabilities in a single model, enabling end-to-end workflows.

Q: What benchmarks does GPT-5.3-Codex perform well on?

A: It sets new industry highs on SWE-Bench Pro and Terminal-Bench 2.0 and performs strongly on OSWorld and GDPval, where it matches GPT-5.2.

Q: Why is cybersecurity a focus in this release?

A: GPT-5.3-Codex is the first OpenAI model classified as High capability for cybersecurity-related tasks, prompting expanded safeguards and programs such as Trusted Access for Cyber.

Q: Where can users access GPT-5.3-Codex today?

A: Through paid ChatGPT plans via the Codex app, CLI, IDE extensions, and the web, with API access planned.

What This Means: From Coding Agents to Coordinated Execution

As AI models advance, the limiting factor is no longer whether they can write code but whether humans can coordinate, supervise, and trust them as they take on sustained, real-world work. GPT-5.3-Codex reflects OpenAI’s move toward agents that actively execute across tools, environments, and time.

Who should care:

Developers, engineering leaders, and organizations moving AI from experimentation into production.

Why it matters now:

As agentic systems scale, workflow visibility, safety controls, and human oversight become essential.

What decision this affects:

Whether AI remains a task-level productivity tool or becomes a coordinated collaborator across the software lifecycle.

How teams answer that question will determine whether agentic AI stays on the sidelines or becomes a dependable partner in everyday professional execution.

Sources:

OpenAI: Introducing GPT-5.3-Codex
https://openai.com/index/introducing-gpt-5-3-codex/

OpenAI: GPT-5.3-Codex System Card
https://openai.com/index/gpt-5-3-codex-system-card/

OpenAI: Introducing the Codex app
https://openai.com/index/introducing-the-codex-app/

OpenAI: Strengthening cyber resilience as AI capabilities advance
https://openai.com/index/strengthening-cyber-resilience/

OpenAI: Updating our Preparedness Framework
https://openai.com/index/updating-our-preparedness-framework/

OpenAI: Trusted Access for Cyber
https://openai.com/index/trusted-access-for-cyber/

OpenAI: Introducing Aardvark
https://openai.com/index/introducing-aardvark/

Vercel: Summaries of CVE-2025-59471 and CVE-2025-59472
https://vercel.com/changelog/summaries-of-cve-2025-59471-and-cve-2025-59472

OpenAI: OpenAI Cybersecurity Grant Program
https://openai.com/index/openai-cybersecurity-grant-program/

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.