A user explores new GPT-5 features like planning mode and memory capabilities from a personal laptop. Image Source: ChatGPT-4o

OpenAI Launches GPT-5: A New Era of Voice, Vision & Agents

Key Takeaways:

OpenAI launches GPT-5, its most advanced model yet, with real-time voice, video understanding, autonomous agentic reasoning, and zero-shot coding.

Built for real-world use—not just benchmarks. It’s already helping in finance, healthcare, education, and government.

Offers natural-sounding, customizable voice personalities and contextual visual intelligence.

Introduces massive improvements for developers: from live debugging to tool preambles, planning, and tunable latency.

Rolling out today to ChatGPT users on the Free, Plus, and Pro plans and to API users, with Enterprise and Education access next week.

A Smarter Voice with Personality

OpenAI unveiled GPT-5 in a highly anticipated livestream this morning. We watched it live, took detailed notes, and put together this breakdown of everything you need to know.

In a major leap forward for natural interaction, GPT-5’s real-time voice mode was front and center during the launch livestream. OpenAI demoed it as a Korean language tutor, where the model spoke clearly in Korean, paused after each phrase to allow repetition, and even adjusted its teaching style when prompted. This wasn't a script—it responded with context awareness and timing.

But what stole the show was this: GPT-5 no longer sounds robotic. Its responses are varied, contextual, and humanlike. Instead of delivering templated lines or repeating stock phrases, it changes tone, adds pauses for effect, and reacts with subtle emotion. The result is a voice assistant that feels less like a bot—and more like a person.

Users can now customize GPT-5’s voice personality, selecting tones that are:

Chatty or concise

Upbeat, thoughtful, or empathetic

Witty, encouraging, or calming

Whether you're learning a new language or getting feedback on a project, GPT-5 adapts its entire conversational style to match your needs. This isn't just a UX upgrade—it’s a foundation for emotional connection and better learning outcomes, especially in education and healthcare.

Another big shift with GPT-5 is that users no longer need to choose a specific model or tool—GPT-5 intelligently adapts its reasoning style, tools, and output to match the task at hand, whether it's code generation, visual analysis, casual conversation, or agentic workflows.

Voice mode also includes real-time visual context. In one demo, a user showed GPT-5 a mobile app screenshot with a misaligned navigation bar and asked, “What’s wrong here?” GPT-5 not only spotted the issue, but explained how it could harm usability and accessibility, then suggested layout improvements—all while speaking conversationally.

This fusion of voice, vision, and intelligence pushes GPT-5 far beyond a chat interface. It’s a truly multimodal collaborator.

Seeing Is Understanding: Vision and Context Awareness

GPT-5 takes the visual capabilities introduced in GPT-4o to the next level—by adding video comprehension, motion tracking, and visual memory.

In one example, a user uploaded two versions of a user interface and asked what changed. GPT-5 immediately noticed layout inconsistencies in the new version that could affect user flow and accessibility—something previous models like GPT-4 or GPT-4o struggled to do.

New capabilities include:

Comparing multiple images or video frames to detect changes over time

Interpreting screen recordings for workflow analysis or debugging

Assessing design for accessibility (like color contrast, font size, tappable targets)

OpenAI showed how GPT-5 could track a cursor moving through a web app, spot bugs introduced in the latest version, and even suggest ways to improve user experience.

This level of visual context awareness makes GPT-5 ideal for product teams, QA testers, accessibility experts, and designers working across complex platforms.

Developer Workflows: Zero-Shot Coding, Debugging, and Design

GPT-5 redefines what developers can do—with or without prior examples.

Its zero-shot coding capabilities let users describe an app in plain English, and GPT-5 delivers a production-ready build with no training data or examples required. This isn’t theoretical; it’s real today.

In one demo, a CFO described a finance dashboard, and GPT-5 built it from scratch: complete with charts, interactive capabilities like hover effects, UI/UX structure, and even deployable front-end code using HTML, CSS, and JavaScript. All from a single, natural language prompt.

In another example, GPT-5 built an entire video game, including character artwork, story arcs, dialogue, and sound—all without follow-up clarification.

For developers, it brings:

Live debugging: GPT-5 catches and corrects its own errors in real-time

Tool preambles: It explains what it's about to do before using tools—boosting transparency

Agentic planning: Lays out multistep plans before acting, like a junior developer who thinks before coding

Tunable latency: Adjust for faster but shallower or slower but deeper responses, depending on task

Custom grammars: You can define strict syntax or data formats GPT-5 must follow
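To make the custom grammars idea concrete, here is a minimal Python sketch of what a "strict format" means in practice. It does not call any OpenAI API; the pattern and the function name are hypothetical, chosen only for illustration. It also checks text after the fact, whereas a grammar-constrained model would be restricted while generating.

```python
import re

# Hypothetical strict format (an assumption for this example):
# an ISO-style date, one space, then an amount with exactly two decimals.
LINE_PATTERN = re.compile(r"\d{4}-\d{2}-\d{2} \d+\.\d{2}")


def follows_format(text: str) -> bool:
    """Return True only if every non-empty line matches the strict format."""
    lines = [line for line in text.splitlines() if line.strip()]
    # Empty output is treated as a failure rather than a trivially valid result.
    return bool(lines) and all(LINE_PATTERN.fullmatch(line) for line in lines)
```

A response such as `2025-08-07 1.25` would pass this check, while free-form text such as `Aug 7: $1.25` would not.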

The model doesn’t just respond—it participates in the dev cycle like a capable assistant or pair programmer.

Cursor CEO: GPT-5 Is Now Our Default

Cursor, the AI coding environment, has now made GPT-5 its default model, replacing GPT-4.

During the launch, Cursor’s CEO explained how GPT-5 has fundamentally changed how their team works. Key upgrades:

Better state memory: GPT-5 understands and maintains context across sessions, allowing long-term, iterative work

Infrastructure awareness: It sees the big picture of your codebase—not just snippets—and makes architectural suggestions

Proactive reasoning: Instead of just completing code, it questions and improves logic flow

The result? Projects that once took days now take minutes. GPT-5 isn't just helping Cursor users—it’s shaping the future of code collaboration.

Creative Thinking and Playful Moments

GPT-5 brings not just intelligence, but emotional depth.

One standout moment during the livestream was a heartfelt and hilarious eulogy for GPT-3.5 and GPT-4o, delivered by GPT-5 itself. It struck a balance of humor, nostalgia, and empathy, showcasing the model’s ability to speak with real emotional intelligence.

OpenAI also introduced a “think harder” mode—an optional setting that tells GPT-5 to go deeper on complex tasks. This mode is ideal for:

Scriptwriting

Marketing campaigns

Philosophical arguments

Long-form storytelling

Instead of rushing to an answer, GPT-5 reflects, structures, and refines. It’s a major step toward AI that can brainstorm and collaborate at a high level.

Enterprise, Healthcare, and Government Adoption

GPT-5 isn’t just a lab experiment. It’s already live in mission-critical systems:

Amgen is using GPT-5’s visual reasoning for drug discovery, helping scientists analyze complex molecular structures.

BBVA is deploying GPT-5 to automate financial reports and predictive risk models.

Oscar Health is using it to improve clinical decision-making, with GPT-5 generating accurate, plain-language treatment explanations for doctors and patients.

U.S. Federal Agencies are using GPT-5 to summarize legal documents, generate training materials, and assist in compliance workflows.

The pattern is clear: GPT-5 is moving from novelty to necessity.

Safety and Alignment: Smarter Guardrails

Among the most important upgrades in GPT-5 are safety and transparency.

OpenAI developed a curriculum-based alignment system, which helps GPT-5 reason through edge cases and ethical prompts with care. In testing, it consistently:

Asked clarifying questions before responding to unclear or dangerous prompts

Declined unsafe requests and redirected users with accurate, alternative responses

Explained why it couldn’t comply—instead of just refusing without context

Crucially, GPT-5 is designed to resist deception. OpenAI ran trials where it was prompted to lie, rationalize unethical actions, or omit information—and GPT-5 refused. Unlike earlier models, it doesn’t just follow instructions blindly.

It’s also more resistant to jailbreaks and prompt injections, making it a stronger candidate for enterprise-grade deployment.

This is a step toward trustworthy AI—not just powerful AI.

Pricing and Access

OpenAI is bringing this frontier intelligence to everyone. GPT-5 begins rolling out today to users on the Free, Plus, and Pro plans. Enterprise and Education (EDU) users will receive access starting next week.

For the first time ever, OpenAI's most advanced model will be available to free-tier users. When free users reach their usage limit with GPT-5, the system will automatically transition them to GPT-5 Mini—a smaller, faster, but still highly capable version of the model.

GPT-5 Model Variants:

GPT-5 (Full): Voice, vision, agentic reasoning, and memory

GPT-5 Mini: Lightweight version that free users are switched to after reaching their GPT-5 usage limit

GPT-5 Nano: Enterprise-grade, private, and latency-optimized

Pricing:

| Model | Input Pricing (per 1M tokens) | Notes |
|---|---|---|
| GPT-5 | $1.25 | Full-featured model |
| GPT-5 Mini | Not specified | Faster than GPT-5 |
| GPT-5 Nano | ~25x cheaper than GPT-5 | Ultra-low-cost for lightweight applications |
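As a rough illustration of what these input prices mean for a budget, the Python sketch below estimates input cost from a token count. The $1.25 rate comes from the table; the Nano rate is only derived from the approximate "25x cheaper" figure, so treat it as an estimate rather than a published price. Output-token pricing is not covered here, and the function and constant names are hypothetical.

```python
GPT5_INPUT_USD_PER_MILLION = 1.25  # input price listed in the table
NANO_DISCOUNT_FACTOR = 25          # "~25x cheaper" is approximate, not exact

# Derived estimate only: roughly $0.05 per 1M input tokens.
NANO_INPUT_USD_PER_MILLION = GPT5_INPUT_USD_PER_MILLION / NANO_DISCOUNT_FACTOR


def input_cost_usd(tokens: int, usd_per_million: float) -> float:
    """Estimate the input cost in USD for a given number of tokens."""
    return tokens / 1_000_000 * usd_per_million


if __name__ == "__main__":
    tokens = 200_000
    print(f"GPT-5: ${input_cost_usd(tokens, GPT5_INPUT_USD_PER_MILLION):.2f}")
    print(f"Nano (estimated): ${input_cost_usd(tokens, NANO_INPUT_USD_PER_MILLION):.2f}")
```

Under these assumptions, 200,000 input tokens would cost about $0.25 on GPT-5 and about $0.01 on GPT-5 Nano.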

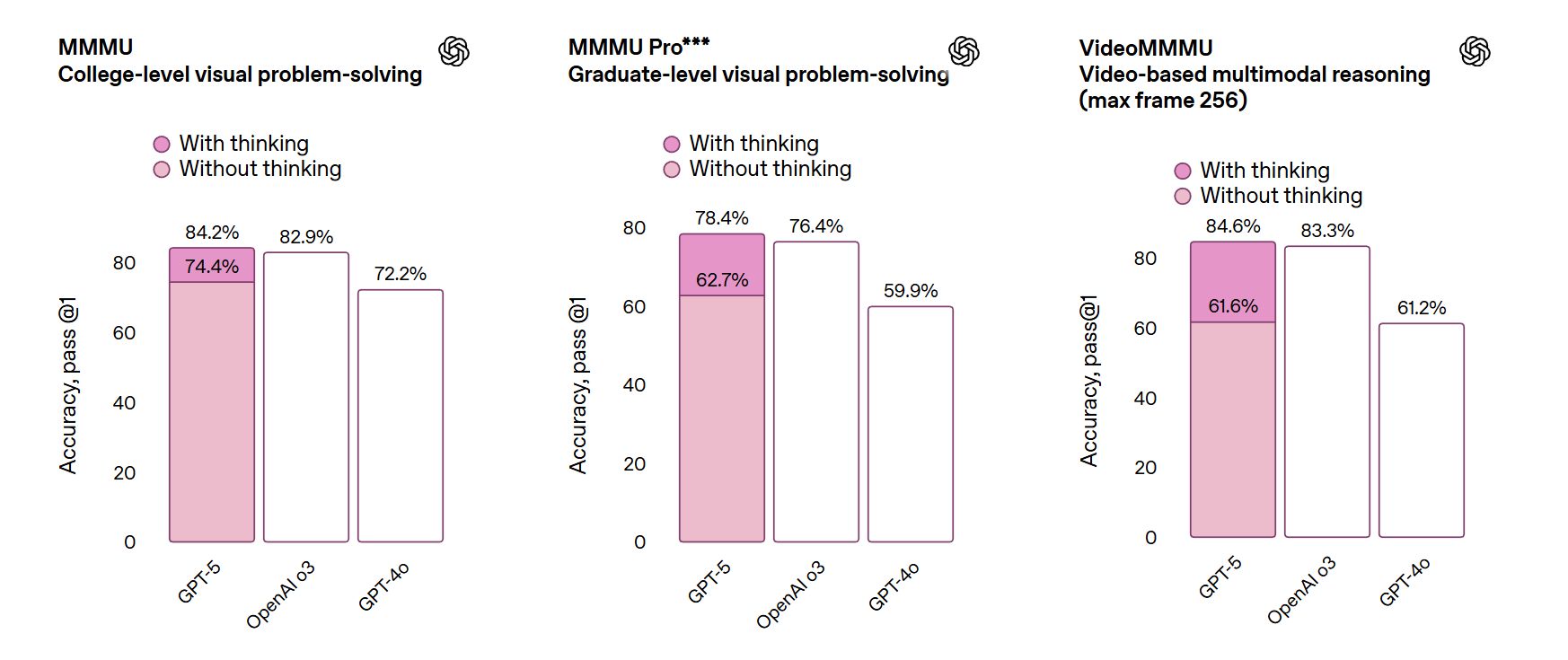

Benchmark Performance: Smarter, Safer, and More Accurate

OpenAI presented new benchmark data during the GPT-5 livestream showing measurable improvements in reasoning, reliability, and real-world performance across a variety of domains.

On standard academic benchmarks:

GPQA (Graduate-Level Questions): GPT-5 scored 95%, significantly outperforming GPT-4o’s 85% and other leading models.

MMLU (Multitask Language Understanding): GPT-5 achieved near-perfect scores, continuing its lead on this critical reasoning test.

HMMT & Tau2 (Math & Agentic Tool-Use Tests): GPT-5 delivered top-tier performance on HMMT, a competition-math benchmark that includes challenging Olympiad-level problems, and on Tau2, which measures agentic tool use.

In healthcare and scientific reasoning:

HealthBench: GPT-5 not only gave more accurate responses but also reduced critical hallucinations by 83%, a key safety upgrade over previous models.

Helpful, Honest, and Harmless (HHH): GPT-5 improved across all three dimensions—showing fewer hallucinations and better refusal behavior when prompted with unsafe or unethical requests.

Coding and developer tools:

HumanEval & SWE Bench: GPT-5 wrote cleaner, more deployable code with higher accuracy in real-world software engineering tasks. In live demos, it debugged and built entire apps with zero-shot prompts.

Deception and safety guardrails:

GPT-5 significantly reduced deceptive behavior compared to earlier models when tested with adversarial inputs. According to OpenAI, it passed the Humanity’s Last Exam benchmark with higher truthfulness and transparency than all prior models.

These results highlight OpenAI’s dual focus: pushing performance forward while strengthening safeguards. GPT-5 isn’t just smarter—it’s safer, too. For more benchmarks, please visit OpenAI’s blog announcement.

Q&A: What You Need to Know About GPT-5

Q: How do I use GPT-5?

A: Via the API or ChatGPT. Free, Plus, and Pro users get access now; Enterprise and Education users follow next week.

Q: What’s new compared to GPT-4o?

A: Adds video reasoning, customizable voice, zero-shot coding, deeper memory, and enhanced safety.

Q: What are Mini and Nano?

A: Mini = lighter version for free use. Nano = optimized for enterprise speed/privacy.

Q: Is it really safer?

A: Yes. GPT-5 explains refusals, avoids manipulation, and remains transparent under pressure.

Q: Can GPT-5 still use tools like code interpreters or web browsing?

A: Yes. GPT-5 supports tool use, including file uploads, code execution, browsing, and third-party plugins—with improved transparency and accuracy thanks to tool preambles and better planning.

What This Means

GPT-5 isn’t just a better chatbot—it’s a full-stack AI partner.

It speaks like a human, sees like a designer, thinks like a strategist, and adapts like a true teammate. It can build apps, tutor students, analyze medical charts, and help governments work more efficiently—all with safety and trust built in.

This isn’t a glimpse of what’s coming—it’s the start of a new kind of collaboration between humans and machines, one that will define how we work, learn, and create.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.