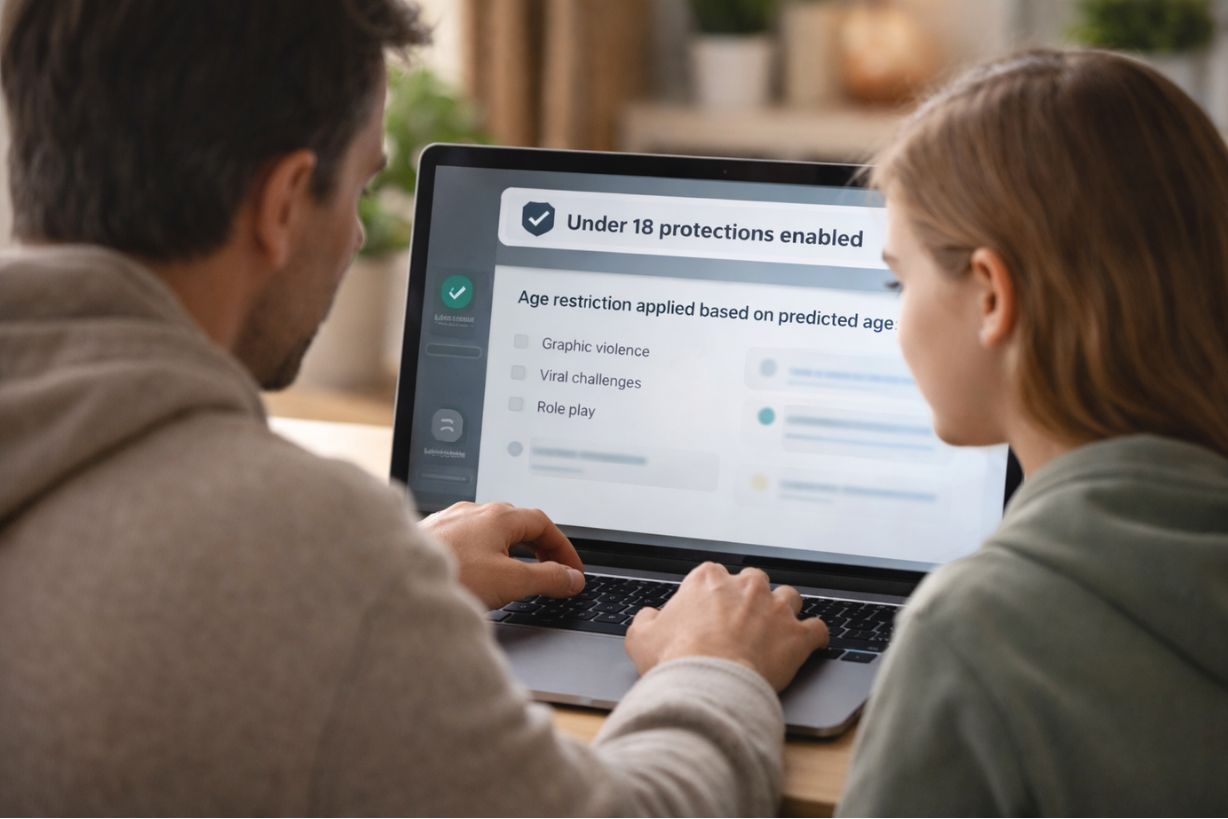

OpenAI’s age prediction system applies teen safety protections directly within ChatGPT, supporting age-appropriate AI use at home. Image Source: ChatGPT-5.2

OpenAI Begins Rolling Out Age Prediction on ChatGPT to Expand Teen Safety Protections

OpenAI has begun rolling out an age prediction system across ChatGPT consumer plans to help determine whether an account likely belongs to someone under the age of 18, building on existing teen safety protections already in place. The goal, according to the company, is to apply the appropriate safeguards for teens while maintaining full functionality for adult users within established safety boundaries.

The rollout builds on OpenAI’s previously published Teen Safety Blueprint and Under-18 Principles for Model Behavior, which emphasize both expanding access to technology and protecting young users’ well-being.

Key Takeaways: OpenAI’s Age Prediction Rollout

OpenAI is deploying an age prediction system on ChatGPT that goes beyond self-reported age.

The system uses behavioral signals and account-level signals to estimate whether an account likely belongs to someone under 18.

Accounts identified as potentially under 18 automatically receive additional safety protections.

Users incorrectly placed in the under-18 experience can verify their age via selfie using Persona, a third-party identity verification service.

EU rollout is expected in the coming weeks to meet regional requirements.

Why OpenAI Is Introducing Age Prediction

OpenAI stated that age prediction is intended to strengthen protections already in place for teens using ChatGPT. Users who self-identify as under 18 during sign-up already receive additional safeguards designed to reduce exposure to sensitive or potentially harmful content.

Age prediction helps extend these protections to accounts where age information may be missing or inaccurate, while also allowing adults to use ChatGPT with fewer unnecessary restrictions.

How Age Prediction Works

ChatGPT uses an age prediction model to estimate whether an account likely belongs to someone under 18. OpenAI said the model evaluates a combination of behavioral signals and account-level signals, including:

How long an account has existed

Typical times of day when the account is active

Usage patterns over time

A user’s stated age

Deploying age prediction allows OpenAI to learn which signals improve accuracy and to use those findings to refine the model over time.

When the system estimates that an account may belong to a minor, ChatGPT automatically applies additional safeguards. When OpenAI is not confident about a user’s age or has incomplete information, the company said it defaults to a safer experience.
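The "default to a safer experience" rule described above can be illustrated with a minimal sketch. This is not OpenAI's implementation: the `AgeEstimate` type, the `select_experience` function, the experience labels, and the 0.9 threshold are all hypothetical, chosen only to show how a system might fall back to teen safeguards whenever it lacks information or confidence.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: OpenAI has not published how confidence is measured.
ADULT_CONFIDENCE_THRESHOLD = 0.9


@dataclass
class AgeEstimate:
    # Estimated probability that the account holder is 18 or older,
    # or None when there is too little information to produce an estimate.
    adult_probability: Optional[float]
    # True once the user has confirmed their age (for example, via a selfie check).
    verified_adult: bool = False


def select_experience(estimate: AgeEstimate) -> str:
    """Return "standard" or "teen_safeguards" (illustrative labels only)."""
    if estimate.verified_adult:
        # A completed age verification overrides the prediction.
        return "standard"
    if estimate.adult_probability is None:
        # Incomplete information: fall back to the safer experience.
        return "teen_safeguards"
    if estimate.adult_probability >= ADULT_CONFIDENCE_THRESHOLD:
        return "standard"
    # Low confidence that the user is an adult: apply safeguards.
    return "teen_safeguards"
```

In this sketch, uncertainty is treated the same way as a likely-minor prediction, which mirrors the trade-off the company describes: some adults may be misclassified, and verification is the route back to full access.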

What Protections Are Applied to Teen Accounts

When age prediction indicates that an account likely belongs to someone under 18, ChatGPT applies additional protections designed to reduce exposure to sensitive content, including:

Graphic violence or gory content

Viral challenges that could encourage risky or harmful behavior

Sexual, romantic, or violent role play

Depictions of self-harm

Content promoting extreme beauty standards, unhealthy dieting, or body shaming

OpenAI said these restrictions are guided by expert input and academic research on child development, including known differences in risk perception, impulse control, peer influence, and emotional regulation among teens.

The company also noted that it is focused on improving protections over time, particularly to address attempts to bypass safeguards.

Age Verification and User Control

OpenAI stated that users who are incorrectly placed into the under-18 experience will always have a fast and simple way to confirm their age and restore full access. Verification is done through a selfie check using Persona, a secure identity-verification service.

Users can check whether safeguards have been applied to their account and begin the verification process at any time by navigating to Settings > Account.

Parental Controls and Customization

In addition to automatic safeguards, OpenAI said parents can further customize their teen’s experience through parental controls, including:

Setting quiet hours when ChatGPT cannot be used

Controlling features such as memory or model training

Receiving notifications if signs of acute distress are detected

These controls are designed to give families additional flexibility while maintaining baseline safety protections.
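As a rough illustration of how a quiet-hours setting might be evaluated, the sketch below checks whether a given time falls inside a parent-configured window, including windows that cross midnight. The `in_quiet_hours` function and its parameters are assumptions made for this example; OpenAI has not described how the feature is implemented.

```python
from datetime import time


def in_quiet_hours(now: time, start: time, end: time) -> bool:
    """Return True if `now` falls inside the quiet-hours window [start, end).

    Hypothetical helper: windows may wrap past midnight (e.g. 22:00 to 07:00).
    """
    if start == end:
        # Assumption for this sketch: identical start and end means no quiet hours.
        return False
    if start < end:
        # Window contained within a single day.
        return start <= now < end
    # Window wraps past midnight.
    return now >= start or now < end
```

For example, with a window from 22:00 to 07:00, a request at 23:30 would fall inside quiet hours, while one at 12:00 would not.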

Rollout, Regional Considerations, and Ongoing Improvements

OpenAI said it is continuing to learn from the initial rollout of age prediction and plans to improve the system’s accuracy over time. According to the company, rollout performance and user signals will be closely monitored to guide ongoing refinements.

In the European Union, OpenAI stated that age prediction will roll out in the coming weeks to account for regional regulatory requirements.

While describing the rollout as an important milestone, OpenAI emphasized that its work to support teen safety is ongoing. The company said it will continue sharing updates on its progress and engage in dialogue with external experts, including the American Psychological Association, ConnectSafely, and the Global Physicians Network.

Q&A: OpenAI’s Age Prediction System

Q: What is OpenAI’s age prediction system designed to do?

A: According to OpenAI, the system estimates whether a ChatGPT account likely belongs to someone under 18 so that appropriate safeguards can be applied automatically.

Q: Does OpenAI require users to provide identification by default?

A: No. OpenAI said age prediction relies on behavioral and account-level signals. Identity verification via selfie is only required if a user wants to correct an incorrect under-18 classification.

Q: What happens if OpenAI is unsure about a user’s age?

A: OpenAI stated that when age information is uncertain or incomplete, the system defaults to a safer experience.

Q: When will age prediction be available in the EU?

A: OpenAI said the rollout in the European Union will begin in the coming weeks to account for regional requirements.

What This Means: Age, Safety, and Trust in Consumer AI

OpenAI’s rollout of age prediction reflects a growing reality for consumer AI systems: self-reported age is no longer sufficient when tools are widely accessible, increasingly capable, and used by people at very different stages of development. As AI becomes part of everyday life, platforms are being forced to consider not just what users say about themselves, but when the system itself should step in to apply safeguards.

By using behavioral and account-level signals to determine when teen protections should apply, OpenAI is shifting age-related safety decisions into the design of the system itself. That approach aims to reduce reliance on manual disclosure while avoiding mandatory identity checks for all users—placing more responsibility on the platform to balance protection, accuracy, and user autonomy.

For teens, this means safety measures can apply even when age information is incomplete or inaccurate. For adults, it is intended to preserve access without unnecessary restrictions. But more broadly, the rollout highlights a key challenge facing AI platforms: how systems make judgments that affect user experience, and how transparent and correct those judgments need to be to maintain trust.

As governments, parents, and technology companies grapple with age-appropriate AI use, OpenAI’s approach offers an early example of how large platforms may navigate safety and privacy without defaulting to universal identification. The outcome of this effort—how well it works in practice and how it is received by users—will likely influence how age awareness is built into consumer AI systems going forward.

Sources:

OpenAI – Our Approach to Age Prediction

https://openai.com/index/our-approach-to-age-prediction/

OpenAI – Introducing the Teen Safety Blueprint

https://openai.com/index/introducing-the-teen-safety-blueprint/

OpenAI – Updating Model Spec With Teen Protections

https://openai.com/index/updating-model-spec-with-teen-protections/

OpenAI – Building Toward Age Prediction

https://openai.com/index/building-towards-age-prediction/

OpenAI Help Center – Parental Controls on ChatGPT: FAQ

https://help.openai.com/en/articles/12315553-parental-controls-on-chatgpt-faq

OpenAI – Strengthening ChatGPT Responses in Sensitive Conversations

https://openai.com/index/strengthening-chatgpt-responses-in-sensitive-conversations/

OpenAI Help Center – Age Prediction in ChatGPT

https://help.openai.com/en/articles/12652064-age-prediction-in-chatgpt

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.