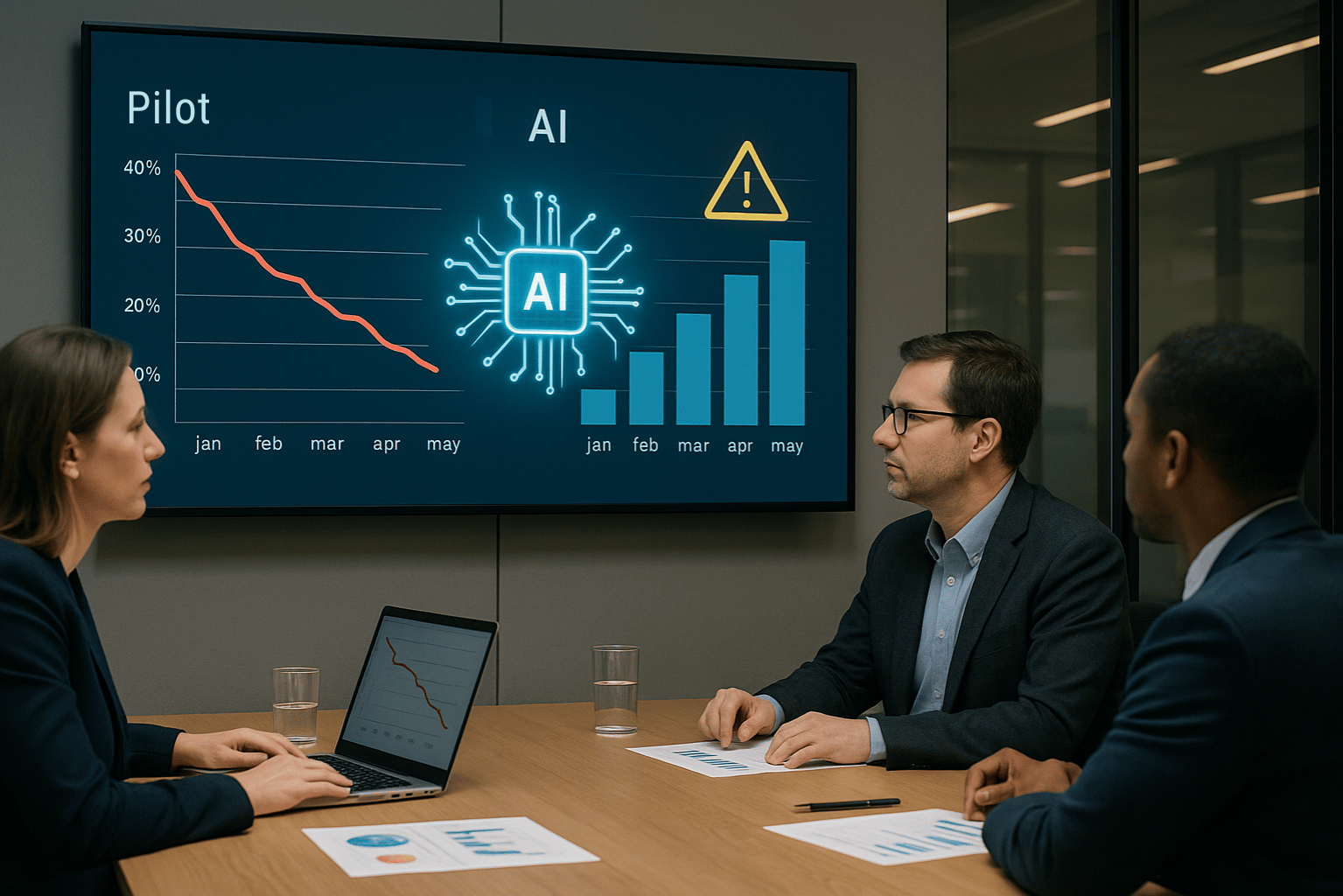

Executives analyze the disappointing results of generative AI pilot programs during a strategy meeting. Image Source: ChatGPT-5

MIT Report: 95% of Generative AI Pilots Are Failing in Business

Key Takeaways:

95% of generative AI pilots fail to produce measurable impact, according to the MIT NANDA report.

Only 5% of pilots succeed, creating millions in value by focusing on narrow workflows and adaptive systems.

Specialized vendors succeed ~67% of the time, while in-house builds succeed only ~33%.

AI budgets are misallocated—over half flows to sales and marketing, but the highest ROI comes from back-office automation.

Workforce impacts are gradual: companies aren’t cutting staff en masse, but they are leaving vacated entry-level roles in support and admin functions unfilled.

MIT’s Findings on the GenAI Divide

The MIT NANDA initiative has published The GenAI Divide: State of AI in Business 2025, revealing that despite $30–40 billion in enterprise investment, 95% of companies see no measurable return on generative AI.

Researchers analyzed more than 300 public AI deployments, conducted 52 structured interviews, and surveyed 153 senior leaders across industries. The report concludes that adoption is high—94% of organizations use AI in some form—but meaningful transformation is rare. Only two of nine major industries (technology and media) show signs of structural disruption.

MIT calls this gap the “GenAI Divide.” On one side are the few organizations crossing into real business transformation. On the other side are the majority stuck with stalled pilots, brittle workflows, and no return on investment.

Why Generative AI Pilots Fail

According to MIT, the failures are not primarily about model quality or regulation, but about a “learning gap.” Most enterprise systems fail to retain feedback, adapt to context, or improve over time, leaving them unable to integrate into day-to-day workflows.

Tom’s Hardware underscores this finding, reporting that “95% of generative AI implementations deliver little to no measurable impact on P&L.” The issue is not whether tools like ChatGPT work in theory, but whether they align with existing workflows. Describing the few deployments that do succeed, MIT researcher Aditya Challapally explained:

“It’s because they pick one pain point, execute well, and partner smartly with companies who use their tools.”

TechRadar adds that the few winners—sometimes startups led by very young founders—are scaling revenues “from zero to $20 million in a year” by targeting one use case and executing with precision.

Vendor vs. In-House Approaches

The report and both media outlets highlight stark differences between buying and building:

External partnerships succeed ~67% of the time.

In-house builds succeed ~33% of the time.

Despite this, many organizations in regulated industries like finance and healthcare still choose to build internally to minimize compliance risks, even though this approach is more likely to fail.

Misallocated Investments

MIT’s research shows that 50–70% of AI budgets go to sales and marketing tools, because outcomes are visible and easy to report to boards. Yet the highest ROI comes from back-office automation—processes like finance, procurement, and customer support.

This misalignment explains why most projects stall: money is flowing to high-visibility tools that deliver flash but not ROI, while back-office applications quietly eliminate millions in outsourcing and agency costs for the rare companies crossing the divide.

Workforce Impacts Emerging

Both MIT and Tom’s Hardware confirm that generative AI is not yet driving mass layoffs. Instead, organizations are reshaping their workforces through attrition—not refilling entry-level support and administrative roles.

In sectors where AI disruption is strongest (technology and media), over 80% of executives expect reduced hiring volumes within 24 months. Meanwhile, executives across industries emphasize AI literacy as a key hiring criterion, with younger workers often outpacing experienced professionals on that measure.

Risks and Governance

CIOs interviewed by MIT stressed that the risks of generative AI go beyond performance. The key concerns are:

Protecting intellectual property and data boundaries.

Managing bias and accuracy in public-model outputs.

Preventing shadow AI, where employees use personal ChatGPT or Claude accounts outside of company governance frameworks.

Without strong governance, organizations risk compliance failures and reputational damage—factors that can stall or reverse AI initiatives.

Q&A: Generative AI Pilots

Q: What is the GenAI Divide?

A: MIT defines it as the gap where 95% of organizations fail to extract value from generative AI, while only 5% achieve measurable success.

Q: Why do most generative AI pilots fail?

A: Because of the learning gap—systems don’t adapt to workflows, retain memory, or improve with feedback.

Q: Which AI deployments succeed?

A: Those that focus narrowly on one workflow, partner externally, and demand measurable business outcomes.

Q: Are in-house AI projects effective?

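A: Less often than vendor partnerships: external partnerships succeed about twice as often as in-house builds (~67% versus ~33%). Regulated industries such as finance and healthcare still often build internally, despite the higher failure rate.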

Q: How is AI affecting jobs?

A: Rather than laying off staff, companies are leaving vacated entry-level roles unfilled, especially in support and admin, which signals a gradual shift.

Lessons for AI Businesses

MIT’s findings, reinforced by Tom’s Hardware and TechRadar, provide clear lessons for companies adopting generative AI:

Pick one pain point. Success comes from narrow focus, not broad ambition.

Prioritize back-office ROI. The highest returns come from automating internal operations, not flashy sales tools.

Buy, don’t build. Vendor partnerships are twice as likely to succeed as internal projects.

Insist on learning systems. Tools must adapt, retain context, and improve over time.

Govern shadow AI. Employees already use ChatGPT privately; organizations must channel this into secure, sanctioned use.

Looking Ahead

The MIT study highlights a sobering truth: most corporate AI efforts are wasting time and money. But it also shows a path forward. Organizations that focus on workflow integration, vendor partnerships, and adaptive systems can cross the GenAI Divide and unlock real transformation.

For businesses, the message is clear: stop chasing hype and start demanding learning systems that deliver measurable outcomes.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.