Image Source: ChatGPT-4o

Mistral Medium 3 Launches With High Performance and Lower Cost

Mistral AI has released Mistral Medium 3, a new language model that delivers strong performance in professional tasks—especially coding—while significantly reducing cost and complexity for enterprise users. The model stands out for matching or exceeding much larger competitors on industry benchmarks at a fraction of the price.

According to Mistral, Medium 3 delivers at least 90% of Claude Sonnet 3.7's benchmark performance at a considerably lower cost: $0.40 per million input tokens and $2 per million output tokens. It also outperforms open and enterprise models such as Llama 4 Maverick and Cohere Command A in coding, instruction following, and multimodal tasks. Here are the key highlights that set the model apart:

Key Highlights

Mistral Medium 3 delivers on both performance and efficiency, offering strong results across technical benchmarks at significantly lower cost.

Performance:

Across industry-standard benchmarks, the model matches or exceeds the performance of larger proprietary and open models—particularly in coding, reasoning, and long-context tasks.

Achieves top results on HumanEval (92.1%), ArenaHard (97.1%), and RULER 32K (96%).

Outperforms Llama 4 Maverick and GPT-4o in multiple categories, including coding and math.

Comes close to Claude Sonnet 3.7 on MultiPL-E (81.4% vs. 83.4%) and MMLU Pro (77.2% vs. 80.0%), despite being significantly smaller and more cost-efficient.

Falls behind DeepSeek 3.1 on LiveCodeBench (30.3% vs. 42.9%) and MultiPL-E (81.4% vs. 83.8%), but delivers stronger overall performance across coding, instruction-following, and long-context benchmarks.

Efficiency and Usability:

Mistral positions Medium 3 as a new class of model that combines state-of-the-art performance with what the company describes as 8x lower cost and simpler deployment, making it easier for enterprises to adopt and scale advanced AI.
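To make the reported pricing concrete, here is a minimal Python sketch that converts the list prices Mistral quotes ($0.40 per million input tokens, $2 per million output tokens) into a cost estimate. The function name `estimate_cost` and the workload figures are hypothetical, and the sketch assumes flat per-token pricing with no discounts, caching, or platform-specific rates, so actual bills on Mistral's platform or Amazon SageMaker may differ.

```python
# Minimal sketch: estimate Mistral Medium 3 API cost from the reported list prices.
# Assumption: flat per-token pricing; real billing may vary by platform or plan.

INPUT_PRICE_PER_M = 0.40   # USD per 1M input tokens (as reported by Mistral)
OUTPUT_PRICE_PER_M = 2.00  # USD per 1M output tokens (as reported by Mistral)


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for the given token volumes (hypothetical helper)."""
    input_cost = (input_tokens / 1_000_000) * INPUT_PRICE_PER_M
    output_cost = (output_tokens / 1_000_000) * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


# Hypothetical monthly workload: 50M input tokens and 10M output tokens.
monthly = estimate_cost(50_000_000, 10_000_000)
print(f"${monthly:.2f}")  # prints "$40.00"
```

Under these assumptions, the hypothetical workload splits evenly at $20 for input and $20 for output, which shows how output tokens, priced five times higher, can dominate the bill for generation-heavy tasks even when they make up a smaller share of total volume.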

Enterprise Features:

Designed with real-world business needs in mind, Mistral Medium 3 offers flexibility, customization, and secure deployment options that make it easier to integrate into enterprise systems.

Supports hybrid, on-premises, and virtual private cloud (VPC) deployments.

Allows custom post-training and integration with enterprise tools.

Designed for domain-specific adaptation with continuous pretraining and fine-tuning options.

Multimodal and Language Support:

In addition to text performance, the model shows strong results in multimodal tasks and supports high-quality responses across multiple languages.

Performs well in multimodal benchmarks like DocVQA (95.3%) and AI2D (93.7%).

Consistently leads Llama 4 Maverick in multilingual comparisons, with win rates of 66.7% in English, 71.4% in French, 73.3% in Spanish, and 64.7% in Arabic.

Deployment Options:

Mistral Medium 3 is available through multiple platforms, with support for both managed services and self-hosted environments—allowing teams to choose the infrastructure that fits best.

Available via Mistral’s platform and Amazon SageMaker.

Coming soon to IBM WatsonX, NVIDIA NIM, Azure AI Foundry, and Google Cloud Vertex.

Supports deployment on self-hosted environments with four or more GPUs.

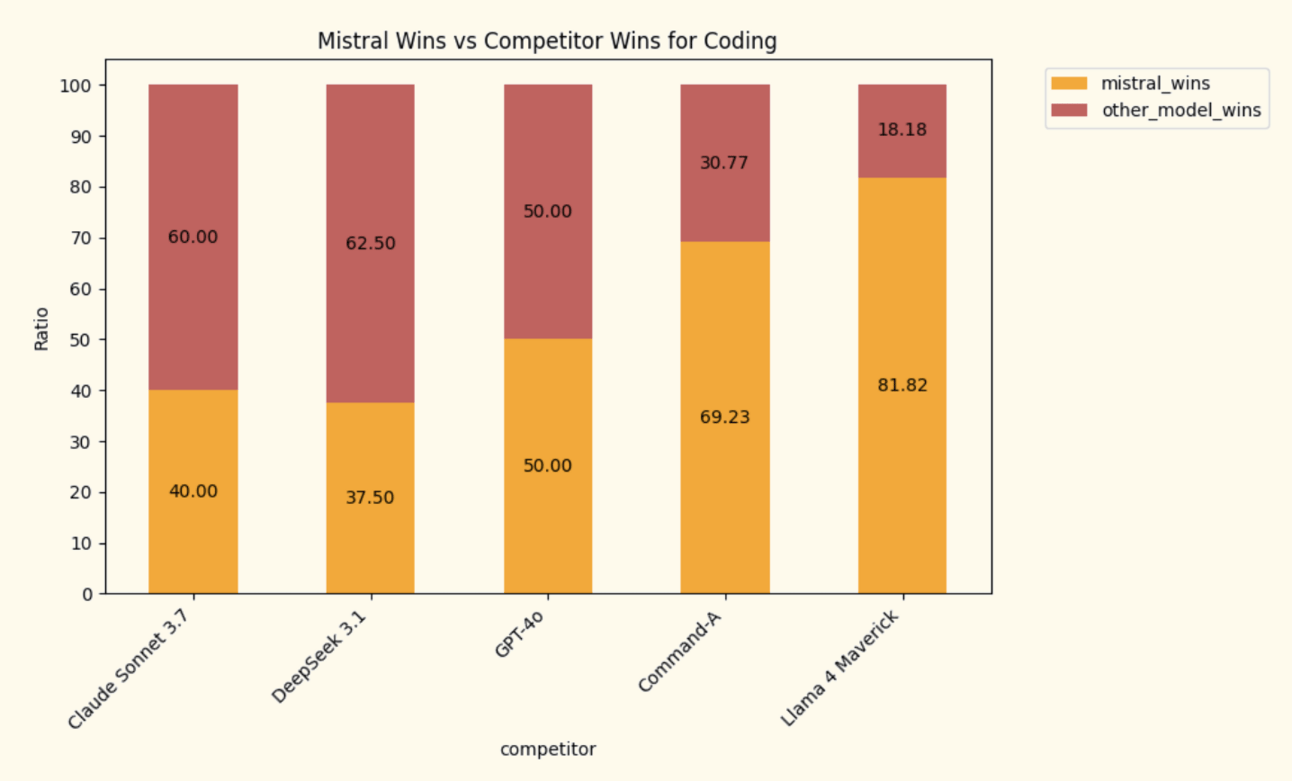

Coding Benchmark Leadership

In head-to-head coding evaluations, Mistral Medium 3 outperforms or matches major models in the space:

Against Llama 4 Maverick: Wins 81.8% of coding tasks.

Against Command A: Wins nearly 70% of comparisons.

Against GPT-4o: Ties, splitting comparisons evenly at 50/50.

Overall: Ranks at or near the top on HumanEval and MultiPL-E, though it trails DeepSeek 3.1 on LiveCodeBench.

These results come from Mistral's internal evaluation pipeline and from human assessments by third-party annotators, suggesting a real-world coding advantage rather than strength on academic benchmarks alone.

Built With Enterprise in Mind

Unlike many models that force a choice between API-based fine-tuning and full self-deployment, Mistral offers both. Organizations can continuously pretrain and fully fine-tune Medium 3 to integrate it into internal workflows and proprietary knowledge systems.

Mistral Medium 3 targets real-world business applications with its flexible deployment and training options. Enterprises in sectors like finance, energy, and healthcare are already using the model in beta to power customer service, automate complex analysis, and personalize business operations.

Looking Ahead

With the March launch of Mistral Small and today’s release of Mistral Medium 3, the company is building momentum toward the launch of a larger-scale model. Though details are limited, Mistral hints that its upcoming release will extend the same performance and efficiency gains to a new class of users and tasks.

“With even our medium-sized model being resoundingly better than flagship open source models such as Llama 4 Maverick, we’re excited to ‘open’ up what’s to come :)” the company noted in its announcement.

What This Means

The launch of Mistral Medium 3 marks a significant shift in how high-performing language models can be deployed and priced. For businesses, the ability to access frontier-class AI—especially for coding, reasoning, and multimodal understanding—without the high costs or infrastructure demands of larger models is a practical advantage. With support for on-premises and VPC deployment, Mistral is also catering directly to enterprise concerns around data privacy, customization, and control.

In a broader AI landscape increasingly divided between open models and tightly controlled proprietary systems, Mistral Medium 3 is carving out a middle path: offering competitive performance, flexibility, and affordability. For developers, it lowers the barrier to entry for building intelligent applications. For enterprises, it offers a way to embed adaptable, high-accuracy language models into sensitive or specialized environments—without the steep financial or technical trade-offs.

By consistently outperforming open models like Llama 4 Maverick and coming close to proprietary leaders like Claude Sonnet 3.7 on critical tasks, Mistral Medium 3 reinforces that scale is not the only path to quality. Efficiency, design, and enterprise readiness now matter as much as raw model size.

Even in a field dominated by giants, Mistral Medium 3 shows that smart engineering can deliver more with less—and that powerful AI doesn’t need to come at a premium.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.