Image Source: ChatGPT-4o

Mistral Launches Magistral, a New Reasoning Model in Open and Enterprise Versions

Mistral AI has released its first reasoning model, Magistral, marking a significant step forward in AI systems designed for transparent, domain-specific, and multilingual thinking. The model is available in two forms: an open-source version called Magistral Small and a more powerful enterprise variant, Magistral Medium.

While general-purpose AI models often provide fast, fluent answers, reasoning models are designed to work through problems step by step, much as a human analyst or researcher would. Mistral says Magistral was built to bring that capability to real-world applications that require clarity, precision, and domain expertise.

Two Versions, Built for Real-World Use

Magistral is being released as a dual offering:

Magistral Small: A 24-billion-parameter model released under the open Apache 2.0 license and available on Hugging Face for self-deployment.

Magistral Medium: A higher-performance enterprise version available in Mistral's Le Chat interface and via API, as well as on platforms such as Amazon SageMaker, with support for IBM WatsonX, Azure AI, and Google Cloud Marketplace coming soon.
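For readers weighing self-deployment of Magistral Small, a rough way to size hardware is to multiply the parameter count by the bytes each parameter occupies. The sketch below does that arithmetic for a few common numeric precisions. It is a weights-only estimate that ignores the KV cache, activations, and runtime overhead, and the precision table is a generic assumption, not a configuration Mistral recommends.

```python
# Bytes per parameter for common numeric formats (generic values, not Mistral-specific).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate memory in decimal gigabytes needed just to hold the weights.

    Excludes KV cache, activations, and framework overhead, so real usage is higher.
    """
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision}")
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params = 24e9  # Magistral Small's stated size: 24 billion parameters
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: ~{weight_memory_gb(params, precision):.0f} GB")
```

Halving the bytes per parameter halves the weight footprint, which is why quantized formats are the usual route to running a model of this size on a single machine.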

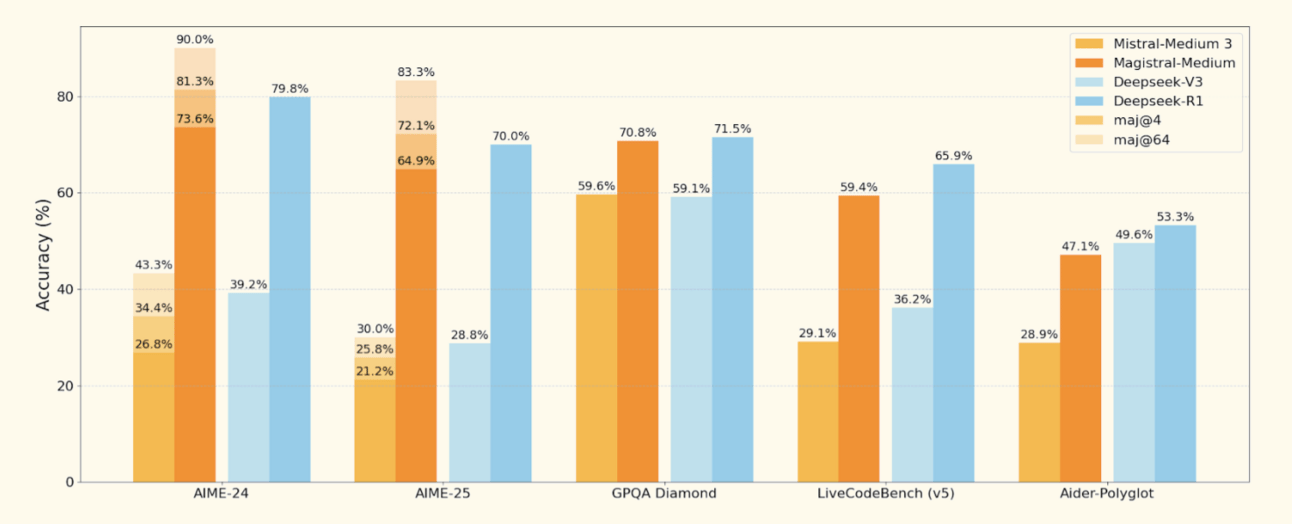

Early benchmarks show:

Magistral Medium scored 73.6% on AIME2024, and 90% with majority voting over 64 sampled answers per problem (maj@64).

Magistral Small scored 70.7% and 83.3%, respectively.
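Majority voting (often written maj@k) samples k independent solutions to each problem and keeps the most frequent final answer. The 90% and 83.3% figures above use k = 64. The Python sketch below illustrates the scoring idea on made-up toy answers with k = 5. It is a generic illustration of the technique, not Mistral's evaluation code; the helper names are hypothetical, and it assumes final answers have already been extracted and normalized so that exact string comparison is meaningful.

```python
from collections import Counter
from typing import Sequence


def majority_vote(answers: Sequence[str]) -> str:
    """Return the most frequent final answer among k sampled completions.

    Counter.most_common lists equal counts in first-seen order, so a tie
    goes to whichever tied answer appeared earliest in the samples.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    return Counter(answers).most_common(1)[0][0]


def maj_at_k_accuracy(samples: Sequence[Sequence[str]], references: Sequence[str]) -> float:
    """Fraction of problems whose majority-voted answer matches the reference."""
    if len(samples) != len(references) or not references:
        raise ValueError("need one sample list per reference answer")
    correct = sum(majority_vote(s) == ref for s, ref in zip(samples, references))
    return correct / len(references)


def single_sample_accuracy(samples: Sequence[Sequence[str]], references: Sequence[str]) -> float:
    """Baseline: score only the first sampled answer for each problem."""
    if len(samples) != len(references) or not references:
        raise ValueError("need one sample list per reference answer")
    correct = sum(s[0] == ref for s, ref in zip(samples, references))
    return correct / len(references)


if __name__ == "__main__":
    # Hypothetical toy data: 3 problems, 5 sampled final answers each.
    toy_samples = [
        ["204", "17", "204", "204", "33"],  # first sample and majority both correct
        ["7", "12", "12", "5", "12"],       # first sample wrong, majority correct
        ["3", "3", "4", "3", "9"],          # majority settles on a wrong answer
    ]
    toy_refs = ["204", "12", "4"]
    print(f"single sample: {single_sample_accuracy(toy_samples, toy_refs):.3f}")  # 0.333
    print(f"maj@5:         {maj_at_k_accuracy(toy_samples, toy_refs):.3f}")       # 0.667
```

The toy run shows why maj@k scores sit above single-attempt scores: voting can recover a correct answer that one unlucky sample missed, though it cannot help when most samples agree on a wrong answer.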

Built for Transparent, Multilingual Reasoning

Mistral positions Magistral as a model purpose-built for traceable reasoning. Unlike standard LLMs, which may obscure their logic behind fluent responses, Magistral is designed to provide visible, verifiable steps—helping users understand not just the answer, but how it was reached.

The model’s chain-of-thought capabilities support high-fidelity reasoning in multiple languages, including:

English

French

Spanish

German

Italian

Arabic

Russian

Simplified Chinese

This multilingual support is paired with flexibility in structured tasks, from logic trees and calculations to decision modeling.

Fast, Feedback-Driven Interaction

In addition to transparency, Magistral offers speed. In Le Chat, Mistral's conversational platform, the new Flash Answers feature delivers up to 10x faster token throughput than competing models, according to Mistral, making real-time reasoning and interaction practical for high-volume use cases.

Mistral says it plans to iterate quickly on Magistral, incorporating user feedback to refine the model’s reasoning capabilities and expand its domain coverage.

Versatile Applications Across Industries

Magistral is positioned for a broad range of enterprise and technical uses where step-by-step accuracy and explainability are essential:

Legal, finance, and healthcare: Designed for compliance-sensitive environments, with traceable logic and audit-ready outputs.

Software and data engineering: Supports sequenced development tasks, API orchestration, backend planning, and complex architecture design.

Business operations: Suited for modeling, forecasting, and operational decision-making under constraints.

Creative communication: Early tests show strong results in storytelling, structured writing, and even playful or unconventional content generation.

An Open Model Designed for Iteration

Magistral Small’s release under an open license signals Mistral’s continued commitment to open science. The company encourages developers and researchers to examine and build on its reasoning process—following the example of earlier community-driven projects based on its models, such as ether0 and DeepHermes 3.

The release is accompanied by a technical paper outlining:

The model’s evaluation results

Its training infrastructure

Reinforcement learning methods

Observations about reasoning model development

What This Means

With Magistral, Mistral is carving out a distinctive role in the AI ecosystem—focused not just on performance, but on how AI reaches its conclusions. As AI tools move deeper into sensitive domains like law, health, and finance, that kind of transparency is becoming as important as raw capability.

Magistral’s dual release format also reflects a broader shift: pairing open access with enterprise-ready performance. By doing so, Mistral aims to serve both the open-source community and high-stakes business environments.

In an increasingly crowded model landscape, reasoning could be the next frontier—and Mistral is betting that thinking out loud is exactly what users will want from their AI.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.