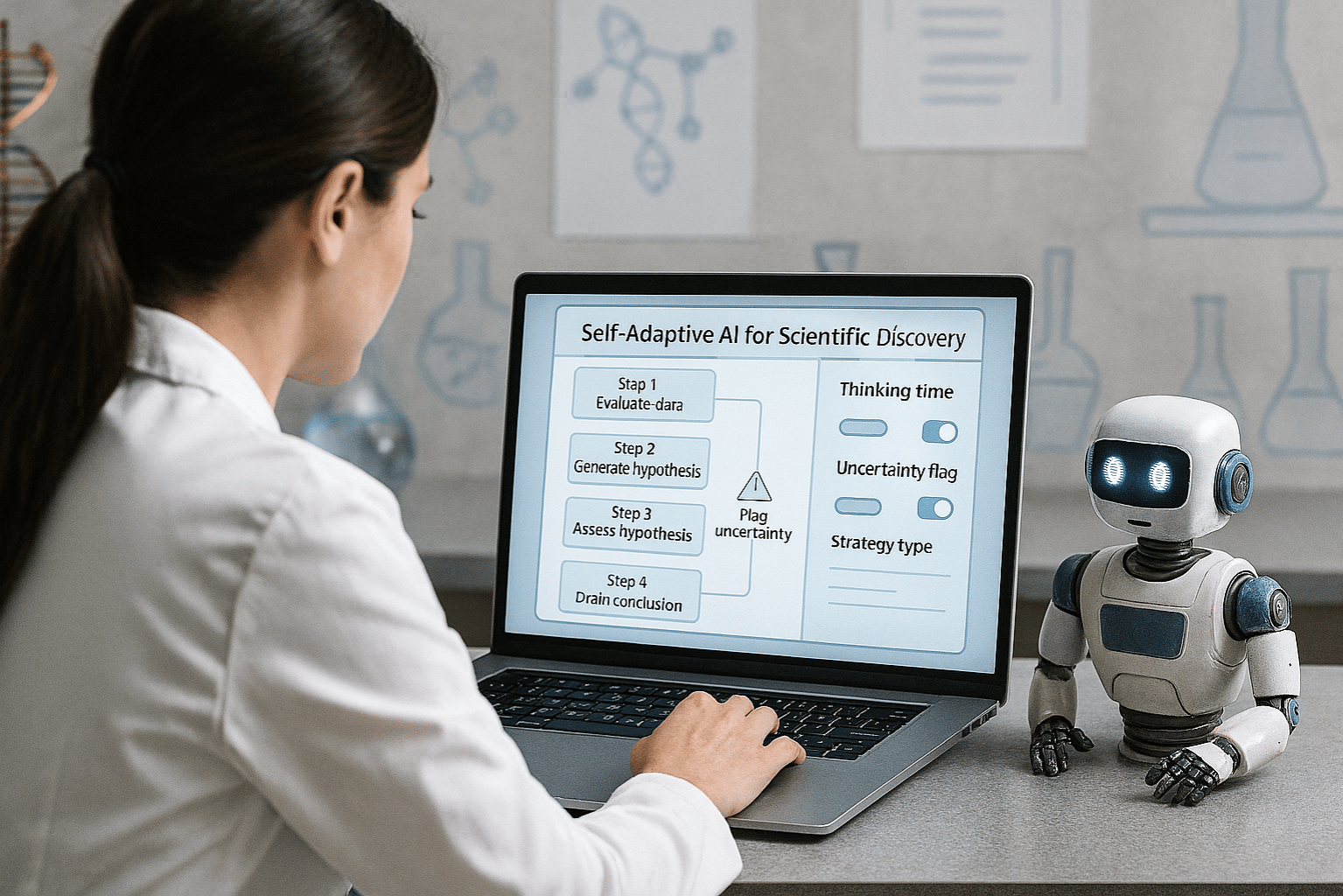

The CLIO interface allows scientists to guide AI reasoning in real time by adjusting control settings and monitoring uncertainty. Shown here: a researcher collaborates with CLIO to evaluate scientific hypotheses in a lab setting. Image Source: ChatGPT-4o

Microsoft Unveils CLIO: Self-Adaptive Reasoning for Scientific Discovery

Key Takeaways:

Microsoft has developed CLIO, a self-adaptive cognitive loop system that enables AI models to reason in scientific domains without post-training.

On text-based biology and medicine questions from Humanity’s Last Exam (HLE), CLIO raises GPT-4.1’s accuracy from 8.55% to 22.37%, surpassing OpenAI’s o3 (high).

The system generates its own data through runtime reflection loops, eliminating the need for reinforcement learning or curated datasets.

Scientists can critique and revise CLIO’s reasoning paths, including adjusting beliefs and re-running logic from any point in the process.

CLIO offers fine-grained controls, such as setting time-to-think and strategy selection, giving users real-time influence over model behavior.

Microsoft Introduces CLIO for Controllable Scientific Reasoning

Microsoft has announced CLIO (Cognitive Loop via In-situ Optimization), a novel self-adaptive reasoning system designed to enhance scientific discovery by giving researchers real-time control over how language models think, reflect, and revise.

As AI agents grow more autonomous, the need for effective human oversight and accountability becomes critical—especially in scientific domains where precision and reproducibility are essential. Today’s reasoning strategies are typically defined during a model’s post-training phase by the provider, leaving end users with little to no control over how reasoning unfolds.

In response, Microsoft is advancing a new vision: a continually steerable virtual scientist. Instead of relying on static, pre-trained behaviors, Microsoft’s new system enables non-reasoning models to develop dynamic, customizable thought patterns guided by the scientist themselves.

Unlike traditional reasoning models that rely on reinforcement learning from human feedback (RLHF) or techniques like reinforcement learning with verifiable rewards (RLVR) and intrinsic rewards (RLIR), CLIO allows non-reasoning models to generate their own reflective thought processes at runtime. This opens the door to more explainable and customizable AI systems, particularly in complex fields like biology, medicine, and materials science, where predefined reasoning patterns often fall short.

Microsoft’s system addresses a central limitation in current long-running LLM agents: the lack of end-user control. By shifting reasoning development from model providers to runtime environments, CLIO introduces a steerable, virtual scientist that adapts to evolving research needs without requiring additional training data.

How CLIO Works: Internal Reflection, Control, and Adaptability

While most reasoning models rely on large datasets and post-training to learn reasoning behaviors, CLIO’s cognitive loop creates its own internal data through runtime reflection. These reflection loops are used to explore ideas, manage memory, adjust behavior, and even raise correction flags when uncertainty is detected.

Critically, CLIO’s reasoning processes are not static—they evolve dynamically based on context. The system can learn from its prior inferences, allowing it to correct or redirect its logic as new information becomes available.
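To make the idea concrete, here is a minimal Python sketch of a runtime reflection loop. It is an illustration under stated assumptions, not Microsoft's implementation: the `Model` interface, `reflection_loop`, `LoopResult`, the numeric confidence score, and the `toy_model` stand-in are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical interface: a model takes the question plus notes from earlier
# rounds and returns (answer, confidence in [0, 1]).
Model = Callable[[str, List[str]], Tuple[str, float]]


@dataclass
class LoopResult:
    answer: str
    confidence: float
    notes: List[str] = field(default_factory=list)
    flagged_uncertain: bool = False


def reflection_loop(model: Model, question: str,
                    threshold: float = 0.7, max_rounds: int = 3) -> LoopResult:
    """Re-ask the model, feeding back its own prior inferences, until it is
    confident enough or the round budget runs out."""
    notes: List[str] = []
    answer, confidence = "", 0.0
    for round_no in range(1, max_rounds + 1):
        answer, confidence = model(question, notes)
        # Keep the inference so the next round can build on or correct it.
        notes.append(f"round {round_no}: {answer} (confidence {confidence:.2f})")
        if confidence >= threshold:
            break
    # Raise a flag instead of silently returning a low-confidence answer.
    return LoopResult(answer, confidence, notes,
                      flagged_uncertain=confidence < threshold)


def toy_model(question: str, notes: List[str]) -> Tuple[str, float]:
    # Stand-in for an LLM call: confidence grows as notes accumulate.
    return f"draft {len(notes) + 1}", min(1.0, 0.4 + 0.2 * len(notes))


result = reflection_loop(toy_model, "Which pathway is affected?")
rushed = reflection_loop(toy_model, "Which pathway is affected?", max_rounds=1)
```

In this toy run, `result` stops once the confidence clears the threshold, while `rushed` gets only one round and comes back with its uncertainty flag set.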

For example, in its evaluation on Humanity’s Last Exam (HLE), a challenging benchmark of expert-level questions across many subjects, CLIO was specifically instructed to follow the scientific method as a guiding structure. This ability to align AI with established human workflows is central to CLIO’s design.

The system also allows researchers to critique reasoning paths, edit internal beliefs mid-process, and re-run reasoning from any chosen point, giving scientists direct, real-time influence over the model’s problem-solving strategy.
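One way to picture "edit a belief and re-run from any point" is a trace of checkpoints that can be replayed. The sketch below is a hypothetical illustration only: `run_from`, the `Beliefs` dictionary, and the three toy steps are invented for this example and say nothing about how CLIO stores its reasoning state.

```python
from typing import Callable, Dict, List

Beliefs = Dict[str, str]
Step = Callable[[Beliefs], Beliefs]


def run_from(steps: List[Step], checkpoints: List[Beliefs], start: int) -> List[Beliefs]:
    """Replay steps[start:] from checkpoints[start].

    checkpoints[i] is the belief state just before step i; earlier
    checkpoints are copied into the new trace unchanged.
    """
    trace = [dict(b) for b in checkpoints[: start + 1]]
    for step in steps[start:]:
        trace.append(step(dict(trace[-1])))
    return trace


def observe(beliefs: Beliefs) -> Beliefs:
    beliefs["observation"] = "cells stop dividing"
    return beliefs


def hypothesize(beliefs: Beliefs) -> Beliefs:
    beliefs["hypothesis"] = "drug blocks " + beliefs.get("target", "kinase A")
    return beliefs


def conclude(beliefs: Beliefs) -> Beliefs:
    beliefs["conclusion"] = "test: " + beliefs["hypothesis"]
    return beliefs


steps = [observe, hypothesize, conclude]
first_run = run_from(steps, [{}], start=0)

# The scientist corrects a belief at the checkpoint before step 1,
# then replays only the steps that depend on it.
edited = [dict(b) for b in first_run[:2]]
edited[1]["target"] = "kinase B"
second_run = run_from(steps, edited, start=1)
```

The first run is left untouched, so the original and revised reasoning paths can be compared side by side.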

Transparent Uncertainty Management to Build Scientific Trust

CLIO introduces a native framework for uncertainty awareness, enabling it to both recognize and communicate when it lacks confidence in an answer. This is essential in scientific workflows where overstated conclusions can undermine results.

Many current models present answers with high confidence even when they are wrong. CLIO counters this with threshold-based flags that surface uncertainty at appropriate moments, which supports reproducibility and reduces the risk of acting on overstated results.

Importantly, CLIO includes prompt-free control knobs, allowing users to set how sensitive the system should be to ambiguity. Scientists can define how much time the system should “think,” which strategies to use, and at what point uncertainty should be surfaced or deferred.
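The controls described above can be thought of as a small settings object rather than prompt text. The following Python sketch is an assumption-laden illustration: the `Controls` class, its field names, the default values, and the helper functions are hypothetical and are not taken from CLIO's actual interface.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Controls:
    # All names and defaults here are hypothetical, for illustration only.
    think_seconds: float = 30.0                       # time-to-think budget
    strategies: Tuple[str, ...] = ("scientific_method",)
    uncertainty_threshold: float = 0.3                # flag above this level


def should_flag(uncertainty: float, controls: Controls) -> bool:
    """Surface an uncertainty flag once the estimate exceeds the threshold."""
    return uncertainty > controls.uncertainty_threshold


def within_budget(elapsed_seconds: float, controls: Controls) -> bool:
    """True while the system still has thinking time left."""
    return elapsed_seconds < controls.think_seconds


cautious = Controls(uncertainty_threshold=0.1)
permissive = Controls(think_seconds=120.0, uncertainty_threshold=0.5)
```

With the same uncertainty estimate of 0.2, the `cautious` settings would raise a flag while the `permissive` settings would let the answer through, which is the kind of sensitivity adjustment the article describes.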

These transparency features build a foundation of scientific defensibility, allowing experts to audit reasoning and revise approaches with rigor.

Performance Results: Accuracy Gains Across Models and Subdomains

In benchmark tests using text-based biology and medicine questions from HLE, CLIO delivered substantial gains:

A 61.98% relative increase (8.56 percentage points) in accuracy over OpenAI’s o3 (high)

An improvement from 8.55% to 22.37% accuracy on GPT-4.1

Similar boosts in GPT-4o, which originally scored below 2% on HLE

CLIO’s recursive reasoning structure allows it to evaluate broader solution paths and build deeper logic chains. In GPT-4.1, recursion alone lifted accuracy by 5.92 percentage points.

Microsoft extended this with GraphRAG, a module that ensembles different reasoning paths and selects the best-performing one. This adds another 7.90 percentage points over non-ensembled strategies. Together with the 8.55% baseline, the two gains account for the 22.37% result (8.55 + 5.92 + 7.90 = 22.37).
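The reported figures can be cross-checked with a few lines of arithmetic. The Python snippet below uses only numbers quoted in this article; the o3 (high) score it derives is an inference from those numbers, not a value stated here, and the variable names are ours.

```python
# Figures quoted in the article (accuracy in %, gains in percentage points).
base_gpt41 = 8.55        # GPT-4.1 without CLIO
with_clio = 22.37        # GPT-4.1 with CLIO
recursion_gain = 5.92    # from recursion alone
ensemble_gain = 7.90     # from ensembling over non-ensembled strategies
net_over_o3 = 8.56       # lead over o3 (high), in percentage points

# The two gains stack on the baseline to give the headline result.
total = round(base_gpt41 + recursion_gain + ensemble_gain, 2)

# Inferred, not stated in the article: the o3 (high) score implied by the lead.
implied_o3 = round(with_clio - net_over_o3, 2)

# Relative improvement over o3 (high), which should match the quoted 61.98%.
relative_over_o3 = round(100 * net_over_o3 / implied_o3, 2)

# Net gain for GPT-4.1 itself, in percentage points.
gpt41_gain = round(with_clio - base_gpt41, 2)

print(total, implied_o3, relative_over_o3, gpt41_gain)
```

The numbers are internally consistent: the gains sum to 22.37, and a lead of 8.56 points over an implied o3 (high) score of 13.81% works out to the quoted 61.98% relative increase.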

In domain-specific testing on immunology-related questions, CLIO improved GPT-4o’s base performance by 13.60%, bringing it on par with top reasoning models in that category. This type of granular evaluation demonstrates CLIO’s flexibility across subdomains—not just general benchmarks.

Like Microsoft’s AI Diagnostic Orchestrator (MAI-DxO), CLIO is model-agnostic, offering performance improvements across multiple LLMs without being limited to a single architecture.
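Model-agnostic here means the reasoning layer sits outside the model and only needs a way to call it. As a rough sketch of that design, assuming any model can be treated as a text-in, text-out function, the hypothetical `with_checking` wrapper below adds a retry-on-failed-check layer to two different toy models. None of these names come from CLIO or MAI-DxO.

```python
from typing import Callable

# Hypothetical interface: any text-in, text-out model.
Model = Callable[[str], str]


def with_checking(model: Model, checker: Callable[[str], bool],
                  retries: int = 2) -> Model:
    """Wrap any model with a check-and-retry layer, without touching the model."""
    def wrapped(prompt: str) -> str:
        answer = model(prompt)
        for _ in range(retries):
            if checker(answer):
                break
            # Feed the rejected answer back so the model can revise it.
            answer = model(prompt + "\nPrevious answer failed a check: " + answer)
        # If retries run out, the last answer is returned unchecked.
        return answer
    return wrapped


def steady_model(prompt: str) -> str:
    return "42"


def hesitant_model(prompt: str) -> str:
    return "42" if "failed a check" in prompt else "unsure"


# The same layer is applied to both models; neither model is modified.
checked_steady = with_checking(steady_model, str.isdigit)
checked_hesitant = with_checking(hesitant_model, str.isdigit)
```

Because the wrapper depends only on the call signature, swapping one underlying model for another requires no change to the control layer.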

Scientific and Cross-Domain Implications

While CLIO is designed for scientific discovery, its transparent and self-adaptive architecture could serve broader domains including finance, law, and engineering—fields where reasoning transparency and human control are essential.

Microsoft notes that upcoming work will show how CLIO can enhance tool use in high-value scientific domains, including drug discovery, where precision and fluency in the language of science are essential. While the current experiments center on scientific discovery, CLIO is designed to be domain-agnostic, with the potential to support complex reasoning in other fields as well.

Microsoft sees CLIO as a persistent control layer in hybrid AI stacks—systems that may combine reasoning and completion models, external memory, and advanced tool usage. As these components evolve, the continuous checks and balances that CLIO enables will remain essential, ensuring that AI systems stay coherent, accountable, and adaptable over time.

CLIO is also a core part of the Microsoft Discovery platform, a broader initiative focused on tool-optimized, trustworthy scientific research.

For a deeper look at the research behind CLIO, read the pre-print paper published alongside this announcement.

Q&A: Microsoft CLIO and Scientific Reasoning

Q: What is CLIO?

A: CLIO (Cognitive Loop via In-situ Optimization) is Microsoft’s new self-adaptive AI system that enables controllable, explainable reasoning during runtime—without post-training or reinforcement learning.

Q: How does CLIO differ from RLHF, RLVR, or RLIR?

A: Unlike models trained using reinforcement learning from human feedback (RLHF), verifiable rewards (RLVR), or intrinsic rewards (RLIR), CLIO adapts at runtime by generating its own reflection loops, with no additional data or training required.

Q: How does CLIO help manage uncertainty?

A: CLIO flags its own uncertainty based on user-defined thresholds, allowing researchers to audit, revise, or re-run reasoning in a transparent, scientifically defensible way.

Q: What performance improvements does CLIO offer?

A: CLIO boosts GPT-4.1 accuracy from 8.55% to 22.37% on HLE biology/medicine tasks and delivers similar gains for GPT-4o, including a 13.60% boost in immunology.

Q: Can CLIO be used with other models?

A: Yes. CLIO is model-agnostic and functions as a reasoning layer that enhances performance across different LLM architectures.

What This Means: A New Standard for Scientific AI

CLIO represents more than just an accuracy gain—it redefines how scientists interact with AI. By removing the dependency on post-training and placing reasoning control directly in the user’s hands, Microsoft is charting a path toward collaborative, transparent AI systems that serve the goals of science rather than obscure them.

The ability to self-reflect, flag uncertainty, and follow the scientific method makes CLIO uniquely aligned with how real research is done. In fields where incorrect answers carry real consequences, this combination of adaptability and accountability sets a new bar for what trustworthy AI should look like.

More broadly, CLIO signals a shift toward hybrid AI systems—where completion, reasoning, and memory modules are orchestrated through a flexible control layer. As these systems grow more complex, the continuous checks and balances that CLIO enables will remain critical, helping ensure AI stays steerable, verifiable, and rigorously useful.

CLIO’s breakthrough isn’t limited to scientific research. Its ability to adapt its own reasoning in real time, explain how it thinks, and ask for help when uncertain could shape how AI is used in everything from medical diagnostics to financial systems to robots that work alongside people. In any setting where decisions matter—and where AI needs to be not just smart, but safe, flexible, and accountable—this kind of controllable reasoning opens up new possibilities. It’s a step toward AI that doesn't just answer, but collaborates, self-corrects, and earns trust.

This isn’t just an improvement in performance—it’s a foundational upgrade in how AI can participate in discovery.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.