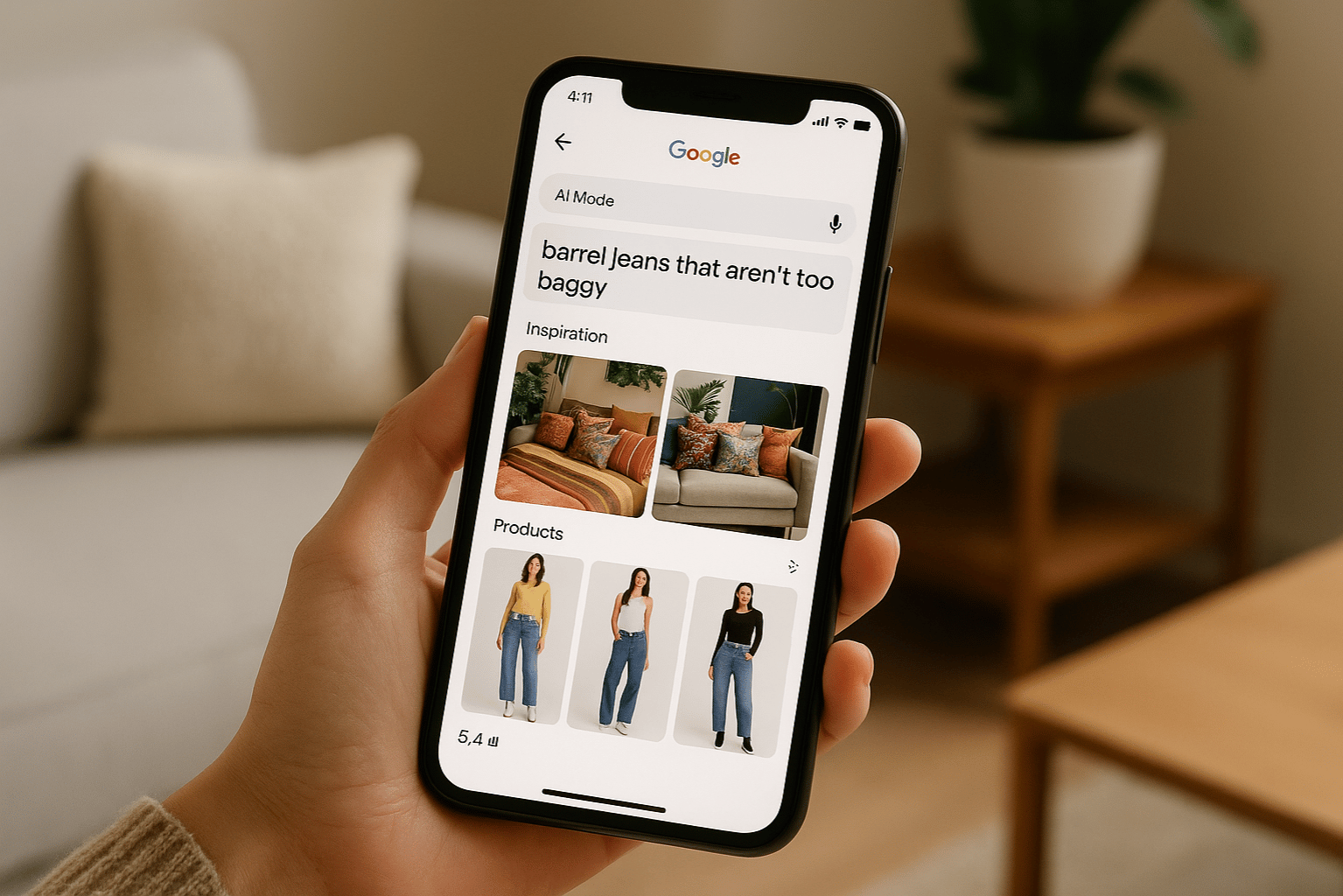

Google expands AI Mode in Search, showing visual shopping results and inspiration side by side on a smartphone. Image Source: ChatGPT-5

Google Expands AI Mode in Search With Visual Exploration and Shopping

Key Takeaways: Google Adds Visual Exploration and Shopping to AI Mode

Google has introduced visual exploration in AI Mode, allowing users to refine searches with images and natural language.

The update supports multimodal queries, including photos, screenshots, and conversational follow-ups.

Shoppers can describe items naturally — like “barrel jeans that aren’t too baggy” — and get personalized, shoppable results.

The feature uses Google’s Shopping Graph, which holds more than 50 billion product listings and is refreshed at a rate of 2 billion updates per hour.

The upgrade leverages Gemini 2.5 multimodal AI and a new visual search fan-out technique for deeper context understanding.

The new experience is rolling out in English in the U.S. this week.

Google Search AI Mode: Visual Exploration Made Natural

Google is reshaping how people use Search with the expansion of AI Mode, now designed to help users explore visually. People can start with an image, a photo, or even a vague idea described conversationally, and then refine the results with follow-up requests in everyday language.

For example, someone looking for maximalist bedroom design inspiration could start with a broad query, then narrow results by requesting “dark tones and bold prints.” Each image includes direct links, enabling users to click through for more details.

Because the experience is multimodal, users can upload images, snap photos, or combine text and visuals, receiving rich, tailored results in return.

Shopping in AI Mode: Conversational Product Discovery

The update also brings a new way to shop inside AI Mode. Instead of scrolling through filters, users can describe what they want in everyday language, and Search will generate shoppable results.

For instance, a user could type “barrel jeans that aren’t too baggy,” and AI Mode will generate product results aligned with that description. Follow-ups like “show me ankle length” allow further refinement without restarting the search.

When users select an item, they can go directly to the retailer’s website to make a purchase. Results are powered by the Google Shopping Graph, which now contains over 50 billion product listings, refreshed at a rate of 2 billion updates per hour.
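To put the two Shopping Graph figures in proportion, the short calculation below divides one by the other. It is only a back-of-the-envelope sketch: it assumes each update touches a single listing and that updates are spread evenly, neither of which the announcement states. The variable names are illustrative.

```python
# Figures quoted in the article.
LISTINGS = 50_000_000_000          # product listings in the Shopping Graph
UPDATES_PER_HOUR = 2_000_000_000   # updates applied per hour

# Assumption (not from the source): one update corresponds to one listing.
share_per_hour = UPDATES_PER_HOUR / LISTINGS
hours_for_full_pass = LISTINGS / UPDATES_PER_HOUR

print(f"{share_per_hour:.0%} of the catalog per hour")
print(f"{hours_for_full_pass:.0f} hours for updates to equal the catalog size")
```

Under those assumptions, the hourly updates equal about 4% of the catalog, so the update count would match the full catalog size roughly once every 25 hours.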

The Shopping Graph pulls from both major retailers and local shops, surfacing details such as reviews, deals, availability, and color variations.

Gemini 2.5: Powering Visual Search and Context Awareness

The update to AI Mode is driven by Google’s visual understanding capabilities (including Lens and Image Search) and enhanced with Gemini 2.5’s multimodal intelligence.

Building on the query fan-out method, a new technique called “visual search fan-out” allows the system to analyze not just the primary subject of an image, but also subtle details and secondary objects. The system then runs multiple queries in the background, giving Search a deeper grasp of both visual context and natural language questions.
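The general idea of fanning one request out into several background queries can be illustrated with a toy sketch. This is not Google's implementation, which has not been published in detail. The `DetectedObject` type, the function names, and the word-overlap retrieval are all hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectedObject:
    """Hypothetical record for something recognized in an image."""
    label: str      # e.g. "velvet armchair"
    primary: bool   # True for the main subject of the image


def fan_out_queries(objects, question):
    """Build one background query per detected object, primary subject first."""
    ordered = sorted(objects, key=lambda o: not o.primary)
    return [f"{obj.label} {question}".strip() for obj in ordered]


def search(index, query):
    """Toy retrieval: return documents sharing at least one word with the query."""
    words = set(query.lower().split())
    return [doc for doc in index if words & set(doc.lower().split())]


def fan_out_search(index, objects, question):
    """Run every sub-query and merge the results, dropping duplicates."""
    merged = []
    for query in fan_out_queries(objects, question):
        for doc in search(index, query):
            if doc not in merged:
                merged.append(doc)
    return merged


objects = [
    DetectedObject("floral wallpaper", primary=False),
    DetectedObject("velvet armchair", primary=True),
]
index = ["Dark velvet armchair", "Floral wallpaper roll", "Garden hose"]
results = fan_out_search(index, objects, "ideas")
print(results)
```

In this sketch the secondary object (the wallpaper) produces its own query, so a matching result surfaces even though the main subject of the image is the armchair. A production system would presumably use learned image understanding and ranking rather than word overlap.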

On mobile, users can go even further by searching within a specific image and asking conversational follow-ups about the details they see.

U.S. Rollout: English-Language Availability This Week

The new AI Mode experience is rolling out this week in English in the United States, with further expansions expected.

Google emphasized that the feature is designed to help users find inspiration for creative projects, explore new styles, and shop more intuitively without relying on rigid filters or keyword precision.

Q&A: Google AI Mode Visual Exploration in Search

Q1: What did Google announce for AI Mode in Search?

A: Google introduced visual exploration, enabling users to refine searches with images, photos, and conversational queries.

Q2: How does shopping work in AI Mode?

A: Users can describe products naturally, like talking to a friend, and receive shoppable results powered by the Shopping Graph.

Q3: What powers the new AI Mode experience?

A: It combines Google Lens, Image Search, and Gemini 2.5 multimodal AI, supported by the new visual search fan-out method.

Q4: How large is Google’s Shopping Graph?

A: The Shopping Graph includes more than 50 billion listings, refreshed with 2 billion updates per hour.

Q5: Where and when is this feature available?

A: It is rolling out in English in the U.S. this week.

What This Means: The Future of Visual Search and AI Shopping

The expansion of AI Mode in Search marks a significant step in Google’s efforts to move beyond traditional text-based queries. By blending multimodal input, conversational refinement, and real-time product discovery, the company is positioning Search as both a creative inspiration tool and a shopping companion.

For users, this means more intuitive ways to find what they need — whether designing a room, searching for style inspiration, or making a purchase. For retailers, it offers greater visibility in an increasingly AI-driven search ecosystem.

As AI Mode evolves, the combination of visual understanding and conversational AI may redefine how people discover, decide, and shop online.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.