Google Demonstrates First Verifiable Quantum Advantage

Key Takeaways: Verifiable Quantum Advantage

Google’s Willow quantum processor completed a specialized task an estimated ~13,000× faster than the best classical algorithm on a leading supercomputer.

The result is the first demonstration of verifiable quantum advantage.

Results that can be reproduced on comparable quantum hardware carry more scientific weight.

One trillion measurements indicate hardware maturity at scale.

Progress aligns with Google’s roadmap toward fault-tolerant quantum systems.

Google Advances Quantum Computing With Verified Performance

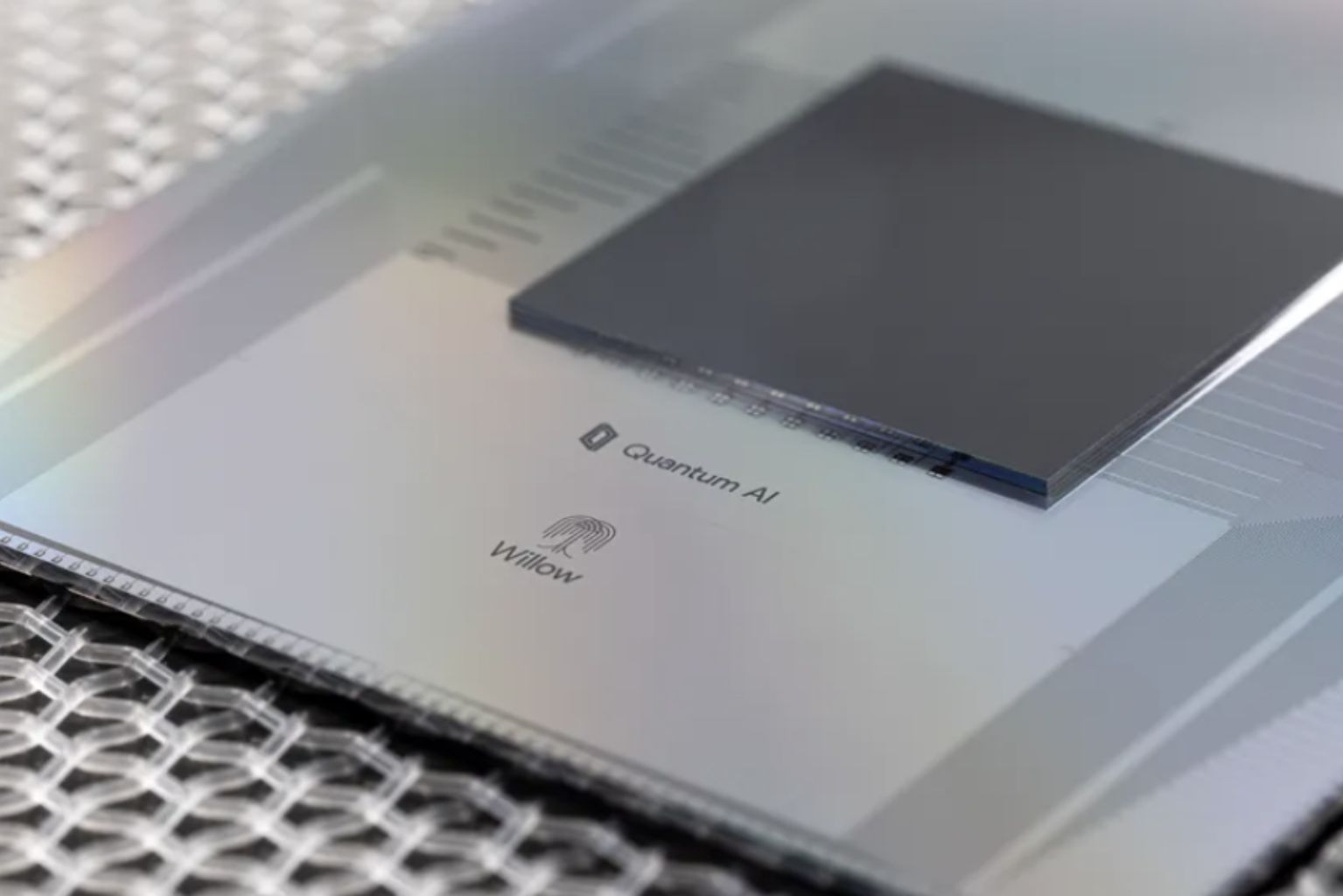

Google researchers have achieved the first experimental demonstration of verifiable quantum advantage using Willow, the company’s 105-qubit superconducting processor. The system executed an algorithm called Quantum Echoes, which reverses the flow of quantum information to reveal hidden patterns within quantum systems. This reversal step is extremely difficult because any tiny error along the way can derail the computation.
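To make the reversal idea concrete, here is a toy single-qubit sketch in Python. It is not Google’s Quantum Echoes circuit, which runs on many entangled qubits; the rotation angle, the Z-gate "perturbation", and the function names are illustrative assumptions. Evolving forward and then exactly backward returns the qubit to its starting state, while a perturbation inserted in the middle shows up as a lower return probability.

```python
import math


def ry(theta):
    """Real 2x2 matrix for a rotation of one qubit about the Y axis by theta."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]


def apply(matrix, state):
    """Multiply a 2x2 matrix by a two-amplitude state vector."""
    return [matrix[0][0] * state[0] + matrix[0][1] * state[1],
            matrix[1][0] * state[0] + matrix[1][1] * state[1]]


def echo_return_probability(theta, perturb=False):
    """Evolve forward, optionally perturb, evolve backward; return P(|0>)."""
    state = [1.0, 0.0]                   # start in |0>
    state = apply(ry(theta), state)      # forward evolution
    if perturb:
        state = [state[0], -state[1]]    # perturbation: a Z (phase-flip) gate
    state = apply(ry(-theta), state)     # exact time reversal of the forward step
    return state[0] ** 2                 # amplitudes are real here, so square them


print(echo_return_probability(math.pi / 4))                # ideal echo: back to |0>
print(echo_return_probability(math.pi / 4, perturb=True))  # perturbed: about 0.5
```

In this toy model the perturbed return probability works out to cos²θ, so the final measurement is sensitive to what happened in the middle of the evolution.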

Classical supercomputers struggle with this type of simulation because the resources required grow exponentially as the number of qubits increases. Google estimates that Willow ran the workload roughly 13,000× faster than the best known classical algorithm on one of the world’s fastest supercomputers.
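The exponential growth can be seen with a little arithmetic. This Python sketch assumes the most naive classical approach, storing the full quantum state vector in memory; the best classical algorithms are considerably cleverer than this, so treat it only as an illustration of why brute force fails.

```python
def state_vector_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory needed to store every amplitude of an n-qubit state.

    Each of the 2**n amplitudes is a complex number; 16 bytes assumes two
    64-bit floats (real and imaginary parts) per amplitude.
    """
    return (2 ** num_qubits) * bytes_per_amplitude


for n in (30, 50, 105):
    print(f"{n:>3} qubits: {state_vector_bytes(n):.2e} bytes")
```

At 30 qubits this is already 16 GiB, every additional qubit doubles it, and at Willow’s 105 qubits the figure dwarfs any storage ever built.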

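The results were published in Nature. The result is called verifiable because Quantum Echoes measures a repeatable quantity rather than a random sample of outputs: running the same experiment on Willow, or on another quantum computer of similar caliber, should produce the same answer, which lets independent groups confirm it.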

Understanding Qubits and Why They’re Difficult to Control

A qubit (quantum bit) can exist in a combination of 0 and 1 at the same time, a property known as superposition. Qubits can also become entangled, meaning their states are linked so that they can only be described together, not one at a time. Combined with interference, these properties let quantum computers handle certain problems that overwhelm classical machines.

However, qubits are extremely fragile:

heat

vibration

electromagnetic noise

…can knock them out of their delicate quantum state, a failure mode called decoherence.
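A common first model for this fragility is exponential energy relaxation: the chance that a qubit still holds its excited state decays as exp(-t/T1). The sketch below assumes that simple model and a hypothetical T1 of 100 microseconds; real devices also lose phase information (a separate T2 timescale), which it ignores.

```python
import math


def survival_probability(t_us, t1_us=100.0):
    """Chance an excited qubit has not relaxed after t_us microseconds,
    assuming simple exponential decay with a hypothetical T1 of t1_us."""
    if t1_us <= 0:
        raise ValueError("t1_us must be positive")
    return math.exp(-t_us / t1_us)


for t in (1, 10, 100, 300):
    print(f"after {t:>3} us: {survival_probability(t):.3f}")
```

The longer a computation runs, the more likely a qubit has drifted, so long algorithms need a way to catch and undo those errors.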

This fragility is why error correction matters.

Because qubits drift easily, many physical qubits must work together as one logical qubit that detects and corrects errors as the computation runs. Without error correction, large-scale, broadly useful quantum computing is widely considered out of reach.
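The simplest classical analogy is a three-bit repetition code with majority voting. The Python sketch below assumes each copy flips independently with probability p; real quantum codes such as the surface code must also catch phase errors and cannot simply copy or directly read out qubits, so this only illustrates the redundancy idea.

```python
import random


def logical_error_rate(p):
    """Exact chance a 3-copy majority vote decodes the wrong value when each
    copy flips independently with probability p (two or three flips)."""
    return 3 * p**2 * (1 - p) + p**3


def simulate(p, trials=100_000, seed=0):
    """Monte Carlo estimate of the same quantity."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        flips = sum(rng.random() < p for _ in range(3))
        if flips >= 2:  # the majority is now wrong
            failures += 1
    return failures / trials


print(logical_error_rate(0.01), simulate(0.01))
```

With p = 0.01 the encoded error rate drops to about 0.0003, but if p exceeds 0.5 the vote makes things worse. Redundancy only helps when the underlying components are good enough, the same kind of threshold logic that governs quantum error correction.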

Gate Fidelity Shows How Accurately Quantum Instructions Run

Willow’s milestone is supported by improvements in fidelity, which measures how closely quantum operations match their intended result:

99.97% accuracy for single-qubit operations

99.88% accuracy for two-qubit entangling operations

99.5% accuracy for readout (measuring results)

These numbers matter because tiny errors compound quickly when many qubits and operations work together. Higher fidelity slows that buildup, which is essential for scaling toward practical applications.
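To see how quickly small errors compound, a rough model multiplies the fidelity of every operation in a circuit together. This Python sketch assumes errors are independent, which real devices only approximate, and uses the fidelities listed above as defaults; the gate counts are hypothetical.

```python
def estimated_success(n_single, n_two_qubit, n_readout,
                      f_single=0.9997, f_two_qubit=0.9988, f_readout=0.995):
    """Rough probability that a circuit runs with no error at all, assuming
    every operation fails independently with probability (1 - fidelity)."""
    return (f_single ** n_single) * (f_two_qubit ** n_two_qubit) * (f_readout ** n_readout)


# Hypothetical circuit sizes, from small to large.
for n_2q in (10, 100, 1_000, 10_000):
    print(n_2q, round(estimated_success(0, n_2q, 0), 4))
```

Even at 99.88%, a circuit with 1,000 two-qubit gates runs error-free only about 30% of the time under this model, which is why small fidelity gains matter so much.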

Willow’s quantum gate operations run at nanosecond speeds, enabling high-volume measurement cycles and rapid repetition of complex algorithms.

Why Interference Across the Full Chip Matters

In addition to higher fidelity, Willow was able to maintain interference patterns across its entire chip. Interference describes how quantum amplitudes (the quantities that set measurement probabilities) reinforce or cancel one another, similar to overlapping ripples in water. Keeping these patterns stable across many qubits at once is extremely difficult, and doing so shows that quantum behavior can be controlled at system scale, not just on a few isolated qubits. This level of control is essential for building larger, more practical quantum computers.
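A single qubit is enough to show interference. Applying a Hadamard gate to |0> creates an equal superposition; applying it a second time makes the two contributions to |1> cancel while the contributions to |0> reinforce. This Python sketch tracks the amplitudes by hand; it illustrates the principle, not how interference is managed across Willow’s chip.

```python
import math

# Hadamard gate: turns |0> or |1> into an equal superposition,
# with a minus sign on the |1> -> |1> entry.
H = [[1 / math.sqrt(2), 1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]


def apply(matrix, state):
    """Multiply a 2x2 matrix by a two-amplitude state vector."""
    return [matrix[0][0] * state[0] + matrix[0][1] * state[1],
            matrix[1][0] * state[0] + matrix[1][1] * state[1]]


def probabilities(state):
    """Measurement probabilities are squared amplitudes (real amplitudes here)."""
    return [a * a for a in state]


once = apply(H, [1.0, 0.0])   # equal superposition
twice = apply(H, once)        # contributions to |1> cancel, to |0> add up

print(probabilities(once))    # roughly [0.5, 0.5]
print(probabilities(twice))   # roughly [1.0, 0.0]
```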

Why Google’s Quantum Roadmap Matters

Building a useful quantum computer requires solving problems in sequence. Google’s six-milestone roadmap exists because:

you cannot build useful quantum computers without long-lived logical qubits,

you cannot build long-lived logical qubits without error correction,

and error correction only works once physical qubits are accurate and stable enough.

Today’s work moves toward Milestone 3: making one logical qubit that can preserve information longer and more reliably.

Without this roadmap, progress would be difficult to measure and harder to coordinate across industry and academia. It also helps show policymakers how each advance builds on the layers beneath it.

Why These Tasks Are Considered “Narrow”

Today’s demonstrations stress-test the hardware rather than solve practical problems. These benchmarks:

measure qubit stability,

verify system control,

and push limits on measurement volume.

These are purpose-built benchmarks, not yet real-world applications such as drug design or logistics optimization.

How Quantum Computing Could Support Future AI

Researchers and companies like Nvidia see potential synergies between quantum hardware and advanced AI systems over time:

Exploring model designs more efficiently

AI models contain billions of adjustable parameters. Quantum hardware might one day search that space faster, potentially improving accuracy.

Reducing training costs

If quantum algorithms can speed up parts of the training process, they might shorten training time and reduce energy use.

Improving simulation workflows

Quantum simulation could help train AI models on more accurate physical data—for example, modeling molecules or materials beyond classical capability.

Simulation-based improvement

Quantum simulations can test changes virtually, reducing trial-and-error cycles and energy consumption.

These benefits are theoretical today, but they represent a promising long-term direction.

Answering a Common Question: Why Work on Quantum While AI Still Hallucinates?

Does this eliminate hallucinations?

Not directly. Hallucinations stem from how language models are trained and how they generate text, not from the hardware they are trained on. However, faster training could enable:

improved data grounding (tying AI’s answers to real evidence),

better retrieval systems,

and new model designs that reduce hallucination frequency.

Quantum computing, by contrast, depends on decades of hardware innovation:

cooling systems,

fabrication techniques,

control electronics,

and error correction.

Different challenges, different teams, different timelines. Progress in one does not slow progress in the other.

Investing in quantum now lays groundwork for future computing demands—just as early GPU research enabled today’s AI breakthroughs.

How Quantum Could Impact Cybersecurity, Science, and Industry

Here’s how each area could be affected:

Cybersecurity

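Much of today’s public-key encryption relies on mathematical problems, such as factoring very large numbers, that would take classical computers impractically long to solve. A sufficiently large, fault-tolerant quantum computer could solve some of these problems much faster, which is why governments and organizations are moving to post-quantum encryption standards.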
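The best-known example is Shor’s algorithm, which could break RSA-style encryption by factoring large numbers. It turns factoring into finding the period r of a^x mod N; a quantum computer would find r efficiently, and the rest is ordinary arithmetic. In this Python sketch the period is found by classical brute force, which is only feasible for tiny numbers; the function names are illustrative.

```python
from math import gcd


def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1, by brute force (requires gcd(a, n) == 1).
    This is the step Shor's algorithm would hand to a quantum computer."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_period(n, a):
    """Classical post-processing from Shor's algorithm; None means retry with a new a."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g                  # lucky guess already shares a factor
    r = find_period(a, n)
    half = pow(a, r // 2, n)              # a**(r/2) mod n
    if r % 2 == 1 or half == n - 1:
        return None                       # unlucky choice of a
    p = gcd(half - 1, n)
    return p, n // p


print(factor_from_period(15, 7))
```

For N = 15 and a = 7 the period is 4, and gcd(7^2 - 1, 15) = 3 recovers the factors 3 and 5. For a 2048-bit RSA modulus the brute-force loop would never finish, which is exactly the step a large fault-tolerant quantum computer is expected to speed up.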

Drug discovery and materials science

Molecules behave according to quantum physics, so quantum computers are naturally suited to simulating them. This could cut years of laboratory trial and error and shorten drug development times.

Logistics, finance, and energy grids

Quantum algorithms are being explored for optimization, which means choosing the best combination out of millions of possibilities. This may improve:

delivery routing and supply chains,

market simulations,

and power distribution efficiency.
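The size of these search spaces is easy to underestimate. For a delivery route that visits every stop once and returns to the depot, there are (n - 1)!/2 distinct round trips. The Python sketch below counts them and brute-forces a tiny example classically; it is not a quantum algorithm, and whether quantum hardware delivers practical speedups on such problems is still an open research question.

```python
from itertools import permutations
from math import factorial


def route_count(num_stops):
    """Distinct round trips through num_stops >= 3 locations, counting a route
    and its reverse as the same: (n - 1)! / 2."""
    return factorial(num_stops - 1) // 2


def shortest_route(dist):
    """Brute-force the shortest round trip that starts and ends at stop 0."""
    n = len(dist)
    best_len, best_path = float("inf"), None
    for order in permutations(range(1, n)):
        path = (0, *order, 0)
        length = sum(dist[a][b] for a, b in zip(path, path[1:]))
        if length < best_len:
            best_len, best_path = length, path
    return best_len, best_path


# Hypothetical example: four stops on the corners of a square.
square = [[0, 1, 2, 1],
          [1, 0, 1, 2],
          [2, 1, 0, 1],
          [1, 2, 1, 0]]
print(shortest_route(square))
print(route_count(20))  # already tens of quadrillions of routes
```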

Why governments care

Countries see quantum computing as:

an economic accelerator,

a national security factor,

and a scientific advantage.

Nations that lead in quantum may shape future standards, patents, and defense capabilities.

Remaining Challenges for Quantum Hardware

Researchers highlight several barriers that must still be overcome:

Error-corrected qubits:

Because physical qubits are unstable, multiple qubits must work together to form a logical qubit that can detect and correct mistakes. A large-scale machine may require millions of physical qubits.
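Where do estimates in the millions come from? One standard approach uses the surface code: a patch of code distance d needs 2d^2 - 1 physical qubits, and below the error threshold each increase of d by 2 divides the logical error rate by a roughly constant suppression factor. The Python sketch below assumes that heuristic; the starting error rate and suppression factor are hypothetical illustration values, not Willow’s measured figures.

```python
def physical_qubits(distance):
    """Physical qubits in one rotated surface-code patch of odd distance d:
    d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * distance * distance - 1


def logical_error(distance, error_at_d3=3e-3, suppression=2.0):
    """Heuristic logical error per cycle: each step of +2 in distance divides
    the error by `suppression` (hypothetical values; valid only below threshold)."""
    steps = (distance - 3) // 2
    return error_at_d3 / (suppression ** steps)


def smallest_distance(target_error, error_at_d3=3e-3, suppression=2.0):
    """Smallest odd distance whose estimated logical error meets the target."""
    if suppression <= 1:
        raise ValueError("no improvement with distance: above threshold")
    d = 3
    while logical_error(d, error_at_d3, suppression) > target_error:
        d += 2
    return d


d = smallest_distance(1e-6)
print(d, physical_qubits(d))  # distance and physical qubits for ONE logical qubit
```

Under these assumptions, a single logical qubit with an error rate near one in a million needs a distance-27 patch of 1,457 physical qubits. Multiply that by the many logical qubits a useful algorithm needs, plus extra qubits for other operations, and totals in the millions follow.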

Cooling and scaling:

Quantum processors require cryogenic temperatures near absolute zero. Scaling control systems to manage millions of qubits will require new engineering approaches.

Result reliability:

Without fault tolerance, quantum errors accumulate rapidly. A fault-tolerant machine would detect and correct errors faster than they build up, producing consistent results.

Experts estimate years—possibly decades—before fully fault-tolerant machines reach commercial use.

Q&A: Verifiable Quantum Advantage

Q1: Why is verifiability important?

A: It means the result can be checked independently, for example by repeating the experiment on another quantum computer of similar caliber and getting the same answer.

Q2: Why is the ~13,000× speedup notable?

A: It puts quantum hardware in a regime where classical simulation becomes impractical.

Q3: Why is reversing quantum information hard?

A: Any small error can collapse the entire computation, making consistency extremely difficult.

Q4: Is this useful today?

A: Not directly. It demonstrates engineering progress toward future applications.

Q5: Will quantum computing fix AI hallucinations?

A: No. Hallucinations are a language reasoning issue, not a compute limitation.

What This Means: Verifiable Quantum Advantage

This milestone signals that quantum computing is moving beyond theory and into repeatable engineering progress. While applications remain experimental, improvements in accuracy, error correction, and verification show momentum toward useful hardware.

If future breakthroughs continue, quantum computing could accelerate scientific research, improve industrial efficiency, and reshape cybersecurity standards. For businesses and policymakers, today’s result is a reminder that quantum computing is likely to influence how technology evolves over the next decade—and that preparing early may offer meaningful advantages.

As quantum hardware advances, the real advantage won’t just be faster machines—it will be what humanity chooses to do with them.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant used for research and drafting. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.