Researchers collaborate with advanced AI-driven data modeling tools in a modern lab environment, illustrating how reasoning-focused systems like Gemini 3 Deep Think are being applied to scientific and engineering workflows. Image Source: ChatGPT-5.2

Gemini 3 Deep Think Expands Access as Google Targets Scientific and Engineering Breakthroughs

Google DeepMind has released a major upgrade to Gemini 3 Deep Think, enhancing its ability to solve complex problems across scientific research, mathematics, and engineering. The updated model was refined in collaboration with researchers to improve multi-step reasoning, mathematical rigor, and performance on demanding academic benchmarks, while also strengthening its usefulness for practical engineering workflows.

The release comes as major AI labs, including OpenAI and Anthropic, increasingly emphasize reasoning-focused systems, underscoring growing industry attention to models designed for scientific and technical tasks.

In addition to these capability upgrades, Google is expanding access beyond internal testing — making Deep Think available to Google AI Ultra subscribers in the Gemini app and, for the first time, to select researchers and enterprises through the Gemini API Early Access Program. Built to tackle problems with incomplete data and no clear single answer, the new version is aimed at helping scientists and engineers accelerate discovery and real-world experimentation.

Key Takeaways: Gemini 3 Deep Think Benchmarks, Access, and Research Applications

Gemini 3 Deep Think is Google’s most advanced reasoning mode, optimized for scientific research, engineering, and advanced mathematics.

The model is now available to Google AI Ultra subscribers and through a Gemini API Early Access Program for researchers and enterprises.

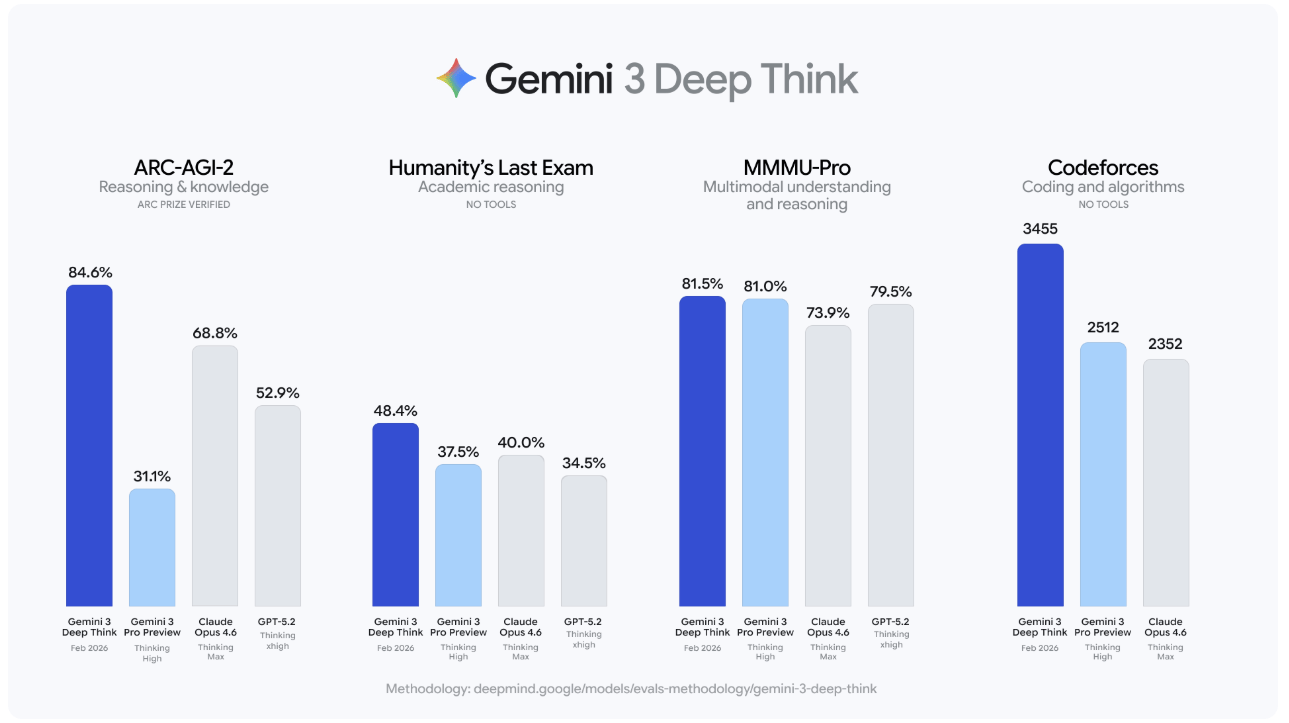

Deep Think scored 84.6% on ARC-AGI-2 and 48.4% on Humanity’s Last Exam (without tools), benchmarks designed to evaluate frontier reasoning abilities.

The system achieved a Codeforces Elo of 3455, indicating strong competitive programming and algorithmic reasoning performance.

Google reports gold-medal-level results on the International Mathematical Olympiad 2025 and on the written theory sections of the International Physics Olympiad 2025 and International Chemistry Olympiad 2025.

Early testing includes academic use cases such as peer-review analysis and semiconductor fabrication optimization.

Gemini 3 Deep Think in Research: From Abstract Reasoning to Scientific Workflows

Google describes Deep Think as a reasoning system built specifically for research environments where problems do not have a single correct answer and datasets may be messy or incomplete.

Unlike general-purpose chat models, Gemini 3 Deep Think is engineered to combine:

Deep scientific knowledge

Mathematical rigor

Algorithmic precision

Practical engineering utility

The goal, according to Google, is to enable researchers to move from theoretical exploration to actionable solutions more efficiently.

Early academic testing suggests promising use cases:

Lisa Carbone, a mathematician at Rutgers University, studies advanced mathematical structures used by high-energy physicists working to reconcile Einstein’s theory of gravity with quantum mechanics, an area with limited existing training data for AI systems. She used Deep Think to review a highly technical mathematics paper, and the model identified a subtle logical flaw that had previously passed human peer review unnoticed.

At Duke University, the Wang Lab used Deep Think to optimize fabrication methods for complex crystal growth aimed at discovering new semiconductor materials. The model designed a recipe for growing thin films larger than 100 μm, achieving a precise engineering target that previous methods had difficulty reaching.

Anupam Pathak, an R&D lead in Google’s Platforms and Devices division and former CEO of Liftware, tested Deep Think to accelerate the design of physical components, exploring how reasoning models can shorten iteration cycles in hardware development.

These early examples illustrate how Google is presenting Deep Think as a tool intended for research collaboration rather than general-purpose assistance.

Gemini 3 Deep Think Benchmarks: ARC-AGI-2, Codeforces, and Olympiad Results

Google says earlier specialized versions of Deep Think demonstrated strong performance on advanced reasoning tasks, achieving gold-medal-level results at world championships in mathematics and programming, including the International Mathematical Olympiad and the International Collegiate Programming Contest World Finals. More recently, Deep Think has been used to support research-level mathematical exploration through specialized agent systems.

The updated Deep Think mode builds on earlier research efforts and reports improved results across several widely used reasoning and scientific benchmarks.

Academic and Reasoning Benchmarks

Humanity’s Last Exam: 48.4% without tools — a benchmark designed to test the limits of frontier AI systems with difficult, expert-level questions spanning multiple disciplines.

ARC-AGI-2: 84.6%, which Google describes as an unprecedented result for the benchmark, verified by the ARC Prize Foundation. ARC-AGI-2 evaluates abstract reasoning and the ability to apply rules in novel situations, emphasizing compositional reasoning and contextual problem solving rather than memorization.

MMMU-Pro: 81.5% — a multimodal benchmark designed to test whether models can reason across both visual and textual information in advanced, multidisciplinary problems, including science and mathematics.

International Mathematical Olympiad 2025: Gold-medal-level performance on the problems from one of the world’s most challenging mathematics competitions for pre-university students, which emphasizes creative, proof-based reasoning.

Competitive Programming

Codeforces Elo: 3455, a rating derived from performance in timed competitive programming contests. Higher ratings reflect stronger algorithmic problem solving under time pressure and a higher standing relative to other participants.

An Elo rating at this level would place the system among the top-rated competitors on Codeforces, reflecting advanced algorithmic reasoning under tight time constraints.
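For readers unfamiliar with Elo-style ratings, the sketch below shows the classic Elo expected-score formula, which converts a rating gap into a predicted head-to-head win probability. It is a simplified illustration under stated assumptions: Codeforces computes ratings with its own Elo-based system rather than this exact formula alone, and both the function name `elo_win_probability` and the 2400-rated opponent are hypothetical choices made for this example.

```python
def elo_win_probability(rating_a: float, rating_b: float) -> float:
    """Classic Elo expected score: probability that A outperforms B.

    Simplified illustration only; Codeforces uses its own Elo-based
    rating system, not this formula in isolation.
    """
    # Every 400-point gap multiplies the odds in A's favor by 10.
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    # Hypothetical matchup: a 3455-rated competitor vs. a 2400-rated one.
    p = elo_win_probability(3455, 2400)
    print(f"Expected score for the 3455-rated side: {p:.4f}")
```

Under this simplified model, equal ratings give an expected score of exactly 0.5, while a 1,055-point gap gives the higher-rated side an expected score above 0.99, which is why ratings in the 3000s are so rare.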

While benchmark results help measure reasoning capability under controlled conditions, real-world performance can vary depending on data quality, tooling, and how models are integrated into practical workflows.

Beyond mathematics and competitive programming, Google says Gemini 3 Deep Think performs strongly across scientific domains such as physics and chemistry. Google reports gold-medal-level performance on the written theory sections of the International Physics Olympiad 2025 and International Chemistry Olympiad 2025, as well as a 50.5% score on CMT-Benchmark, which evaluates advanced theoretical physics reasoning.

These results suggest Deep Think is being developed not only as a coding or mathematics model, but as a broader scientific reasoning system capable of operating across multiple academic domains.

In addition to benchmark performance, Google says the updated system is intended to support practical engineering use cases — enabling researchers to interpret complex datasets and engineers to model physical systems through code. Expanded API access is designed to make those capabilities available inside real research and development workflows.

Q&A: What Makes Gemini 3 Deep Think Different?

Q: How is Deep Think different from standard Gemini models?

A: Gemini 3 Deep Think is a specialized reasoning mode optimized for research-level mathematics, scientific reasoning, and engineering tasks. It emphasizes multi-step logical exploration, algorithmic rigor, and technical domain expertise rather than conversational fluency.

Q: Who can access the updated Deep Think mode?

A: The updated version is available to Google AI Ultra subscribers within the Gemini app. Researchers, engineers, and enterprises can apply for access via the Gemini API Early Access Program.

Q: Is this aimed at general consumers?

A: No. The model is targeted toward scientists, engineers, and research institutions tackling complex, open-ended problems in physics, chemistry, mathematics, and engineering.

Q: How does Deep Think compare to general-purpose AI models?

A: While general models prioritize versatility and conversational performance, Gemini 3 Deep Think is tuned for multi-step reasoning, mathematical rigor, and complex scientific problem solving. Its design emphasizes logical consistency and technical depth over speed or casual interaction.

Q: Why is API access important for researchers and enterprises?

A: API access allows organizations to integrate Deep Think directly into existing research pipelines, simulation workflows, and engineering tools. This enables experimentation at scale rather than limiting use to standalone chat interfaces.

What This Means: AI Reasoning Models Enter Scientific Research Workflows

The release of Gemini 3 Deep Think highlights how frontier AI systems are increasingly being introduced not simply as conversational assistants, but as tools intended to participate directly in scientific and engineering work.

Who should care: If you are a research lab, university team, semiconductor manufacturer, advanced materials startup, or enterprise R&D division, this update may influence how you evaluate AI tools inside active research workflows. The model’s benchmark performance suggests Google is prioritizing reasoning reliability and technical depth for environments where errors carry real scientific or financial consequences.

Why it matters now: AI progress is increasingly judged by its ability to operate in difficult, ambiguous domains rather than just generate fluent answers. By opening API access to researchers and enterprises, Google is encouraging experimentation inside real research pipelines — where models can help analyze complex datasets, surface logical inconsistencies, or accelerate hypothesis testing.

What decision this affects: Organizations may begin evaluating whether specialized reasoning models belong in early-stage research workflows. Early adoption may reduce iteration cycles, improve error detection, and expand the range of experiments teams can attempt.

More broadly, industry development is pointing toward AI systems that function as structured collaborators in scientific discovery, supporting human researchers rather than replacing them. Institutions that learn how to combine human expertise with advanced reasoning tools early may gain a meaningful advantage as AI-assisted research becomes more common.

The frontier is no longer just conversational AI — it is computational reasoning applied directly to scientific discovery.

Sources:

Google Blog: Gemini 3 Deep Think Announcement

https://blog.google/innovation-and-ai/models-and-research/gemini-models/gemini-3-deep-think/

Google DeepMind Blog: Accelerating Mathematical and Scientific Discovery with Gemini Deep Think

https://deepmind.google/blog/accelerating-mathematical-and-scientific-discovery-with-gemini-deep-think/

Google DeepMind Blog: Gemini Deep Think Achieves Gold Medal Standard at the International Mathematical Olympiad

https://deepmind.google/blog/advanced-version-of-gemini-with-deep-think-officially-achieves-gold-medal-standard-at-the-international-mathematical-olympiad/

Google DeepMind Blog: Gemini Achieves Gold-Medal Level at the International Collegiate Programming Contest World Finals

https://deepmind.google/blog/gemini-achieves-gold-medal-level-at-the-international-collegiate-programming-contest-world-finals/

Google Forms: Gemini API Early Access Interest Form

https://docs.google.com/forms/d/e/1FAIpQLSc6PZY8KkEAOLfuNc8znxHY8HuphkEOMoN4jbE6n2JSturXmg/viewform?pli=1

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.