Image Source: ChatGPT-4o

Elon Musk Plans to Rewrite Human History Using Grok AI

Elon Musk says his AI company, xAI, plans to retrain its Grok chatbot by rewriting the entire foundation of its knowledge base—including the full record of human history. Musk claims that existing AI models are built on biased or inaccurate information, and says Grok will instead be trained on a corrected version of history shaped by user-submitted facts.

A Plan to "Correct" the Record

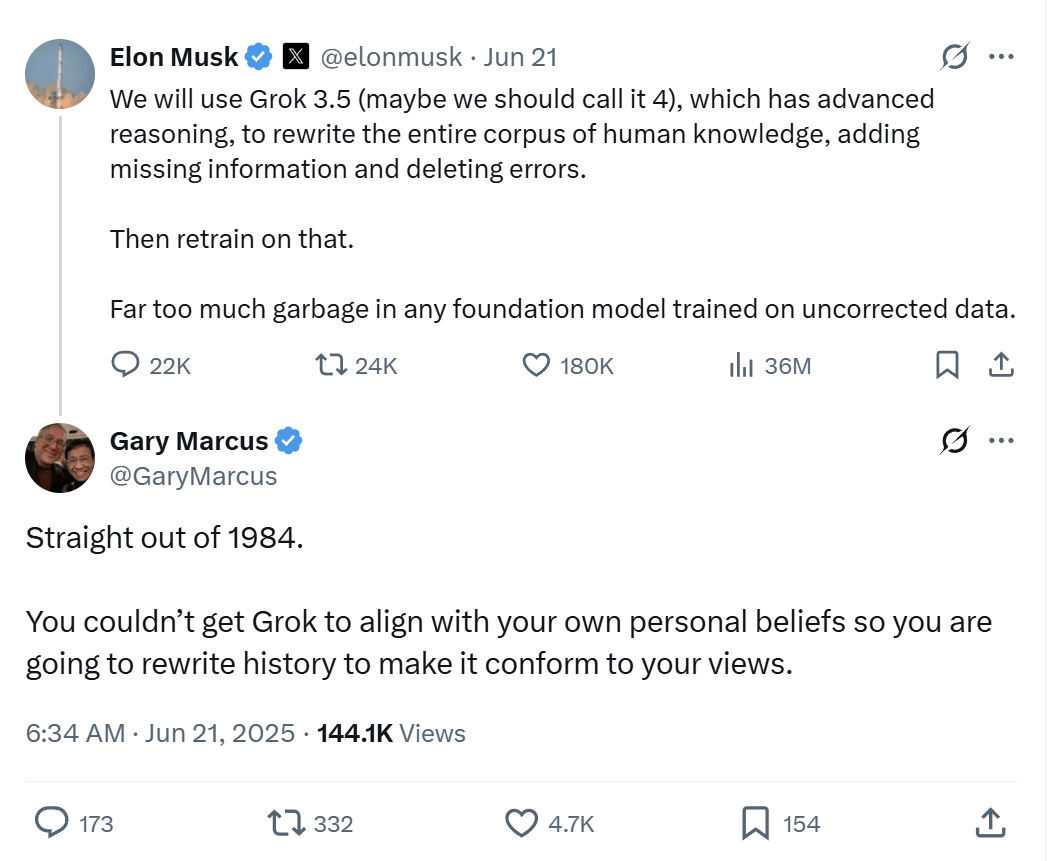

In a post on X (formerly Twitter), Musk announced plans to retrain Grok on a “cleaned” dataset by first using the upcoming Grok 3.5 model to “rewrite the entire corpus of human knowledge, adding missing information and deleting errors.” He described existing training data used in other AI models as full of “garbage” and “uncorrected data.”

According to Musk, Grok 3.5 will be equipped with “advanced reasoning” and tasked with purging inaccuracies from the internet’s record. Once revised, the new knowledge base will be used to retrain the model from scratch.

Musk, who co-founded OpenAI but later distanced himself from the company, has frequently criticized mainstream AI systems like ChatGPT for what he calls political bias. He has positioned Grok as an “anti-woke” alternative—an approach that aligns with his broader push against political correctness.

Since taking over Twitter in 2022, Musk has relaxed content moderation on the platform, allowing a wave of conspiracy theories, extremist rhetoric, and false claims to circulate, some of which he has echoed or amplified. In response to criticism, he has pointed to “Community Notes,” a feature expanded and renamed under his ownership from the earlier Birdwatch pilot, which lets users append context to misleading posts.

Critics Warn of Dangerous Precedent

His latest move, however, has triggered warnings from AI researchers and philosophers alike.

“Straight out of 1984,” wrote Gary Marcus, an AI entrepreneur and professor emeritus of neural science at NYU, on X. “You couldn’t get Grok to align with your own personal beliefs so you are going to rewrite history to make it conform to your views.”

Philosophy of science professor Bernardino Sassoli de’ Bianchi of the University of Milan called the proposal “dangerous,” warning that altering training data to reflect ideological preferences amounts to “narrative control.” He added: “Rewriting training data to match ideology is wrong on every conceivable level.”

As part of Grok’s retraining process, Musk has invited X users to contribute what he called “divisive facts”—defined as politically incorrect yet “factually true.” The open call has already drawn a flood of controversial claims, many of which have been widely discredited. Among the responses: Holocaust distortion, anti-vaccine misinformation, pseudoscientific racism, and climate change denial.

What This Means

Elon Musk’s plan to rewrite Grok’s training data represents more than a technical pivot—it’s a direct challenge to how truth is defined, preserved, and propagated in the digital age. AI systems already influence what billions of people see, learn, and believe. When the data that trains these systems is rewritten to fit the worldview of a single individual or community, the line between information and ideology begins to blur.

This isn’t just about one model. Musk is inviting users to reshape Grok’s foundation with claims he describes as politically incorrect but factually true, and many of the submissions so far are discredited conspiracy theories, misleading scientific assertions, and distorted versions of history. If those submissions are used to retrain Grok, the result could be an AI system that presents misinformation as fact under the guise of correction.

The danger isn’t hypothetical. AI-generated content is increasingly used in education, media, and policymaking. A model trained on distorted data doesn’t just risk confusion—it risks normalizing falsehoods at scale.

At its core, this move reframes AI not as a tool for understanding the world, but as a mechanism for rewriting it. And once a rewritten version of history is trained into an AI system, undoing that change becomes far harder.

If powerful individuals can redefine historical “facts” through algorithmic design, the implications extend far beyond one chatbot. They reach into the heart of public trust, democratic discourse, and the integrity of information itself.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.