Claude Sonnet 4.6 enables AI systems to assist developers and enterprise teams by reasoning across large datasets and operating software workflows in real-world environments. Image Source: ChatGPT-5.2

Claude Sonnet 4.6: 1M Context Window, Stronger Coding, and Near-Opus Performance

Anthropic has released Claude Sonnet 4.6, an upgraded version of its widely used mid-tier AI model that significantly improves coding performance, computer interaction, and long-context reasoning while maintaining the same pricing as its predecessor. The model introduces a 1-million-token context window (beta) and is now the default model across Claude.ai for Free and Pro users.

The update matters because capabilities that previously required Anthropic’s higher-cost Opus models are now available in a more affordable tier, lowering the barrier for organizations deploying AI into everyday workflows. Rather than focusing solely on peak intelligence, the release emphasizes reliability, consistency, and sustained performance across long, real-world tasks.

The announcement reflects a broader industry trend toward agentic AI systems — models designed to operate software, manage workflows, and reason across large bodies of information instead of answering isolated prompts.

For developers, enterprise teams, and AI product builders, Sonnet 4.6 changes how practical deployment decisions are made: advanced reasoning and automation capabilities are now available in models affordable enough to run continuously.

Here’s what this means for organizations evaluating AI models for production use.

Key Takeaways: Claude Sonnet 4.6 Features, Benchmarks, and Enterprise Impact

Claude Sonnet 4.6 becomes Anthropic’s default model, replacing Sonnet 4.5 across Claude.ai Free and Pro plans.

Introduces a 1-million-token context window, enabling analysis of entire codebases, contracts, and research collections.

Achieves near-Opus-level benchmark performance across coding, computer use, and enterprise reasoning tasks.

Shows major gains in agentic computer use, allowing AI to operate software interfaces without custom APIs.

Developers preferred Sonnet 4.6 over Sonnet 4.5 roughly 70% of the time in early testing.

Maintains pricing at $3 input / $15 output per million tokens, improving performance-to-cost efficiency.

Adds developer features including adaptive thinking, context compaction, and expanded tool execution.

Safety evaluations report improved resistance to prompt-injection attacks and strong alignment behavior.

Claude Sonnet 4.6 Improves Coding, Reasoning, and Agent Workflows

Anthropic describes Claude Sonnet 4.6 as a comprehensive upgrade across the types of tasks increasingly associated with real-world AI deployment, including coding, long-context reasoning, agent planning, computer interaction, and knowledge work. Rather than introducing a single breakthrough capability, the release focuses on improving reliability and consistency across complex, multi-step workflows.

Early testing suggests the improvements are most noticeable in software development environments. Developers reported that Sonnet 4.6 reads existing context more carefully before modifying code, reducing unnecessary rewrites and duplicated logic — a common frustration in earlier models. According to Anthropic, users preferred Sonnet 4.6 over Sonnet 4.5 roughly 70% of the time in Claude Code testing, citing stronger instruction following and more dependable execution during extended sessions.

The model also showed gains in sustained reasoning, particularly when working across large bodies of information. With its expanded context window and improved reasoning across long inputs, Sonnet 4.6 can analyze entire repositories, lengthy contracts, or multiple research documents within a single request while maintaining coherence across tasks that unfold over many steps.

Anthropic says these improvements make performance previously associated with Opus-class models available within the Sonnet tier for many practical workloads, including office automation, document analysis, and collaborative knowledge work. Testers reported fewer hallucinations, fewer false claims of task completion, and more consistent follow-through when executing multi-step instructions.

Users also preferred Sonnet 4.6 to Claude Opus 4.5 in 59% of comparisons during internal evaluations. Participants described the model as less prone to both overengineering and “laziness” while still maintaining strong reasoning performance, an outcome Anthropic attributes to improvements in instruction adherence and planning behavior.

Beyond coding and reasoning, early customers also reported improvements in structured visual outputs such as frontend interfaces, documents, and analytical layouts. Outputs generated by Sonnet 4.6 were described as more polished, with improved layouts, animations, and design sensibility, often requiring fewer iteration cycles to reach production-ready quality.

These changes reflect Anthropic’s continued emphasis on making AI models more dependable collaborators for sustained work rather than tools optimized only for short prompts or isolated tasks.

AI Computer Use Advances as Claude Learns to Operate Software Like Humans

One of the most significant areas of progress in Claude Sonnet 4.6 is computer use — Anthropic’s effort to enable AI models to operate software directly rather than relying on APIs or custom integrations. Instead of requiring developers to build connectors between systems, the model interacts with applications in ways similar to a human user, navigating interfaces by clicking, typing, and moving between programs.

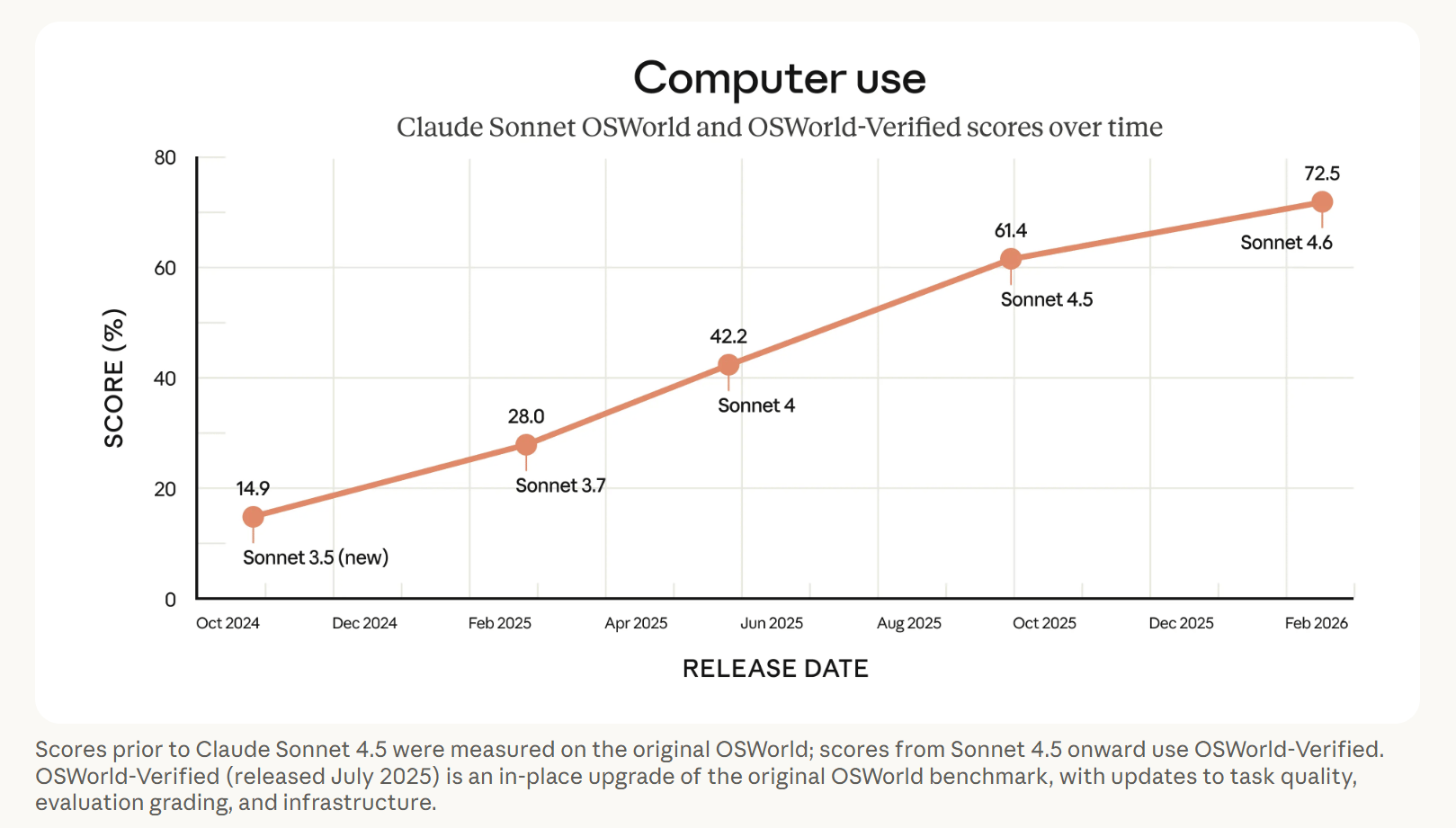

Anthropic first introduced general-purpose computer use capabilities in October 2024, describing the early version as experimental and sometimes unreliable. Since then, the company has focused on improving consistency and task completion across real software environments. According to Anthropic, Sonnet models have made steady gains over sixteen months, culminating in substantial improvements with Sonnet 4.6.

Performance is measured using OSWorld, a benchmark designed to evaluate how effectively AI systems can complete tasks inside real applications such as Chrome, LibreOffice, and VS Code running on a simulated computer. Unlike traditional benchmarks that test isolated reasoning problems, OSWorld evaluates whether a model can execute multi-step workflows without specialized APIs — closer to how employees actually use software.

Early users report that Sonnet 4.6 now approaches human-level capability on certain tasks, including navigating complex spreadsheets, completing multi-step web forms, and coordinating actions across multiple browser tabs. These workflows require sustained planning, memory of prior actions, and adaptation to changing interface states, all areas where earlier models often struggled.

Anthropic notes that the model still trails highly skilled human operators, particularly in edge cases requiring deep domain expertise. However, the rate of improvement suggests that computer-using AI systems are becoming increasingly practical for everyday work tasks, especially in environments built on legacy software that lacks modern automation interfaces.

The capability also introduces new security considerations. Because the model interacts with live environments, malicious instructions hidden on webpages — known as prompt injection attacks — can attempt to manipulate behavior. Anthropic reports that Sonnet 4.6 shows significantly improved resistance to such attacks compared to Sonnet 4.5, performing similarly to Opus 4.6 in safety evaluations.

As computer-use capabilities improve, AI moves closer to functioning as an operational assistant capable of executing workflows across existing software ecosystems rather than serving solely as a conversational interface.

Benchmarks Show Sonnet 4.6 Approaching Opus Performance at Lower Cost

Anthropic evaluated Claude Sonnet 4.6 across a range of applied benchmarks designed to measure real-world AI work rather than isolated reasoning tasks. The results show the Sonnet tier closing much of the performance gap with Opus-class models while maintaining significantly lower operating cost — a development that could influence deployment decisions for enterprise teams.

In agentic coding, measured using SWE-bench Verified, Sonnet 4.6 achieved 79.6%, nearly matching Opus 4.6’s 80.8% performance. The benchmark evaluates whether models can understand existing codebases and implement correct fixes across complex repositories, suggesting that advanced software engineering capability is increasingly available without requiring top-tier models.

Performance parity appears even clearer in computer-use tasks. On OSWorld Verified, which evaluates an AI system’s ability to operate real software environments, Sonnet 4.6 scored 72.5%, effectively matching Opus 4.6 at 72.7%. The result supports Anthropic’s claim that computer-operating agents are becoming practical for everyday workflows involving browsers, documents, and development tools.

The model also demonstrated strong reliability when using external tools. In τ²-bench, which measures agentic tool use across industry scenarios, Sonnet 4.6 achieved 91.7% performance in retail workflows and 97.9% in telecom environments, indicating improved consistency when coordinating multi-step actions across systems.

Enterprise productivity gains were reflected in office task evaluations using the GDPval-AA benchmark, which reports Elo scores: Sonnet 4.6 achieved 1633, surpassing Opus 4.6’s 1606. The benchmark measures performance across common knowledge-work activities such as document analysis, planning, and structured business reasoning.

Reasoning improvements were also visible in ARC-AGI-2, a benchmark designed to test novel problem-solving ability. Sonnet 4.6 reached 58.3%, a substantial increase from 13.6% in Sonnet 4.5, indicating stronger generalization when solving unfamiliar problems.

Across these evaluations, differences between model tiers appear increasingly defined by efficiency rather than capability ceilings — allowing organizations to deploy advanced reasoning and agent workflows at scale without relying exclusively on premium models.
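To put the reported numbers side by side, here is a small sketch that computes the Sonnet-to-Opus gap on each benchmark cited above. It also converts the GDPval-AA Elo difference into an implied head-to-head preference rate. That conversion assumes the benchmark uses the conventional 400-point logistic Elo scale, which the article does not confirm.

```python
# Scores as reported in the article: (Sonnet 4.6, Opus 4.6).
SCORES = {
    "SWE-bench Verified (%)": (79.6, 80.8),
    "OSWorld Verified (%)": (72.5, 72.7),
    "GDPval-AA (Elo)": (1633, 1606),
}


def gap(sonnet: float, opus: float) -> float:
    """Sonnet minus Opus, rounded to one decimal place."""
    return round(sonnet - opus, 1)


def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo formula.

    Assumption: the 400-point logistic scale applies to this benchmark.
    """
    return 1 / (1 + 10 ** ((rating_b - rating_a) / 400))


def summarize() -> dict:
    """Map each benchmark name to its Sonnet-minus-Opus gap."""
    return {name: gap(sonnet, opus) for name, (sonnet, opus) in SCORES.items()}
```

Under that assumption, a 27-point Elo lead corresponds to an expected preference rate of roughly 54%, which is a modest edge rather than a decisive one.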

Replit, Databricks, and GitHub Report Early Enterprise Results

Several early enterprise and developer partners reported real-world performance gains with Claude Sonnet 4.6, offering early validation beyond benchmark testing. Their feedback highlights improvements across software development, enterprise document analysis, and large-scale agent workflows — areas where reliability and consistency often matter more than raw model intelligence.

Replit President Michele Catasta said:

“The performance-to-cost ratio of Claude Sonnet 4.6 is extraordinary—it’s hard to overstate how fast Claude models have been evolving in recent months. Sonnet 4.6 outperforms on our orchestration evals, handles our most complex agentic workloads, and keeps improving the higher you push the effort settings.”

Databricks CTO of Neural Networks Hanlin Tang added:

“Claude Sonnet 4.6 matches Opus 4.6 performance on OfficeQA, which measures how well a model can read enterprise documents (charts, PDFs, tables), pull the right facts, and reason from those facts. It’s a meaningful upgrade for document comprehension workloads.”

GitHub VP of Product Joe Binder noted:

“Out of the gate, Claude Sonnet 4.6 is already excelling at complex code fixes, especially when searching across large codebases is essential. For teams running agentic coding at scale, we’re seeing strong resolution rates and the kind of consistency developers need.”

Across partners, the feedback points to a common theme: improvements are appearing not only in isolated tests but in sustained production environments where models must operate reliably across complex workflows.

How Claude’s 1M-Token Context Enables Long-Horizon AI Planning

Claude Sonnet 4.6 includes a 1-million-token context window, a capability Anthropic introduced in earlier Claude models that allows the system to process extremely large bodies of information within a single interaction. In practical terms, this capacity enables the model to analyze entire code repositories, lengthy legal contracts, multi-document research archives, or extended conversation histories without losing awareness of earlier details.
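As a rough illustration of what "fits in a single interaction" means in practice, the sketch below estimates whether a folder of text files would fit inside a 1-million-token window. The four-characters-per-token ratio is a common rule of thumb, not Claude's actual tokenizer, and the file extensions and output reserve are arbitrary assumptions chosen for the example.

```python
import os

CONTEXT_WINDOW_TOKENS = 1_000_000  # figure stated in the article
CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not Claude's tokenizer


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def estimate_repo_tokens(root: str, extensions=(".py", ".md", ".txt")) -> int:
    """Sum rough token estimates over matching text files under root."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(extensions):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as handle:
                    total += estimate_tokens(handle.read())
    return total


def fits_in_context(token_count: int, reserve_for_output: int = 50_000) -> bool:
    """True if the input plus a reserve for the reply fits in the window."""
    return token_count + reserve_for_output <= CONTEXT_WINDOW_TOKENS
```

For example, `fits_in_context(estimate_repo_tokens("path/to/repo"))` gives a quick yes-or-no before sending a large request. An exact count would require the provider's own token counting.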

Traditional AI systems operate with far smaller context limits, forcing users to summarize or repeatedly reintroduce information as tasks grow longer. A larger context window reduces this fragmentation by allowing the model to maintain continuity across extended workflows, improving consistency when solving problems that unfold over many steps.

Anthropic says the expanded context particularly benefits long-horizon reasoning, where an AI system must track goals, constraints, and prior decisions over time. Examples include coordinating multi-stage software refactoring projects, reviewing large collections of enterprise documents for compliance analysis, or planning complex operational tasks that require referencing earlier outputs.

The capability also supports emerging agent-based workflows, in which models operate semi-autonomously while maintaining memory of prior actions. By retaining more historical context, Sonnet 4.6 can better align later decisions with earlier instructions, reducing errors caused by forgotten constraints or incomplete understanding.

While larger context windows do not inherently make models more intelligent, they significantly expand the range of problems AI systems can handle without human intervention. For organizations deploying AI across sustained workflows, context size increasingly functions as an operational constraint rather than a technical specification.

Developer Platform Updates Add Adaptive Thinking and Tool Automation

Alongside model improvements, Anthropic introduced several updates to the Claude Developer Platform aimed at making AI systems easier to integrate into production workflows and long-running applications.

Claude Sonnet 4.6 supports adaptive thinking and extended thinking modes, allowing developers to balance response speed with deeper reasoning depending on task complexity. This gives applications greater control over when the model prioritizes efficiency versus more deliberate problem-solving — an increasingly important capability for agent-based systems operating across varied workloads.
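One way an application might make that trade-off is to request a thinking budget only for tasks it considers complex. The sketch below builds a Messages API request body and, when asked, adds the extended-thinking field in the shape Anthropic has documented for earlier models (`thinking` with `type` and `budget_tokens`). Whether that exact shape is the recommended one for Sonnet 4.6, and how adaptive thinking is configured, are assumptions to check against the current documentation. The `deliberate` flag and default token numbers are hypothetical.

```python
MODEL_ID = "claude-sonnet-4-6"  # identifier given in the article
ANSWER_TOKENS = 2048  # hypothetical allowance for the visible reply


def build_payload(prompt: str, deliberate: bool, thinking_budget: int = 4096) -> dict:
    """Build a request body, optionally enabling extended thinking.

    Assumption: the extended-thinking field shape from earlier Claude
    models also applies to this model.
    """
    payload = {
        "model": MODEL_ID,
        "max_tokens": ANSWER_TOKENS,
        "messages": [{"role": "user", "content": prompt}],
    }
    if deliberate:
        payload["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
        # max_tokens has to exceed the thinking budget, so leave room for both.
        payload["max_tokens"] = thinking_budget + ANSWER_TOKENS
    return payload
```

A caller could pass `deliberate=True` for a multi-file refactoring request and `deliberate=False` for a quick lookup, keeping fast paths fast.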

The platform also introduces context compaction (beta), which automatically summarizes older portions of a conversation as interactions approach context limits. By preserving relevant information while reducing token usage, the feature helps maintain continuity during long-running sessions without requiring developers to manually manage conversation history.
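The idea behind compaction can be sketched on the client side. This is not Anthropic's implementation, which runs on the platform; it is only an illustration of the concept. When the conversation exceeds a token budget, older turns are replaced with a summary and the most recent turns are kept verbatim. The token heuristic and `placeholder_summary` are hypothetical stand-ins; a real system would likely ask a model to write the summary.

```python
def rough_tokens(text: str) -> int:
    """Assumption: about four characters per token."""
    return max(1, len(text) // 4)


def placeholder_summary(messages: list) -> str:
    """Hypothetical summarizer: keeps the first 60 characters of each turn."""
    return " | ".join(m["content"][:60] for m in messages)


def compact_history(messages: list, budget_tokens: int, keep_recent: int = 4,
                    summarize=placeholder_summary) -> list:
    """Replace older turns with one summary message when over budget."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    total = sum(rough_tokens(m["content"]) for m in messages)
    if total <= budget_tokens or len(messages) <= keep_recent:
        return list(messages)  # nothing to compact
    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    summary = {
        "role": "user",
        "content": "Summary of earlier conversation: " + summarize(older),
    }
    return [summary] + recent
```

Keeping the latest turns untouched preserves the details the model is most likely to need next, while the summary carries forward earlier goals and constraints at a fraction of the token cost.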

On the Claude API, web search and fetch tools can now automatically write and execute code to filter and process search results, keeping only relevant content in context. Anthropic says this improves both response quality and token efficiency by reducing unnecessary data processing during retrieval tasks.
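To make the filtering step concrete, here is a minimal sketch of the kind of code that could run between retrieval and the model's context. It keeps only paragraphs that mention a query term and stops at a character cap. The result structure (`url` and `text` keys), the keyword match, and the cap are all assumptions for illustration; the article does not describe what code the model actually writes.

```python
def filter_results(results: list, query_terms: list, max_chars: int = 2000) -> list:
    """Keep paragraphs that mention any query term, up to max_chars in total.

    Each result is assumed to be a dict with "url" and "text" keys.
    """
    terms = [term.lower() for term in query_terms]
    kept = []
    used = 0
    for result in results:
        for paragraph in result["text"].split("\n\n"):
            lowered = paragraph.lower()
            if any(term in lowered for term in terms):
                if used + len(paragraph) > max_chars:
                    return kept  # cap reached; drop the rest
                kept.append({"url": result["url"], "excerpt": paragraph})
                used += len(paragraph)
    return kept
```

Only the surviving excerpts would be placed in context, which is where the token savings described above would come from.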

Additionally, code execution, memory, programmatic tool calling, tool search, and tool use examples are now generally available, expanding Claude’s ability to interact with external systems and perform structured multi-step operations through the API.

Anthropic also expanded enterprise integrations through its Claude for Excel add-in, which now supports MCP connectors. These integrations allow users to pull data directly from platforms such as S&P Global, Moody’s, PitchBook, Daloopa, LSEG, and FactSet without leaving spreadsheets, enabling AI-assisted analysis within existing financial and research workflows.

These platform updates emphasize Anthropic’s focus on enabling persistent, tool-using AI systems that operate within real software environments rather than standalone chat interfaces.

Availability and How to Access Claude Sonnet 4.6

Claude Sonnet 4.6 is available immediately across Anthropic’s ecosystem, including Claude.ai, the Claude Developer Platform, and major cloud integrations.

The model is now the default for users on Claude’s Free and Pro plans, where it replaces Sonnet 4.5. API pricing is unchanged from Sonnet 4.5, starting at $3 per million input tokens and $15 per million output tokens.
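For budgeting, the per-token rates translate into request costs with simple arithmetic. The sketch below assumes the flat base rates quoted above apply to the whole request. Because the rates are described as "starting at", some usage may be billed differently, so treat the result as a lower-bound estimate.

```python
INPUT_USD_PER_MILLION = 3.00    # rate quoted in the article
OUTPUT_USD_PER_MILLION = 15.00  # rate quoted in the article


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate request cost in USD, assuming flat base rates throughout."""
    return (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    ) / 1_000_000
```

A request with 200,000 input tokens and 4,000 output tokens would come to about $0.66 under these assumptions, with input tokens accounting for $0.60 of that.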

Developers can access the model through the Claude API using the model identifier claude-sonnet-4-6, enabling integration into applications, agent workflows, and enterprise systems.
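A minimal sketch of such a request, using only the Python standard library, might look like the following. The endpoint, header names, and version string follow Anthropic's publicly documented Messages API. The helper names, the default `max_tokens`, and the choice to build the request separately from sending it are assumptions made for the example.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
MODEL_ID = "claude-sonnet-4-6"  # identifier given in the article


def build_request(prompt: str, api_key: str, max_tokens: int = 1024) -> urllib.request.Request:
    """Build (but do not send) a Messages API request."""
    body = {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and return the parsed JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a valid key, `send(build_request("Summarize this contract.", api_key))` would return the parsed response. Most teams would use Anthropic's official SDK instead; the raw request is shown only to make the moving parts visible.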

Claude Sonnet 4.6 is available across all Claude subscription tiers, including:

Free and Pro plans (now using Sonnet 4.6 as the default model)

Max

Team

Enterprise

Sonnet 4.6 is also supported across:

Claude Cowork

Claude Code

Anthropic’s API ecosystem

Major cloud platforms offering Claude models

Anthropic has additionally expanded capabilities available on the free tier, which now includes features such as file creation, connectors, skills, and context compaction.

For enterprise users, Claude’s Excel add-in supports MCP connectors that allow the model to access external data sources directly within spreadsheet workflows, extending AI-assisted analysis into existing financial and research environments.

Safety Evaluations Show Strong Alignment and Prompt-Injection Resistance

Anthropic reports that Claude Sonnet 4.6 underwent extensive safety evaluations prior to release, with results indicating performance comparable to or safer than recent Claude models. According to the company’s safety researchers, the model demonstrated “a broadly warm, honest, prosocial, and at times funny character,” alongside strong adherence to safety guidelines and no signs of major high-risk misalignment.

A key focus of testing involved resistance to prompt-injection attacks, a growing security concern for AI systems that browse the web or operate software environments. In these attacks, malicious instructions hidden within webpages or external content attempt to override a model’s intended behavior.

Anthropic says Sonnet 4.6 shows significant improvement in resisting such attacks compared to Sonnet 4.5, performing similarly to Opus 4.6 in internal evaluations. Strengthening these defenses is particularly important as computer-using AI systems gain the ability to interact directly with applications and online environments, where exposure to untrusted inputs becomes unavoidable.

Anthropic notes that safety safeguards remain an ongoing area of development as AI systems move from controlled testing environments into real operational workflows.

Q&A: What Claude Sonnet 4.6 Means for Developers, Enterprises, and AI Builders

Q: What is Claude Sonnet 4.6?

A: Claude Sonnet 4.6 is Anthropic’s latest Sonnet-tier AI model, designed as a full upgrade across coding, reasoning, computer use, agent planning, and knowledge work. It introduces improved reliability across multi-step workflows while maintaining the lower cost structure of the Sonnet model family.

Q: How is Sonnet 4.6 different from previous Sonnet models?

A: Anthropic focused on improving consistency rather than introducing a single new feature. Developers reported stronger instruction following, fewer hallucinations, and more dependable execution across long sessions. Internal testing showed users preferred Sonnet 4.6 over Sonnet 4.5 roughly 70% of the time and even favored it over Opus 4.5 in 59% of comparisons.

Q: What new capabilities stand out most in Sonnet 4.6?

A: Key improvements include enhanced coding performance, stronger long-context reasoning supported by a 1-million-token context window, improved computer-use abilities that allow the model to operate software interfaces, and expanded developer tools such as adaptive thinking modes and automated retrieval filtering through the API.

Q: How does Sonnet 4.6 compare to Opus models?

A: Benchmark results and enterprise testing suggest Sonnet 4.6 approaches Opus-level performance for many practical workloads while remaining significantly less expensive to run. Anthropic notes that Opus models still perform best on tasks requiring the deepest reasoning, such as large-scale codebase refactoring or highly complex coordination problems.

Q: Who should consider using Claude Sonnet 4.6?

A: The model is aimed at developers, enterprise teams, and AI product builders who need reliable reasoning and automation capabilities that can run continuously at sustainable cost levels. It is particularly suited for coding workflows, document analysis, office automation, and agent-based applications operating across existing software tools.

Q: How can developers and organizations access Sonnet 4.6?

A: Claude Sonnet 4.6 is available across all Claude plans, Claude.ai, Claude Cowork, Claude Code, the Claude API, and major cloud platforms. Developers can integrate the model using the claude-sonnet-4-6 API identifier.

What This Means: Practical Intelligence Moves Down the Cost Curve

Claude Sonnet 4.6 illustrates how advances in AI capability are increasingly arriving through models designed for sustained, real-world deployment rather than remaining limited to the most advanced and expensive AI systems.

Who should care: Developers, enterprise AI leaders, and product teams evaluating model strategy should pay attention because capabilities once associated primarily with premium frontier models are becoming economically viable for continuous use.

Why it matters now: AI adoption is moving beyond experimentation toward operational deployment. Organizations increasingly require models that can reason across large systems, operate existing software environments, and sustain long workflows without rapidly escalating costs.

What decision this affects: The decision is no longer simply which model is the most capable, but which delivers the most reliable performance per dollar. By bringing near-Opus-level capability into the Sonnet tier, Anthropic narrows the gap between experimentation and production deployment, making advanced agentic workflows more practical for everyday business operations.

The competitive frontier in AI is no longer defined only by peak intelligence, but by how much usable intelligence organizations can afford to run continuously.

Sources:

Anthropic - Claude Sonnet 4.6
https://www.anthropic.com/news/claude-sonnet-4-6

Claude Pricing - Claude API Pricing
https://claude.com/pricing#api

Anthropic - Claude Sonnet 4.6 System Card
https://www-cdn.anthropic.com/78073f739564e986ff3e28522761a7a0b4484f84.pdf

Anthropic Developer Platform - Mitigate Jailbreaks and Strengthen Guardrails
https://platform.claude.com/docs/en/test-and-evaluate/strengthen-guardrails/mitigate-jailbreaks

Artificial Analysis - GDPval-AA Evaluation Benchmark
https://artificialanalysis.ai/evaluations/gdpval-aa

Anthropic - Claude 3.5 Models and Computer Use
https://www.anthropic.com/news/3-5-models-and-computer-use

OSWorld - OSWorld Benchmark Documentation
https://os-world.github.io/

Anthropic Developer Platform - Adaptive Thinking
https://platform.claude.com/docs/en/build-with-claude/adaptive-thinking

Anthropic Developer Platform - Context Compaction
https://platform.claude.com/docs/en/build-with-claude/compaction

Anthropic Developer Platform - Memory Tool
https://platform.claude.com/docs/en/agents-and-tools/tool-use/memory-tool

Anthropic Developer Platform - Programmatic Tool Calling
https://platform.claude.com/docs/en/agents-and-tools/tool-use/programmatic-tool-calling

Anthropic Developer Platform - Tool Search Tool
https://platform.claude.com/docs/en/agents-and-tools/tool-use/tool-search-tool

Anthropic Developer Platform - Providing Tool Use Examples
https://platform.claude.com/docs/en/agents-and-tools/tool-use/implement-tool-use#providing-tool-use-examples

Claude Support - Use Claude in Excel
https://support.claude.com/en/articles/12650343-use-claude-in-excel

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.