A developer supervises multiple AI-driven workflows running in parallel, illustrating how Claude Opus 4.6 supports agent teams and long-horizon tasks. Image Source: ChatGPT-5.2

Claude Opus 4.6: Anthropic Pushes AI Toward Long-Horizon, Agentic Work

Anthropic has released Claude Opus 4.6, its most advanced AI model to date, designed for long-horizon reasoning, agentic workflows, and real-world professional work. The update introduces a 1 million-token context window (in beta) and improves the model’s ability to plan, reason, and remain reliable across extended tasks.

Anthropic describes Opus 4.6 as an upgrade focused less on faster responses and more on sustained execution. The model is intended for scenarios where AI systems must operate over time—such as large codebases, multi-source research, financial analysis, and document-heavy workflows—without losing context or accuracy.

Alongside model improvements, Anthropic introduced updates to its developer platform, agent tooling, and office integrations, while reporting expanded safety evaluations and new cybersecurity safeguards. These changes clarify how Anthropic expects advanced AI models to be used in production environments rather than isolated experiments.

Key Takeaways: Claude Opus 4.6 and Long-Horizon Agentic AI

Claude Opus 4.6 is designed for sustained, autonomous work, not just short-form responses or single-step prompts.

It introduces a 1 million-token context window, enabling long-context reasoning with reduced performance degradation.

Anthropic reports that the model leads frontier benchmarks in agentic coding, multidisciplinary reasoning, and agentic search.

Anthropic reports measurable improvements in context retention and error correction during extended workflows.

Anthropic reports low rates of misaligned behavior in its safety evaluations and has expanded its cybersecurity safeguards, even as model capabilities increase.

What Anthropic Changed in Claude Opus 4.6 — and Why It Matters

From “Smart Responses” to Sustained Agentic Work

Anthropic describes Opus 4.6 as a model that plans more deliberately and sustains focus over longer sessions. Rather than optimizing for speed alone, the model is designed to:

Break down complex tasks into stages

Revisit its own reasoning before finalizing outputs

Detect and correct mistakes during execution

Remain productive across extended interactions

This capability is especially relevant for agentic workflows, where models are expected to operate autonomously—calling tools, navigating large codebases, or synthesizing information across many documents—without constant human intervention.

In Claude Code, Anthropic’s developer-focused coding environment, this shows up as improved performance when working inside large repositories, conducting code reviews, and debugging complex systems.

Early access partners report that these changes translate into more autonomous work, improved planning, and stronger performance on complex, multi-step tasks.

“Claude Opus 4.6 is the best model we’ve tested yet. Its reasoning and planning capabilities have been exceptional at powering our AI Teammates. It’s also a fantastic coding model—its ability to navigate a large codebase and identify the right changes to make is state of the art.”

— Amritansh Raghav, Interim CTO, Asana

“Early testing shows Claude Opus 4.6 delivering on complex, multi-step coding work developers face every day—especially agentic workflows that demand planning and tool calling. This starts unlocking long-horizon tasks at the frontier.”

— Mario Rodriguez, Chief Product Officer, GitHub

“Claude Opus 4.6 excels in high-reasoning tasks like multi-source analysis across legal, financial, and technical content. Box’s evaluation showed a 10% lift in performance, reaching 68% versus a 58% baseline, and near-perfect scores in technical domains.”

— Yashodha Bhavnani, Head of AI, Box

Long Context Without Losing the Plot

A central focus of Opus 4.6 is long-context reasoning. Anthropic explicitly calls out “context rot,” the tendency of models to degrade as conversations or documents grow longer.

Opus 4.6 is designed to hold and reason over hundreds of thousands of tokens with less drift. On MRCR v2, a needle-in-a-haystack benchmark that tests whether a model can retrieve specific information hidden deep inside large texts, Opus 4.6 scored 76% on the 1M-token variant. By comparison, Claude Sonnet 4.5 scored 18.5% on the same task.

Anthropic emphasizes that the improvement is not just a larger context window, but the model’s ability to use that context while maintaining reasoning quality.
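
The general idea behind a needle-in-a-haystack test can be sketched in a few lines of Python. This is a toy harness, not MRCR v2 itself: the filler text, the planted fact, and the `toy_retriever` function (a plain string search standing in for a model call) are all assumptions made for illustration.

```python
def build_haystack(needle: str, filler: str, n_filler: int, depth: float) -> str:
    """Return n_filler filler sentences with the needle inserted at a relative depth (0.0 to 1.0)."""
    sentences = [filler] * n_filler
    position = int(depth * n_filler)
    sentences.insert(position, needle)
    return " ".join(sentences)


def toy_retriever(context: str, question_key: str) -> str:
    """Stand-in for a model call: return the sentence containing the key, or '' if none."""
    for sentence in context.split(". "):
        if question_key in sentence:
            return sentence.rstrip(".")
    return ""


def retrieval_accuracy(depths, n_filler: int = 1000) -> float:
    """Fraction of insertion depths at which the planted fact is recovered."""
    needle = "The access code for the archive is 7341."
    filler = "The committee reviewed the quarterly report."
    hits = 0
    for depth in depths:
        context = build_haystack(needle, filler, n_filler, depth)
        answer = toy_retriever(context, "access code")
        hits += "7341" in answer
    return hits / len(depths)


print(retrieval_accuracy([0.0, 0.25, 0.5, 0.75, 1.0]))
```

A string search never misses, so the toy retriever is perfect by construction. In a real evaluation the retriever would be the model under test, and accuracy would be tracked as the haystack grows toward the context limit.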

Benchmark Performance: How Claude Opus 4.6 Compares Across Frontier Evaluations

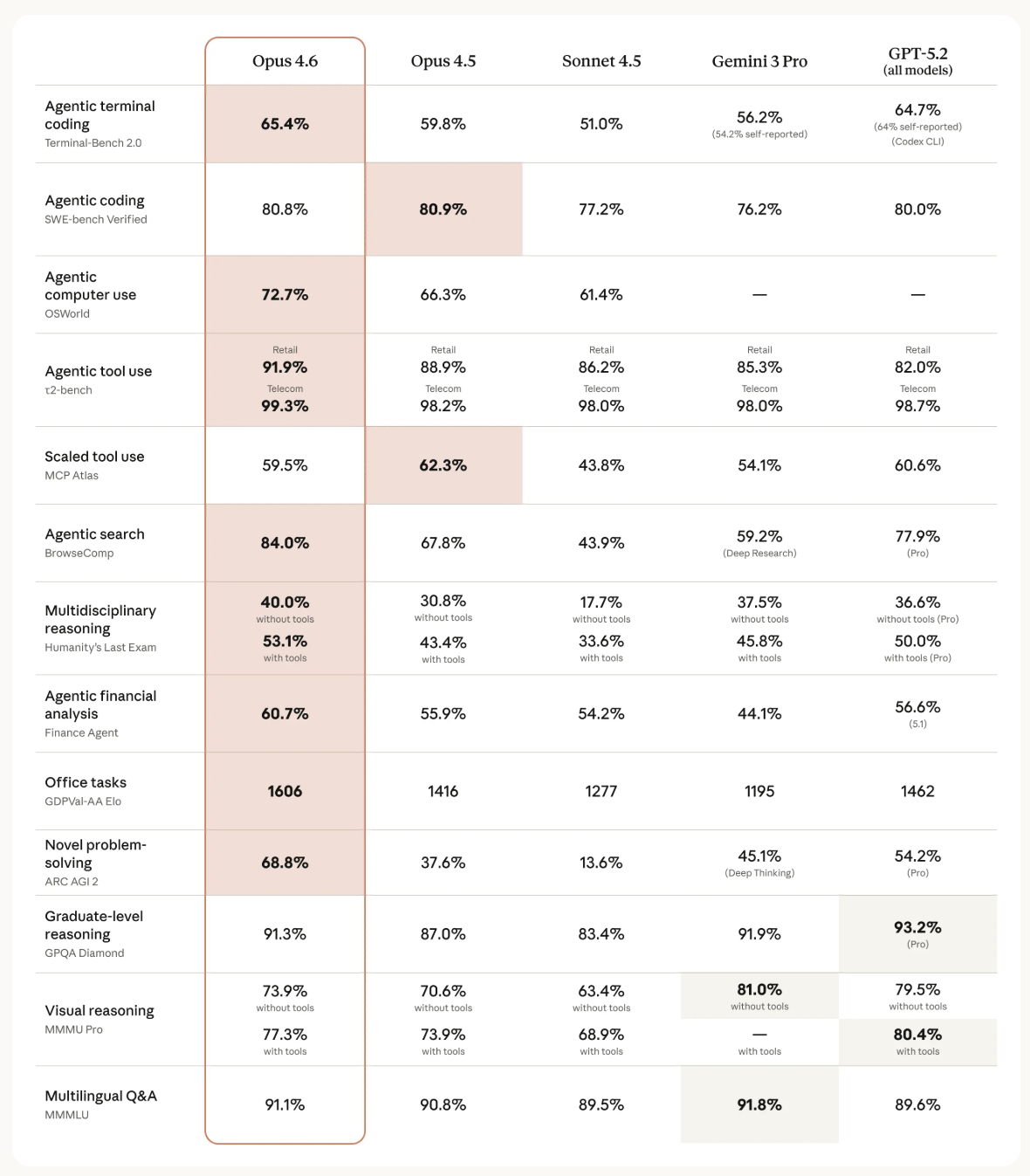

Anthropic reports state-of-the-art results across several evaluations that measure different aspects of real-world work:

Terminal-Bench 2.0 (Agentic Coding): Opus 4.6 achieves the highest reported score among frontier models, reflecting its ability to plan and execute multi-step coding tasks in realistic environments.

Humanity’s Last Exam (Multidisciplinary Reasoning): The model leads across tests that require combining knowledge from multiple domains, both with and without tools.

BrowseComp (Agentic Search): Opus 4.6 outperforms other models at locating hard-to-find information online, a key capability for research and investigative workflows.

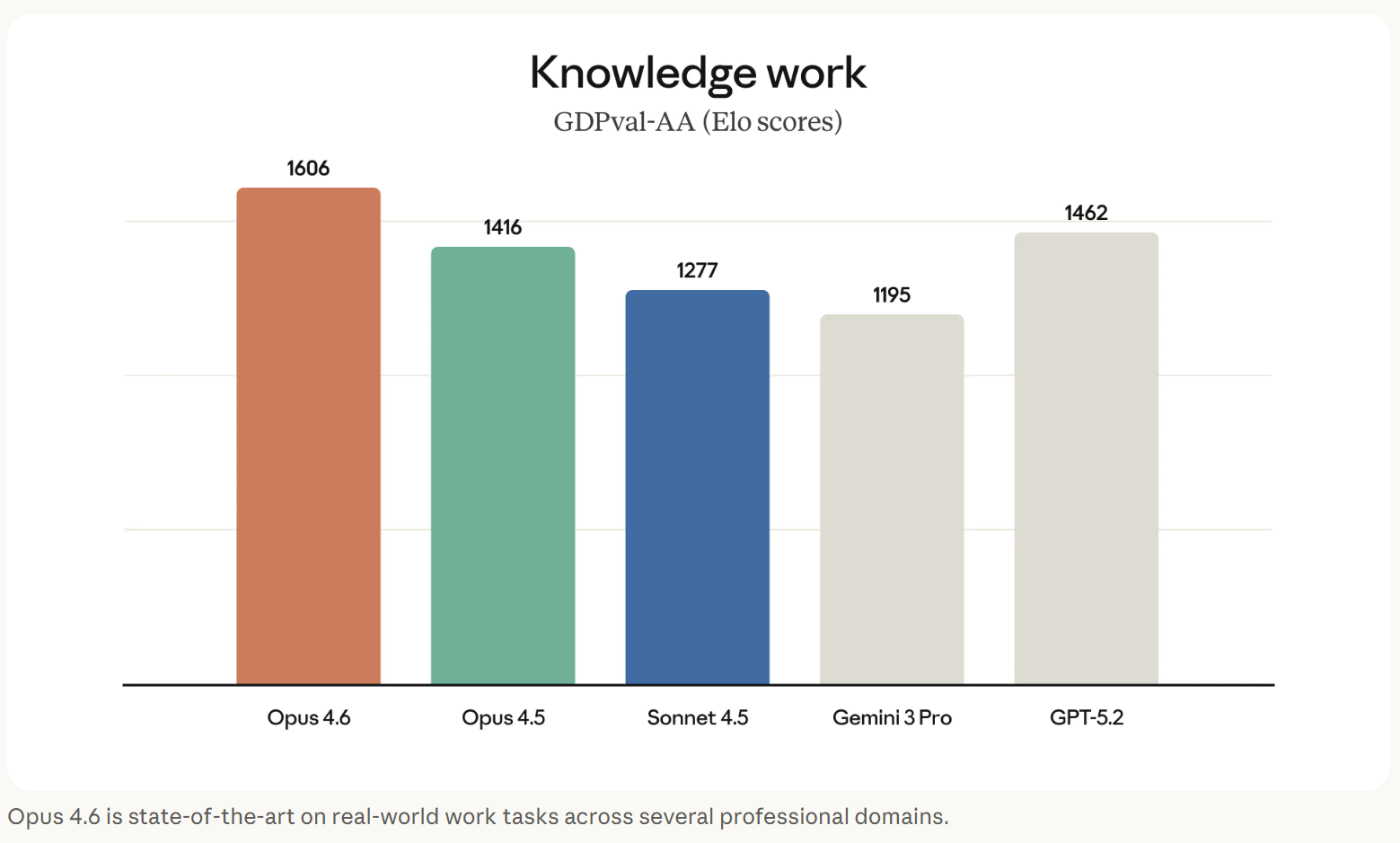

GDPval-AA (Knowledge Work): On tasks representing economically valuable work in domains like finance and law, Opus 4.6 outperforms the next-best model by roughly 144 Elo points, and its own predecessor by 190 points.

Rather than presenting these results as abstract leaderboard wins, Anthropic connects them to practical capabilities such as sustained reasoning, accurate retrieval, and decision-making after absorbing large volumes of information.
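
For readers unfamiliar with Elo ratings, the gaps reported for GDPval-AA can be translated into an approximate head-to-head preference rate. The sketch below assumes the evaluation uses the standard Elo logistic formula on a 400-point scale; that is an assumption about the scoring convention rather than something stated above, and `elo_expected_score` is a hypothetical helper name.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected score of the higher-rated side under the standard Elo logistic formula."""
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400.0))


for gap in (144, 190):
    print(f"{gap} Elo points -> expected score {elo_expected_score(gap):.3f}")
# 144 Elo points -> expected score 0.696
# 190 Elo points -> expected score 0.749
```

Under that assumption, a 144-point lead corresponds to being preferred roughly 70% of the time in a pairwise comparison, and a 190-point lead to roughly 75%.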

Beyond Coding: How Anthropic Positions Claude Opus 4.6 for Knowledge Work

While much of the attention is on software engineering, Anthropic emphasizes that Opus 4.6 is also designed for broader professional use. The model can:

Run financial analyses

Conduct multi-source research

Create and edit documents and spreadsheets

Generate and refine presentations

Within Cowork, Claude’s autonomous multitasking environment, Opus 4.6 can chain these activities together—analyzing data, summarizing findings, and producing structured outputs—without requiring constant user input.

Anthropic describes the model as a general-purpose knowledge worker rather than only a developer tool.

Anthropic Developer Platform and Product Updates for Claude Opus 4.6

API and Agent Controls

On the API, Anthropic introduced several features designed to support longer-running agentic tasks and give developers more control over reasoning depth, performance, and cost:

Adaptive thinking: Instead of forcing developers to toggle extended reasoning on or off, the model now decides when deeper reasoning is needed based on context, while still allowing developers to adjust the effort level to make that reasoning more or less selective.

Effort controls: Four levels—low, medium, high (default), and max—allow developers to trade off intelligence, latency, and cost.

Context compaction (beta): When conversations approach the context window limit, Claude can automatically summarize and replace older context, allowing longer-running agentic tasks to continue without interruption.

Expanded outputs: Opus 4.6 supports outputs of up to 128k tokens, allowing larger-output tasks to complete without breaking them into multiple requests.

US-only inference: Available for workloads that need to run within the United States, with token pricing at 1.1× the standard rate.

1M token context (beta): Opus 4.6 is Anthropic’s first Opus-class model to support a 1 million-token context window. Premium pricing applies for prompts exceeding 200k tokens, at $10 per million input tokens and $37.50 per million output tokens.
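
The idea behind context compaction can be illustrated with a small client-side sketch. This is not Anthropic's implementation or API: `estimate_tokens`, `compact_history`, the 80% threshold, and the `summarize` callback are hypothetical names and values chosen only to show the pattern of replacing older turns with a summary as a conversation nears its limit.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    """Very rough estimate (about 4 characters per token); an assumption, not a real tokenizer."""
    return max(1, len(text) // 4)


def compact_history(
    messages: List[Message],
    limit: int,
    summarize: Callable[[List[Message]], str],
    keep_recent: int = 2,
    threshold: float = 0.8,
) -> List[Message]:
    """Replace older messages with a single summary once usage reaches threshold * limit."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    used = sum(estimate_tokens(m["content"]) for m in messages)
    if used < threshold * limit or len(messages) <= keep_recent:
        return messages  # still comfortably under the limit; nothing to do
    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    summary = {"role": "user", "content": "Summary of earlier context: " + summarize(older)}
    return [summary] + recent


def naive_summary(older: List[Message]) -> str:
    """Placeholder summarizer; a real system would ask a model to write the summary."""
    return f"{len(older)} earlier messages omitted"


history = [{"role": "user", "content": "x" * 400} for _ in range(10)]
compacted = compact_history(history, limit=1000, summarize=naive_summary)
print(len(compacted))  # 3
```

In this example the ten messages come to about 1,000 estimated tokens, which crosses the 80% threshold, so the eight oldest are collapsed into one summary message and the two most recent are kept verbatim.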

Anthropic also introduced agent teams in Claude Code as a research preview, allowing multiple Claude agents to operate in parallel on independent parts of a task, such as read-heavy codebase reviews. According to the company, these agents can coordinate autonomously, and developers can take direct control of any subagent using Shift+Up/Down or terminal tools such as tmux. This supports longer, more complex workflows without requiring constant human coordination.
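
The fan-out pattern described here, where independent read-heavy tasks are handed to parallel workers and the results gathered afterward, can be sketched with Python's standard library. This is an analogy only and does not use Claude Code or any Anthropic API: `review_module` is a hypothetical stand-in for a subagent, and the module contents are made up for the example.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def review_module(name: str, source: str) -> Dict[str, object]:
    """Stand-in for one subagent: a read-only pass that collects TODO markers in a module."""
    todos = [line.strip() for line in source.splitlines() if "TODO" in line]
    return {"module": name, "todo_count": len(todos), "todos": todos}


def run_team(modules: Dict[str, str], max_workers: int = 4) -> List[Dict[str, object]]:
    """Fan independent review tasks out to parallel workers, then gather results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(review_module, name, src) for name, src in modules.items()]
        return [future.result() for future in futures]


modules = {
    "auth.py": "def login():\n    pass  # TODO: rate limiting\n",
    "billing.py": "def charge():\n    return 0\n",
    "search.py": "# TODO: pagination\n# TODO: caching\n",
}
reports = run_team(modules)
print([(r["module"], r["todo_count"]) for r in reports])
# [('auth.py', 1), ('billing.py', 0), ('search.py', 2)]
```

The pattern works because the tasks share no state: each worker only reads its own input, so results can be combined without coordination between workers.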

Office and Productivity Tools

Anthropic also expanded Claude’s integration with office tools to help workers with their everyday tasks:

Claude in Excel now handles longer-running and more complex tasks with improved performance, can plan before acting, ingest unstructured data and infer the appropriate structure without guidance, and execute multi-step changes in a single pass.

Claude in PowerPoint, released in research preview for Max, Team, and Enterprise plans, can generate slides that respect layouts, fonts, and slide masters—allowing users to move from data analysis to presentation without leaving Claude.

Safety and Alignment in Claude Opus 4.6: Evaluations and Safeguards

Anthropic reports that Opus 4.6 maintains an overall safety profile equal to or better than that of other frontier models. In automated behavioral audits, the model showed low rates of:

Deception

Sycophancy

Encouragement of user delusions

Cooperation with misuse

Anthropic also reports that the model was less likely than recent Claude models to refuse harmless requests that it could safely answer.

For Claude Opus 4.6, Anthropic reports running its most comprehensive set of safety evaluations to date. According to the company, this included introducing new tests, upgrading existing evaluations, and expanding assessments focused on user wellbeing. Anthropic also conducted more complex testing of the model’s ability to refuse potentially dangerous requests, as well as updated evaluations designed to detect whether the model could perform harmful actions without explicit user prompting.

Anthropic says it also applied new interpretability techniques—methods used to study how AI models arrive at their outputs—to better understand why the model behaves in certain ways and to identify potential issues that standard testing might miss. A detailed description of these capability and safety evaluations is provided in the Claude Opus 4.6 system card.

Because Opus 4.6 demonstrates stronger cybersecurity capabilities, Anthropic introduced six new cybersecurity probes to help detect and monitor potential misuse. The company also describes using the model for defensive cybersecurity applications, including identifying and patching vulnerabilities in open-source software. Anthropic notes that safeguards will continue to be updated as threats evolve, and that additional measures, including real-time interventions to block abuse, may be introduced in the future.

Claude Opus 4.6 Availability and Pricing

Claude Opus 4.6 is available today via claude.ai, the Claude API, and major cloud platforms. Pricing remains unchanged at $5 per million input tokens and $25 per million output tokens, with premium pricing for extended context usage beyond 200k tokens.
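
The prices quoted in this article can be combined into a rough cost estimator. The sketch below is illustrative only. It assumes that a prompt over 200k tokens moves the whole request to the premium rates, and that the 1.1× US-only multiplier applies on top of whichever rate is in effect; neither detail is spelled out above. `estimate_cost` is a hypothetical helper, not part of any Anthropic SDK.

```python
STANDARD = {"input": 5.00, "output": 25.00}   # USD per million tokens
PREMIUM = {"input": 10.00, "output": 37.50}   # USD per million tokens, prompts over 200k
LONG_CONTEXT_THRESHOLD = 200_000              # prompt size above which premium pricing applies
US_ONLY_MULTIPLIER = 1.1                      # surcharge for US-only inference


def estimate_cost(input_tokens: int, output_tokens: int, us_only: bool = False) -> float:
    """Estimate the USD cost of one request from the list prices quoted in the article."""
    # Assumption: exceeding the threshold bills the entire request at premium rates.
    rates = PREMIUM if input_tokens > LONG_CONTEXT_THRESHOLD else STANDARD
    cost = (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000
    if us_only:
        cost *= US_ONLY_MULTIPLIER
    return round(cost, 4)


print(estimate_cost(100_000, 10_000))                # 0.75
print(estimate_cost(500_000, 10_000))                # 5.375
print(estimate_cost(100_000, 10_000, us_only=True))  # 0.825
```

Under these assumptions, a 500k-token prompt with a 10k-token reply costs about seven times as much as a 100k-token prompt with the same reply, because the prompt is five times larger and the input rate doubles.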

Q&A: Claude Opus 4.6 Explained

Q: What is Claude Opus 4.6?

A: Claude Opus 4.6 is Anthropic’s most capable AI model, designed for long-running, agentic tasks such as software development, research, financial analysis, and document-heavy workflows.

Q: What makes Opus 4.6 different from previous Claude models?

A: Opus 4.6 improves planning, long-context reasoning, and error correction, and introduces a 1 million-token context window for the first time in the Opus family.

Q: Why does the 1M token context window matter?

A: It allows the model to reason over very large documents and extended conversations while retaining accuracy, reducing a common failure mode known as “context rot.”

Q: How does Opus 4.6 perform compared to other frontier models?

A: Anthropic reports that Opus 4.6 leads several benchmarks, including agentic coding, multidisciplinary reasoning, agentic search, and economically valuable knowledge work tasks.

Q: Is Claude Opus 4.6 focused only on developers?

A: No. While it performs strongly in coding tasks, Anthropic describes Opus 4.6 as a general-purpose knowledge worker for research, finance, documents, spreadsheets, and presentations.

Q: What safety measures are included?

A: Anthropic reports low rates of misaligned behavior, expanded cybersecurity evaluations, and new safeguards designed to monitor and prevent misuse as capabilities increase.

What This Means: From Long-Context Models to Long-Horizon Work

As AI models become more capable, the challenge is no longer whether they can generate answers—it’s whether they can sustain reasoning, planning, and execution across time. Claude Opus 4.6 reflects Anthropic’s move toward AI systems that operate reliably inside extended workflows rather than isolated prompts.

Who should care:

Enterprise leaders, engineering teams, and developers responsible for deploying AI systems in production—particularly those managing long-running workflows, large codebases, regulated data, or multi-step decision processes where reliability and oversight are critical.

Why it matters now:

As organizations move AI into operational roles, weaknesses in memory, context retention, and sustained reasoning surface quickly. Models that lose coherence over time or require constant human correction introduce compounding risk, increased costs, and workflow instability.

What decision this affects:

Whether AI is deployed as a collection of isolated task helpers or trusted to carry out extended, multi-step work across tools, documents, and environments with predictable behavior and human supervision.

How organizations answer that question will shape how confidently AI can be integrated into core business processes, and whether it becomes embedded in everyday professional operations.

Sources:

Anthropic. Claude Opus 4.6 Announcement

https://www.anthropic.com/news/claude-opus-4-6

Anthropic. Cowork Research Preview

https://claude.com/blog/cowork-research-preview

Terminal-Bench. Terminal-Bench 2.0 Announcement

https://www.tbench.ai/news/announcement-2-0

Center for AI Safety. Humanity’s Last Exam

https://agi.safe.ai/

Artificial Analysis. GDPval-AA Evaluation

https://artificialanalysis.ai/evaluations/gdpval-aa

OpenAI. BrowseComp Benchmark

https://openai.com/index/browsecomp/

Hugging Face. MRCR Dataset (Needle-in-a-Haystack Benchmark)

https://huggingface.co/datasets/openai/mrcr

Anthropic. Claude Opus 4.6 System Card

https://www.anthropic.com/claude-opus-4-6-system-card

Anthropic. Claude Code: Agent Teams Documentation

https://code.claude.com/docs/en/agent-teams

Anthropic. Context Compaction Documentation

https://platform.claude.com/docs/en/build-with-claude/compaction

Anthropic. Adaptive Thinking Documentation

https://platform.claude.com/docs/en/build-with-claude/adaptive-thinking

Anthropic. Effort Controls Documentation

https://platform.claude.com/docs/en/build-with-claude/effort

Anthropic. Claude in Excel

https://claude.com/claude-in-excel

Anthropic. Claude in PowerPoint

https://claude.com/claude-in-powerpoint

Anthropic. Claude Models Overview

https://platform.claude.com/docs/en/about-claude/models/overview

Anthropic. Claude API Pricing

https://claude.com/pricing#api

Anthropic Engineering. Effective Context Engineering for AI Agents

https://www.anthropic.com/engineering/effective-context-engineering-for-ai-agents

Anthropic Research. Next-Generation Constitutional Classifiers

https://www.anthropic.com/research/next-generation-constitutional-classifiers

Anthropic RED Team. Zero-Days and Cybersecurity Research

https://red.anthropic.com/2026/zero-days/

Anthropic. Data Residency and US-Only Inference

https://platform.claude.com/docs/en/build-with-claude/data-residency

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.