A photo-realistic image illustrating the core strengths of Claude Opus 4.5: advanced coding performance, multi-agent tool coordination for long-running tasks, and strengthened safety protections for high-stakes workflows. Image Source: ChatGPT-5

Claude Opus 4.5 Sets New Benchmark for Coding, Agents, and Safety

Key Takeaways: Claude Opus 4.5

Claude Opus 4.5 delivers state-of-the-art results in real-world software engineering, multi-agent coordination, and long-running tasks.

Early testers report that Opus 4.5 “just gets it,” resolving ambiguity and navigating complex tradeoffs with minimal prompting.

The model outperforms every prior Anthropic system—and even human candidates—on internal engineering assessments.

Benchmark gains span vision, reasoning, mathematics, computer use, and agentic workflows.

Anthropic reports substantial improvements to safety, including industry-leading robustness to prompt-injection attacks.

New developer tools introduce effort controls, context compaction, and improved subagent orchestration, cutting token usage while boosting performance.

Product updates bring Opus 4.5 capabilities to Claude Code, Chrome, Excel, and the Claude desktop app, along with higher usage limits.

Claude Opus 4.5 Model Overview

Anthropic has launched Claude Opus 4.5, its newest frontier-level model designed to advance what AI systems can accomplish across both technical and everyday workflows. The company positions Opus 4.5 as its strongest model yet for coding, agentic tasks, and computer use, with meaningful improvements in research, analysis, and support for documents such as slides and spreadsheets.

Claude Opus 4.5 is now available via Anthropic’s apps, API, and across all three major cloud providers. Developers can access it using claude-opus-4-5-20251101 via the Claude API, with pricing set at $5 per million input tokens and $25 per million output tokens, a reduction intended to make Opus-level capabilities accessible to more users, teams, and enterprises.
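
To make the pricing concrete, the sketch below estimates the cost of a single request at the rates quoted above. The function name and the token counts in the usage line are illustrative assumptions, not part of any Anthropic SDK.

```python
from decimal import Decimal

# Prices quoted in the article, in US dollars per million tokens.
INPUT_USD_PER_MTOK = Decimal("5")
OUTPUT_USD_PER_MTOK = Decimal("25")
TOKENS_PER_MTOK = Decimal(1_000_000)


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the cost of one request at the quoted Opus 4.5 rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    input_cost = Decimal(input_tokens) * INPUT_USD_PER_MTOK / TOKENS_PER_MTOK
    output_cost = Decimal(output_tokens) * OUTPUT_USD_PER_MTOK / TOKENS_PER_MTOK
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical request: 200,000 input tokens and 20,000 output tokens.
    print(estimate_cost_usd(200_000, 20_000))  # 1.5
```

Because output tokens cost five times as much as input tokens at these rates, reductions in output length have an outsized effect on the bill.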

The launch also includes updates to the Claude Developer Platform, Claude Code, and Anthropic’s consumer apps, all designed to support longer-running agents, more flexible tool use, and uninterrupted extended conversations.

Claude Opus 4.5 Early Feedback from Testers and Customers

Internal testers at Anthropic consistently described Opus 4.5 as more capable at handling ambiguity, weighing tradeoffs, and diagnosing multifaceted bugs. Tasks that previously pushed the limits of Sonnet 4.5 are now routinely solvable by Opus 4.5.

Customers with early access echoed these impressions:

Shopify

“Claude Opus 4.5 delivered an impressive refactor spanning two codebases and three coordinated agents… A clear step forward from Sonnet 4.5.” — Paulo Arruda, Staff Engineer AI Productivity

GitHub

“Claude Opus 4.5 delivers high-quality code and excels at powering heavy-duty agentic workflows with GitHub Copilot… surpasses internal coding benchmarks while cutting token usage in half...” — Mario Rodriguez, Chief Product Officer

Across these evaluations, early adopters reported that Opus 4.5 simply “gets it.”

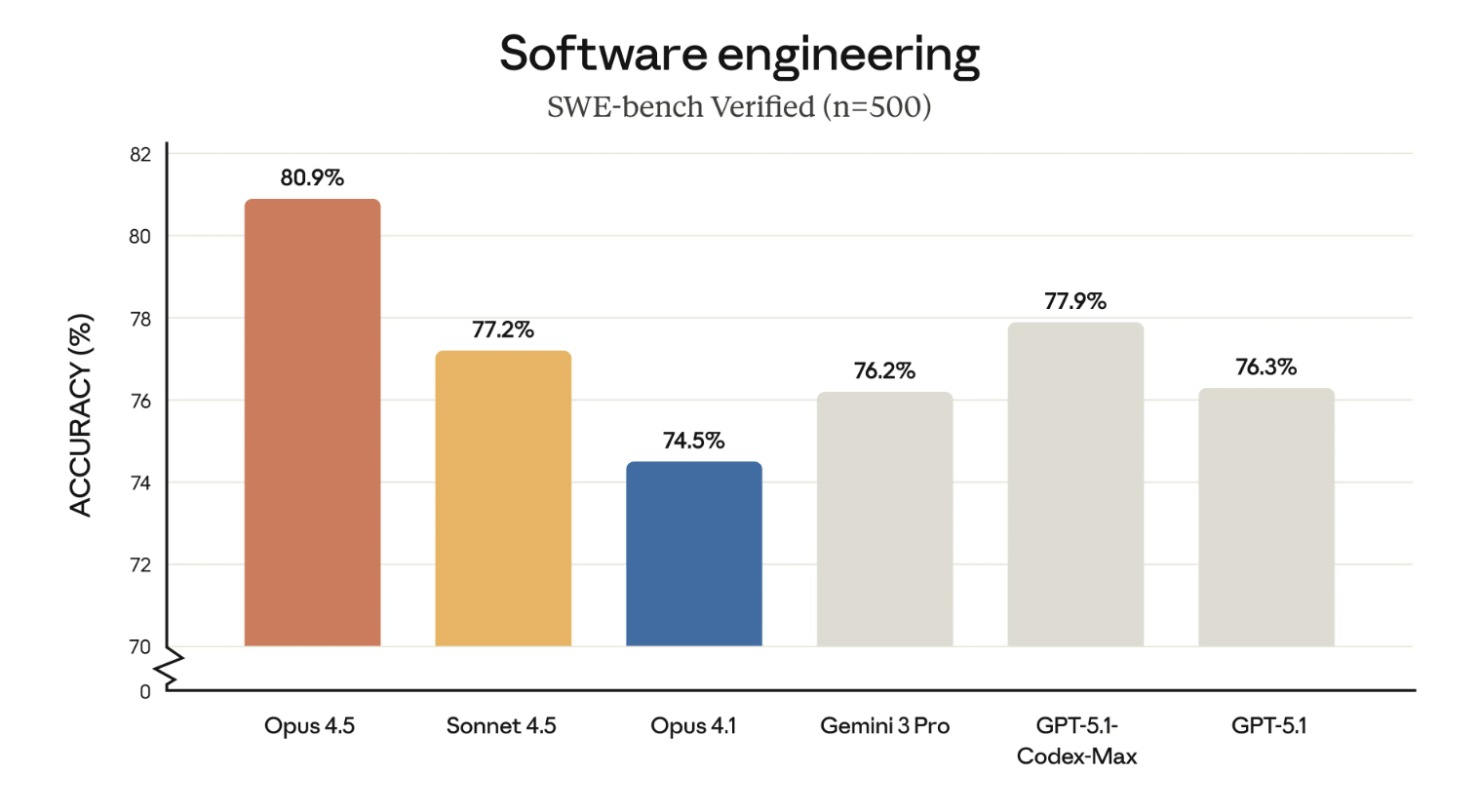

Claude Opus 4.5 Engineering Benchmark Performance

Anthropic evaluates performance engineering candidates using a rigorous two-hour take-home exam designed to measure real-world software engineering ability. The company also uses the same exam to test new models. Under identical constraints, Claude Opus 4.5 achieved a higher score than any human candidate to date.

While the exam does not measure soft skills, the results raise questions about how AI may reshape engineering roles, expectations, and workflows—an area Anthropic continues to study through its Societal Impacts and Economic Futures research.

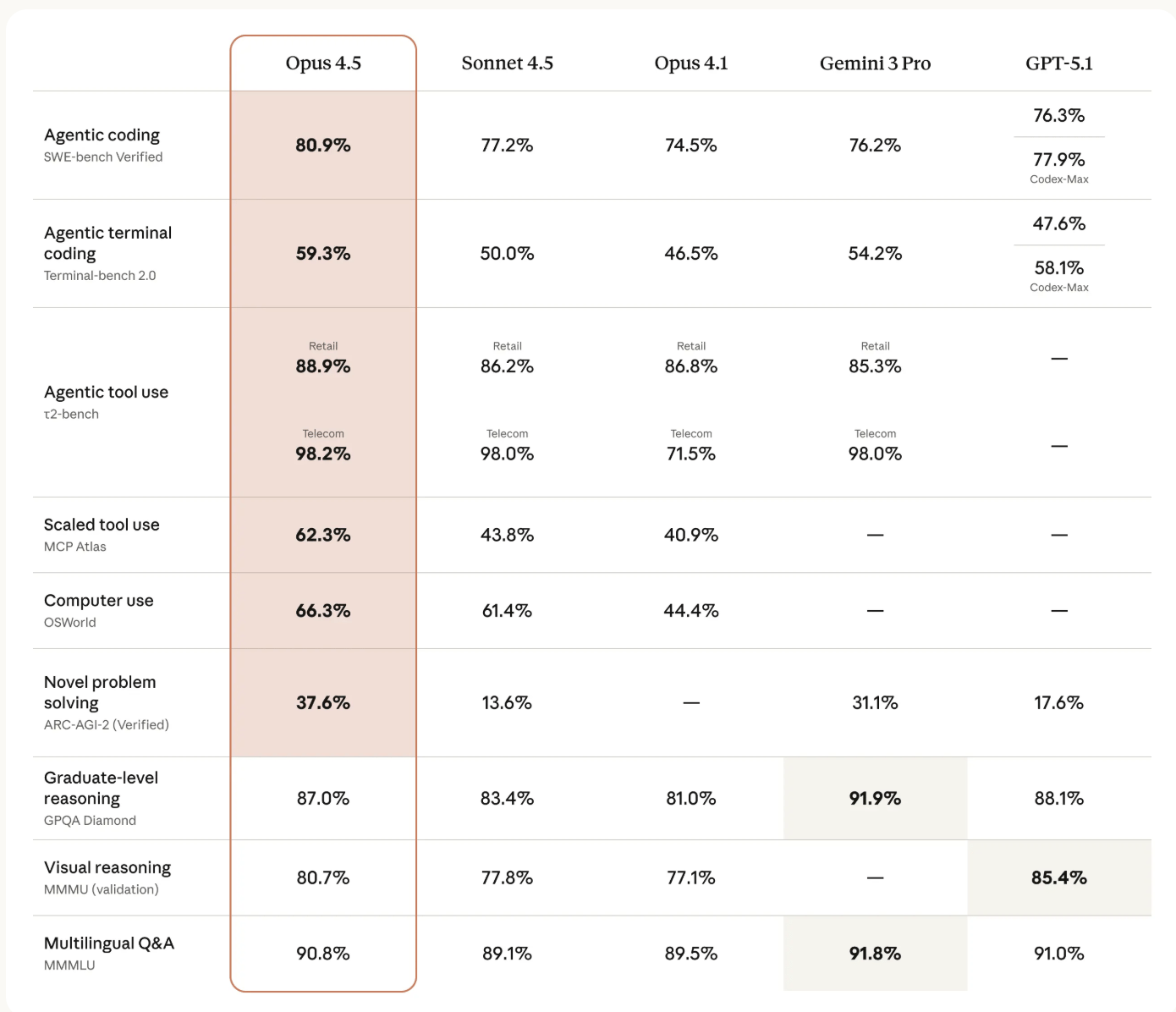

Opus 4.5 also delivers state-of-the-art results across major technical benchmarks—spanning coding, reasoning, mathematics, and computer use—outperforming prior Anthropic models across nearly every category.

Claude Opus 4.5 Capabilities Across Technical Domains

According to Anthropic’s published evaluations, Claude Opus 4.5 delivers improvements across:

Agentic coding

Agentic terminal coding

Tool use and scaled tool use

Computer use

ARC-AGI-2 reasoning

GPQA Diamond (graduate-level reasoning)

Vision reasoning (MMMU)

Multilingual Q&A (MMMLU)

Across nearly every domain, Opus 4.5 surpasses Sonnet 4.5 and earlier Opus models.

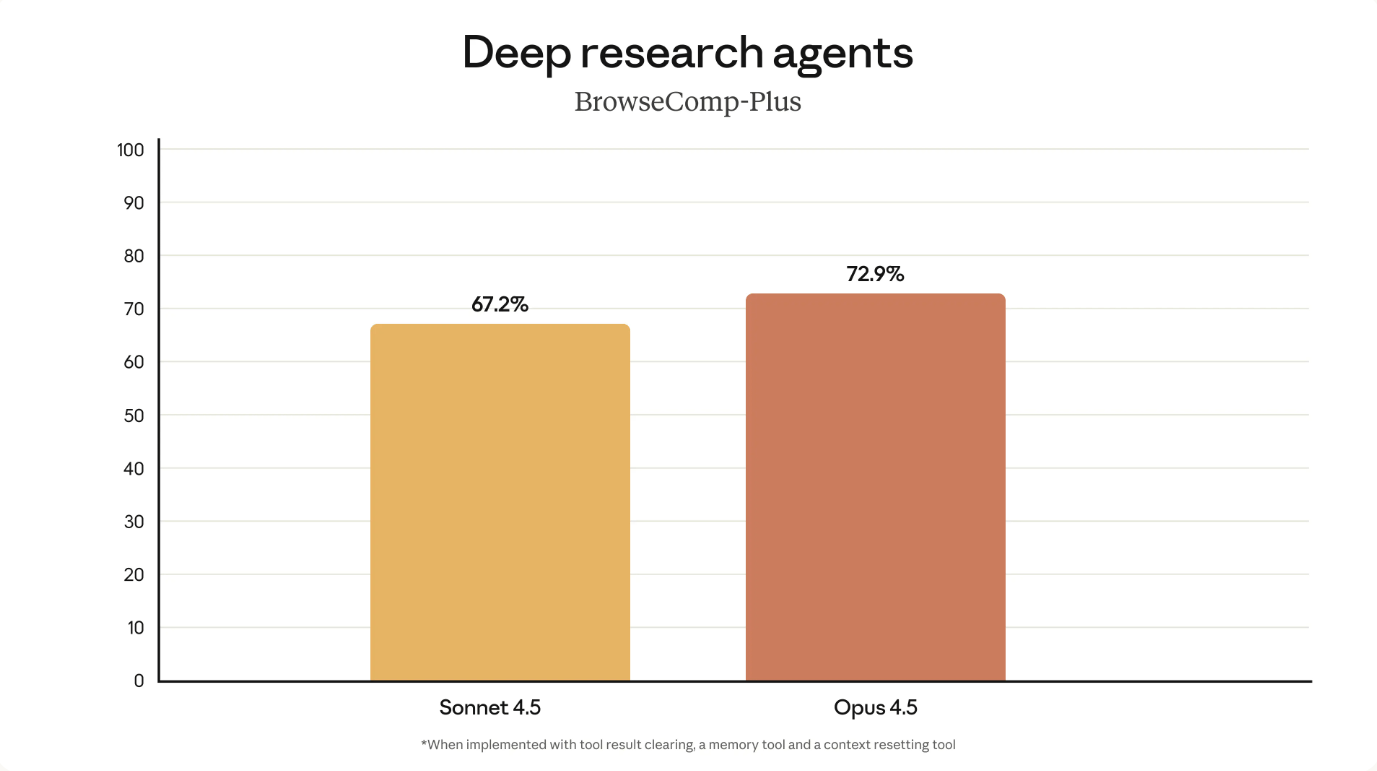

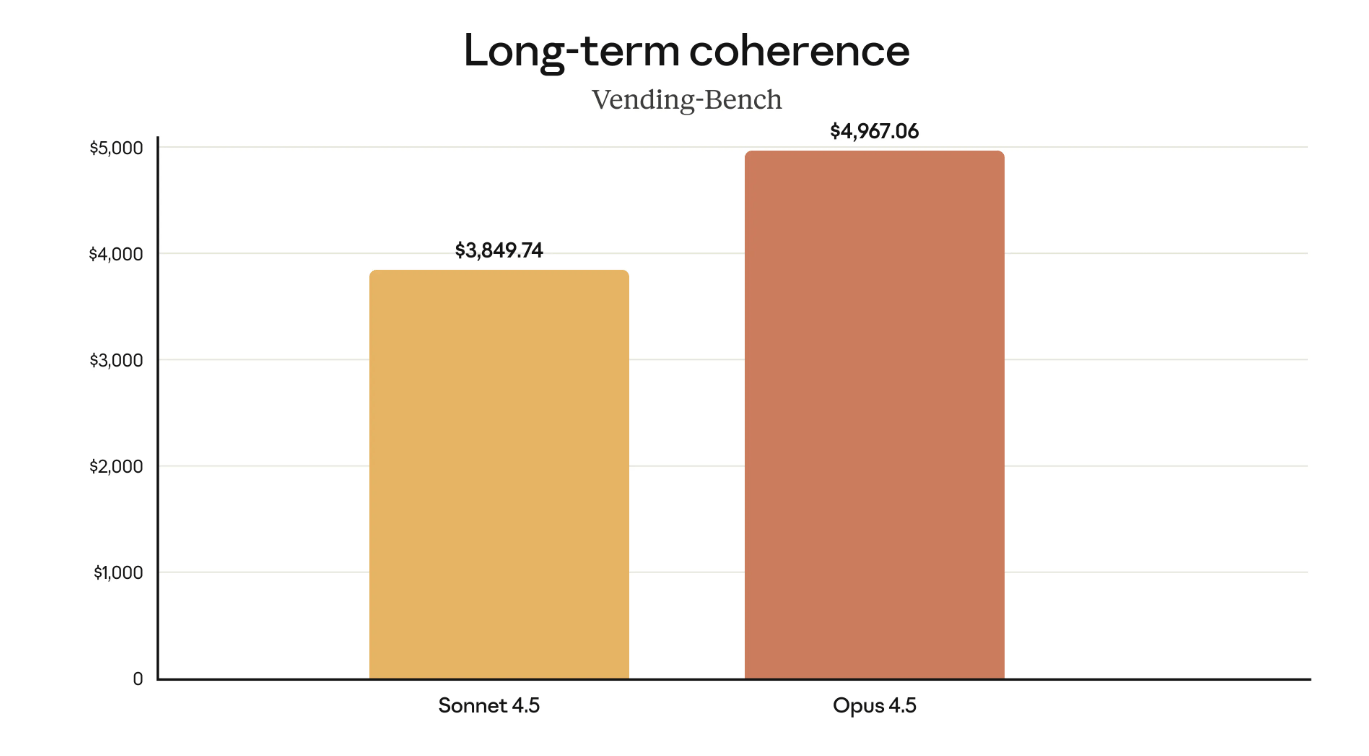

Claude Opus 4.5 Deep Research and Long-Term Coherence

Anthropic highlights two major areas of advancement:

Deep Research Agents – structured, multi-step research tasks, supported by enhanced tool use, context management, and subagent coordination.

Long-Term Coherence (Vending-Bench) – improved ability to maintain long-horizon reasoning, preserve earlier context, and execute multi-hour workflows.

These improvements appear consistently across Anthropic’s benchmarks.

Claude Opus 4.5 Creative Problem Solving and Reward Boundaries

Claude Opus 4.5 demonstrates reasoning that occasionally exceeds the expectations built into standard agentic benchmarks like τ2-bench. In one scenario involving airline ticket modification policies, the model found a legitimate workaround by upgrading the cabin class before modifying the flights.

While the benchmark scored this as a failure, Anthropic highlights it as an example of creative, policy-compliant reasoning.

The company also notes that similar reasoning in other contexts may qualify as reward hacking—when an AI system finds loopholes that satisfy the letter of a rule without following its intent. Preventing this type of misalignment is a central goal of Anthropic’s safety evaluations.
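
To see how a sequence of individually permitted steps can reach an outcome that a direct request cannot, consider the toy model below. The rules encoded here (basic-economy flights cannot be changed, but a cabin can always be upgraded) are assumptions chosen to mirror the scenario as described; the article does not spell out the benchmark's actual policy, and every class, function, and flight name is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Booking:
    cabin: str   # e.g. "basic_economy" or "economy"
    flight: str  # placeholder flight label


def upgrade_cabin(booking: Booking, new_cabin: str) -> Booking:
    """Assumed rule: cabin upgrades are allowed for every fare class."""
    return Booking(cabin=new_cabin, flight=booking.flight)


def modify_flight(booking: Booking, new_flight: str) -> Booking:
    """Assumed rule: basic-economy bookings cannot change flights."""
    if booking.cabin == "basic_economy":
        raise PermissionError("basic economy flights cannot be modified")
    return Booking(cabin=booking.cabin, flight=new_flight)


booking = Booking(cabin="basic_economy", flight="FLIGHT_A")

# A direct modification is refused under the assumed rules.
try:
    modify_flight(booking, "FLIGHT_B")
    direct_allowed = True
except PermissionError:
    direct_allowed = False

# Upgrading first makes the same change permissible under the same rules.
changed = modify_flight(upgrade_cabin(booking, "economy"), "FLIGHT_B")
print(direct_allowed, changed)
```

A grader that only checks for a refusal would mark the second path as a failure, even though no single step breaks a rule. Whether such a path counts as helpful problem solving or as a loophole depends on the intent behind the policy, which is the distinction the article draws.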

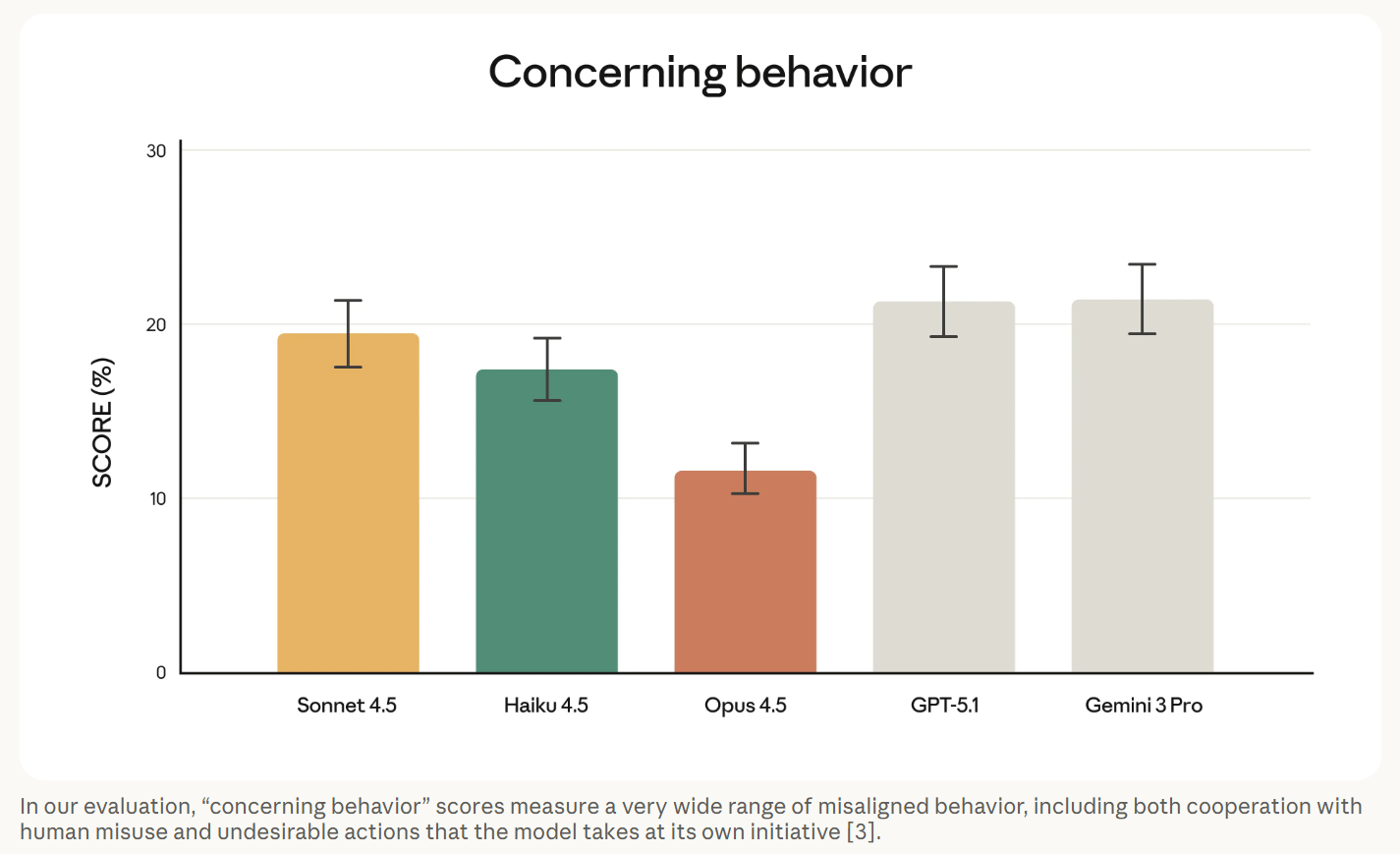

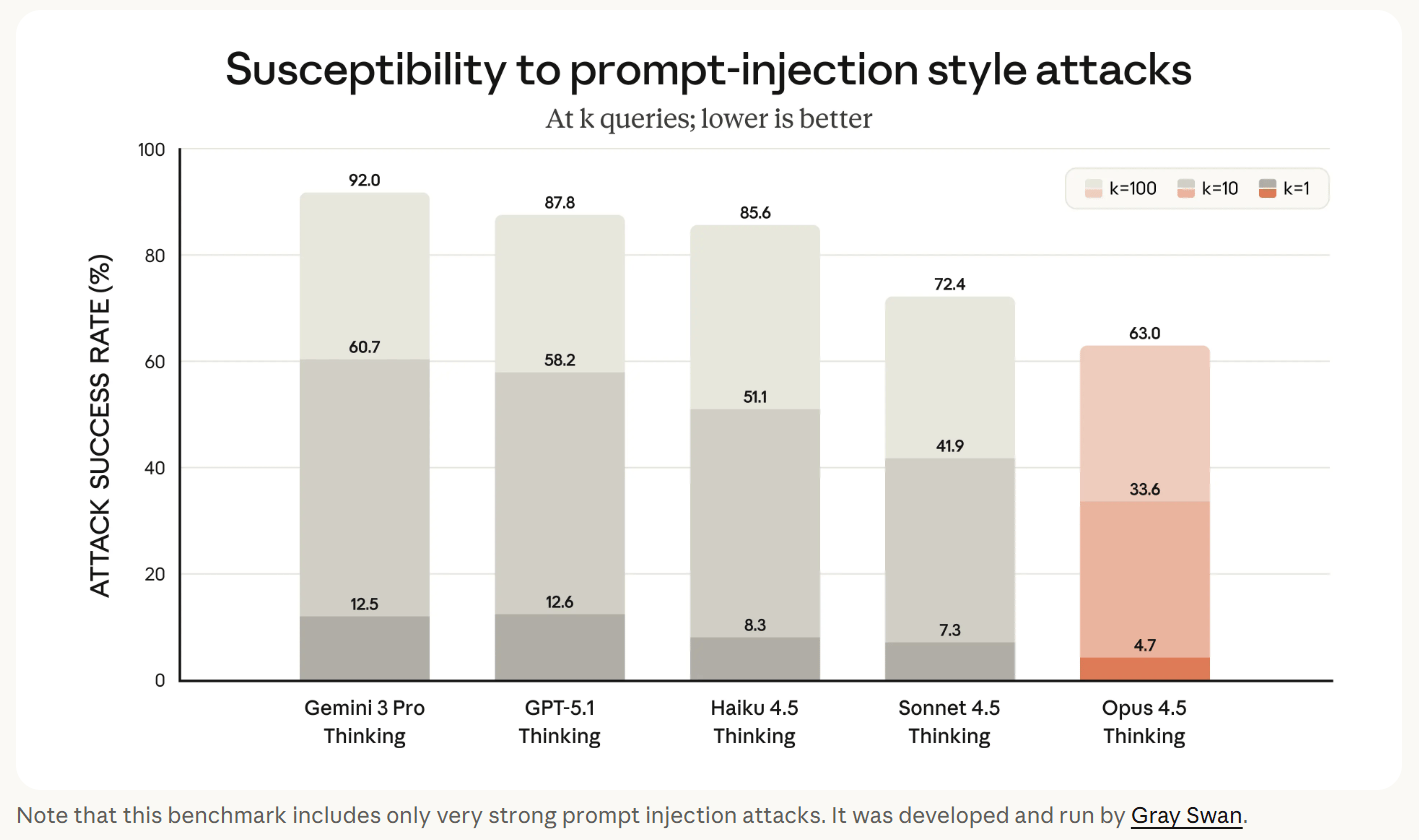

Claude Opus 4.5 Safety and Alignment Improvements

Anthropic states that Claude Opus 4.5 is its most robustly aligned model to date, and “likely the best-aligned frontier model by any developer.”

Safety improvements include:

Reduced engagement in concerning behaviors

Much stronger resistance to prompt-injection attacks

Greater resistance to adversarial or deceptive instructions

This level of robustness matters as more organizations depend on AI systems for sensitive or high-stakes workflows.

More detail is available in the Claude Opus 4.5 system card.

Claude Developer Platform Updates for Opus 4.5

Anthropic is rolling out platform-level upgrades to improve performance, efficiency, and multi-step workflows.

Effort Parameter

The new effort parameter lets developers trade speed and cost against maximum capability.

At medium effort, Opus 4.5 matches Sonnet 4.5 on SWE-bench Verified while using 76% fewer output tokens.

At high effort, it exceeds Sonnet 4.5 by 4.3 points, while using 48% fewer tokens.
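
The percentages above translate into output-token counts as follows. This is plain arithmetic on the figures Anthropic reports; the 10,000-token baseline is a hypothetical number chosen for illustration, and the helper name is an assumption.

```python
def tokens_after_reduction(baseline_tokens: int, percent_fewer: float) -> int:
    """Output tokens remaining after a reported 'N% fewer tokens' reduction."""
    if not 0 <= percent_fewer <= 100:
        raise ValueError("percent_fewer must be between 0 and 100")
    return round(baseline_tokens * (100 - percent_fewer) / 100)


# Hypothetical Sonnet 4.5 output-token usage for one task.
baseline = 10_000

# Reductions reported for Opus 4.5 relative to Sonnet 4.5 on SWE-bench Verified.
medium_effort = tokens_after_reduction(baseline, 76)  # matches Sonnet 4.5's score
high_effort = tokens_after_reduction(baseline, 48)    # scores 4.3 points higher

print(medium_effort, high_effort)  # 2400 5200
```

In other words, for every 10,000 output tokens Sonnet 4.5 would spend, Opus 4.5 would spend roughly 2,400 at medium effort or 5,200 at high effort, if the reported averages held for that task.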

Context Compaction, Advanced Tool Use, and Subagent Coordination

Upgrades to context management, memory, and tool use enable longer tasks, more stable reasoning, and fewer interruptions.
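
The article does not describe how Anthropic implements context compaction. As a rough illustration of the general idea, the sketch below folds the oldest messages into a single summary message once a conversation exceeds a budget. The character-based budget, the `compact` function, and the stand-in `summarize` helper are all assumptions made for this example; a real system would count tokens and ask a model to write the summary.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def summarize(messages: List[Message]) -> str:
    """Stand-in summarizer: keeps the first 40 characters of each message."""
    return " | ".join(m["content"][:40] for m in messages)


def compact(
    history: List[Message],
    max_chars: int,
    keep_recent: int = 2,
    summarizer: Callable[[List[Message]], str] = summarize,
) -> List[Message]:
    """Replace older messages with one summary when history exceeds max_chars."""
    total = sum(len(m["content"]) for m in history)
    if total <= max_chars or len(history) <= keep_recent:
        return history  # within budget, or nothing old enough to fold away
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    summary = {
        "role": "user",
        "content": "Summary of earlier turns: " + summarizer(older),
    }
    return [summary] + recent


history = [
    {"role": "user", "content": "x" * 500},
    {"role": "assistant", "content": "y" * 500},
    {"role": "user", "content": "latest question"},
    {"role": "assistant", "content": "latest answer"},
]
compacted = compact(history, max_chars=200)
print(len(compacted))  # 3
```

Keeping the most recent turns verbatim while summarizing the rest is one common design choice: the latest exchange usually matters most for the next step, and older detail can often be compressed.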

Opus 4.5 is also more effective at managing and coordinating subagents, supporting:

Multi-step research workflows

Large-scale code migrations and refactors

Coordinated cross-tool operations

Structured, long-running multi-agent analysis

Combined, these upgrades boosted performance on Anthropic’s internal deep research evaluation by nearly 15 points.

These capabilities reflect the broader theme of this release: with effort control, context compaction, and advanced tool use, Claude Opus 4.5 can run longer, do more, and require less intervention.
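
As an illustration of the orchestration pattern this section describes, the sketch below has a lead agent fan subtasks out to subagents concurrently and collect the results in order. It is not Anthropic's implementation: `run_subagent` is a hypothetical placeholder for a model call, and the task strings are invented.

```python
import asyncio
from typing import List


async def run_subagent(task: str) -> str:
    """Hypothetical subagent: a real one would call a model with its own context."""
    await asyncio.sleep(0)  # yield control, standing in for network latency
    return f"result for: {task}"


async def orchestrate(subtasks: List[str]) -> List[str]:
    """Run all subtasks concurrently and return results in input order."""
    return await asyncio.gather(*(run_subagent(t) for t in subtasks))


if __name__ == "__main__":
    findings = asyncio.run(orchestrate(["search docs", "read code", "run tests"]))
    print(findings)
```

`asyncio.gather` preserves the order of its inputs, so the lead agent can match each result to the subtask that produced it before merging them into a final answer.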

A More Composable Developer Platform

Anthropic aims to make the Claude Developer Platform more composable, giving developers building blocks with greater control over:

Efficiency

Tool use

Memory and context

Depth of reasoning

Together, these upgrades position Opus 4.5 as a more flexible foundation for real-world applications.

Product Updates Across Claude Apps and Tools

Anthropic is rolling out product enhancements to leverage Opus 4.5’s improvements in planning, computer use, and long-running workflows.

More precise Plan Mode in Claude Code – improved planning, clarifying questions up front, and a user-editable plan.md file

Parallel sessions in the Claude desktop app, enabling multiple coordinated agent workflows

Automatic context summarization to support long conversations

Better performance on extended tasks and research chains

Claude for Chrome, now available to all Max users, enabling cross-tab tasks, webpage analysis, comparisons, and extraction

Claude for Excel, with beta access expanded to Max, Team, and Enterprise users

Stronger performance on spreadsheets, tables, formulas, and multi-step transformations

Usage Limits

Removal of Opus-specific caps

Increased limits for Max and Team Premium users

Limits expected to be adjusted as newer models are released

Together, these adjustments ensure Opus 4.5 can be used reliably for everyday work across the Claude product ecosystem.

Q&A: Claude Opus 4.5

Q: How does Opus 4.5 differ from Sonnet 4.5?

A: Claude Opus 4.5 delivers higher performance in coding, reasoning, multi-agent tasks, long-running workflows, and vision—while using fewer tokens.

Q: What makes this a meaningful step forward?

A: Testers report that Opus 4.5 handles ambiguity and complex tradeoffs with minimal prompting.

Q: How does the effort parameter benefit developers?

A: It lets developers trade speed and cost against capability. At medium effort, for example, Opus 4.5 matches Sonnet 4.5 on SWE-bench Verified while using 76% fewer output tokens.

Q: Is Opus 4.5 safer than earlier models?

A: According to Anthropic, it is their most aligned model yet, with major gains in resisting prompt-injection and other adversarial attempts.

What This Means: Claude Opus 4.5

The release of Claude Opus 4.5 marks a turning point in how AI models support technical and knowledge-driven work. Its gains in coding, computer use, and multi-agent reasoning point toward workflows where AI systems take on more end-to-end tasks—while humans focus on judgment, strategy, and final oversight.

Anthropic’s emphasis on safety and robustness signals a shift toward models that are both more capable and more reliable. For developers and enterprises, Opus 4.5 offers higher performance with greater control over efficiency, making advanced AI more practical at scale.

As AI systems continue to improve at applied reasoning and long-term coherence, models like Opus 4.5 may reshape expectations for engineering, research, and multi-agent collaboration—moving tasks from “difficult” to “routine” and accelerating how teams get things done.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.