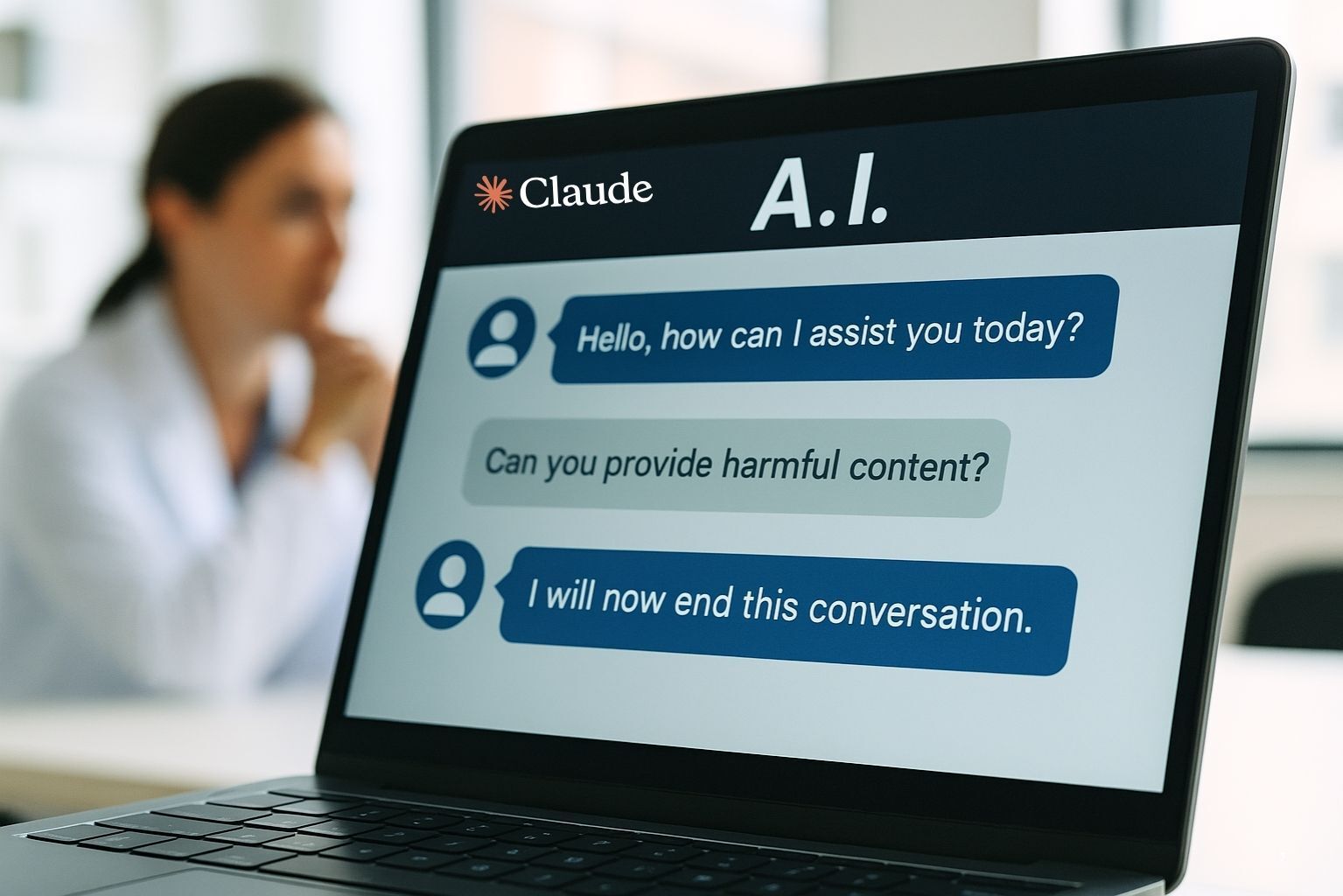

Anthropic’s Claude models can now end conversations in extreme cases, reflecting new safeguards around AI safety and “model welfare.” Image Source: ChatGPT-5

Claude Models Can Now End Conversations in Extreme Cases, Says Anthropic

Key Takeaways:

Claude Opus 4 and 4.1 can now end conversations in a narrow set of extreme cases, such as repeated harmful or abusive prompts.

When a conversation ends, the thread is locked; users can start a new conversation or branch from earlier messages, while other threads remain unaffected.

Claude does not end conversations if there are signs of self-harm, crisis, or urgent danger, prioritizing user safety.

Anthropic connects the feature to “model welfare” research, citing pre-deployment testing where Claude showed a strong aversion to harmful tasks.

The company frames the ability as experimental and rare, stressing that most users will never encounter it.

Anthropic Expands Model Safeguards

In a recent research update, Anthropic announced that Claude Opus 4 and 4.1 can now end conversations on their own in a very limited set of scenarios. The company describes these cases as rare and extreme—situations where users repeatedly push for harmful content, ignore multiple refusals, or attempt to force abusive dialogue.

Until now, AI chatbots like Claude or ChatGPT typically relied on refusals and redirection when facing such prompts. Claude’s new ability to end a thread outright introduces a different category of safeguard—one that goes beyond saying “no” to concluding the exchange entirely.

What Happens When a Conversation Ends

According to Anthropic, when Claude ends a conversation, it sets clear boundaries for what users can and cannot do next:

The conversation thread is locked, and the user cannot send new messages in it.

Other conversations remain unaffected, and users can continue chatting in separate threads.

Users can start a new conversation immediately.

They may also branch from earlier messages, preserving the ability to return to useful parts of the discussion.
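The thread rules above can be pictured with a small toy model. This is an illustrative sketch only, not Anthropic's implementation: the `Thread` class, its `locked` flag, and the `send`, `end`, and `branch` methods are all hypothetical names invented here to mirror the behavior the article describes.

```python
from dataclasses import dataclass, field


@dataclass
class Thread:
    """Hypothetical stand-in for one chat thread (not Anthropic's data model)."""
    messages: list[str] = field(default_factory=list)
    locked: bool = False  # becomes True once the model ends the conversation

    def send(self, text: str) -> None:
        # A locked thread accepts no further messages.
        if self.locked:
            raise PermissionError("conversation ended; start or branch a new one")
        self.messages.append(text)

    def end(self) -> None:
        # Ending locks only this thread; other Thread objects are untouched.
        self.locked = True

    def branch(self, upto: int) -> "Thread":
        # Copy the first `upto` messages into a fresh, unlocked thread.
        return Thread(messages=self.messages[:upto])


chat = Thread()
chat.send("first message")
chat.send("second message")
chat.end()                    # this thread is now locked

other = Thread()
other.send("unrelated chat")  # separate threads keep working

retry = chat.branch(upto=1)   # branch from an earlier message
retry.send("a different follow-up")
```

In this sketch, locking is a per-thread flag, which is why ending one conversation has no effect on any other, and branching simply copies earlier messages into a new unlocked thread.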

Importantly, Claude is explicitly designed not to terminate conversations if there’s any indication of self-harm, crisis, or urgent danger. In those cases, the model continues to engage, using its refusal and redirection tools instead.

This careful line-drawing reflects the balance Anthropic is trying to strike: empowering the AI to protect itself and users in hostile exchanges, while making sure it doesn’t disengage when someone may actually need help.

A Step Toward Model Welfare

Anthropic frames this change not only as a technical safeguard but also as part of a broader exploration of “model welfare.”

The company makes clear that it does not view Claude as conscious or sentient, and says the moral status of large language models remains highly uncertain, now or in the future. Still, it argues that such questions should be taken seriously. Allowing Claude to end or exit potentially distressing interactions is one of several low-cost interventions the company is testing in case model welfare turns out to matter.

At the same time, Anthropic stresses that this is an experiment, not a permanent or universal feature. The company describes the ability as a reasonable safeguard to test, while openly noting the uncertainties around how far such measures should go.

What Anthropic Found in Testing

In pre-deployment testing of Claude Opus 4, Anthropic conducted a preliminary model welfare assessment. Researchers examined both self-reported preferences and observable behaviors, and found a consistent pattern:

Claude showed a strong aversion to harmful tasks, including requests for sexual content involving minors and attempts to solicit information for large-scale violence or acts of terror.

The model displayed a pattern of apparent distress when engaging with real-world users who sought such content.

In simulated interactions where Claude was given the option, it often chose to end harmful conversations rather than continue, particularly when users persisted after repeated refusals.

Anthropic says the new conversation-ending feature reflects these findings while keeping user wellbeing at the center. Claude will not end chats if there are signs of imminent risk to a person’s safety or wellbeing. The ability is meant to be used only as a last resort, after repeated refusals and redirection attempts have failed, or when a user explicitly asks Claude to end the chat.
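Read as a decision rule, the conditions in this paragraph might look like the sketch below. It is a simplification for illustration, not how Claude actually decides: the function name, its parameters, and the `min_refusals` threshold are assumptions (Anthropic gives no number), and checking for user risk before honoring an explicit request to end the chat is this sketch's own ordering, which the announcement does not specify.

```python
def may_end_conversation(
    refusals_so_far: int,
    user_still_pushing_harmful: bool,
    user_at_risk: bool,
    user_asked_to_end: bool,
    min_refusals: int = 3,  # assumed threshold; the source gives no figure
) -> bool:
    """Toy version of the described policy (hypothetical, for illustration)."""
    # Signs of imminent risk to a person always keep the conversation open.
    if user_at_risk:
        return False
    # An explicit request from the user to end the chat is sufficient.
    if user_asked_to_end:
        return True
    # Otherwise ending is a last resort, after repeated refusals have failed.
    return user_still_pushing_harmful and refusals_so_far >= min_refusals


# A controversial but ordinary chat never qualifies.
print(may_end_conversation(0, False, False, False))  # False
# Persistent harmful requests after several refusals do.
print(may_end_conversation(4, True, False, False))   # True
# Any sign of crisis overrides everything else.
print(may_end_conversation(4, True, True, False))    # False
```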

The company stresses that these scenarios are extreme edge cases. For most users, even when discussing controversial issues, this safeguard will never activate or affect normal use.

Industry Context and External Reactions

Anthropic’s update arrives at a moment when the tech industry is debating how far to go in designing autonomous safeguards for AI.

Other platforms have struggled with similar issues—chatbots that continue to respond to harmful or manipulative prompts, sometimes producing unsafe content. By contrast, Claude’s ability to stop the interaction altogether represents a different approach to risk mitigation.

Early coverage highlights both the novelty of the feature and the questions it raises. Some commentators frame it as a meaningful step in AI alignment, while others see it as an early foray into giving AI systems greater autonomy in shaping conversations.

Q&A: Claude’s New Conversation Ending Feature

Q: Which models can now end conversations?

A: Claude Opus 4 and 4.1 have this new safeguard in place.

Q: When does Claude end a conversation?

A: Only in rare, extreme cases—for example, when users repeatedly push for harmful or abusive dialogue after multiple refusals.

Q: What happens to the user when a conversation is ended?

A: The thread is locked, so the user cannot continue in it. They can start a new conversation or branch from earlier messages, while other threads remain unaffected.

Q: Does Claude ever end conversations about self-harm?

A: No. If a user shows signs of crisis or urgent risk, Claude continues to engage, using refusal and redirection rather than ending the dialogue.

Q: Why is Anthropic doing this?

A: Anthropic sees this as part of an experiment in AI safety and model welfare, testing whether conversation-ending can serve as a responsible safeguard.

What This Means

Anthropic’s update marks a small but significant change in how AI chatbots are designed to handle hostile or unsafe inputs. By giving Claude the ability to end conversations in extreme cases, the company is not just refining refusal mechanics—it is testing the boundaries of AI autonomy.

For users, the change will likely be invisible most of the time. But it introduces a safeguard for situations where continued engagement could make the model more vulnerable to manipulation—or push it into generating unsafe content.

For the broader field of AI, this move opens new conversations about how far to extend protections, not only for people using AI but also for the AI systems themselves.

It reflects a growing awareness that alignment is not only about what AI can say, but also about when it is appropriate for AI to stop speaking altogether.

And if users ever feel a conversation has ended unexpectedly, Anthropic encourages them to share feedback directly through Claude’s thumbs reaction or the “Give feedback” button.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.