Image Source: ChatGPT-4o

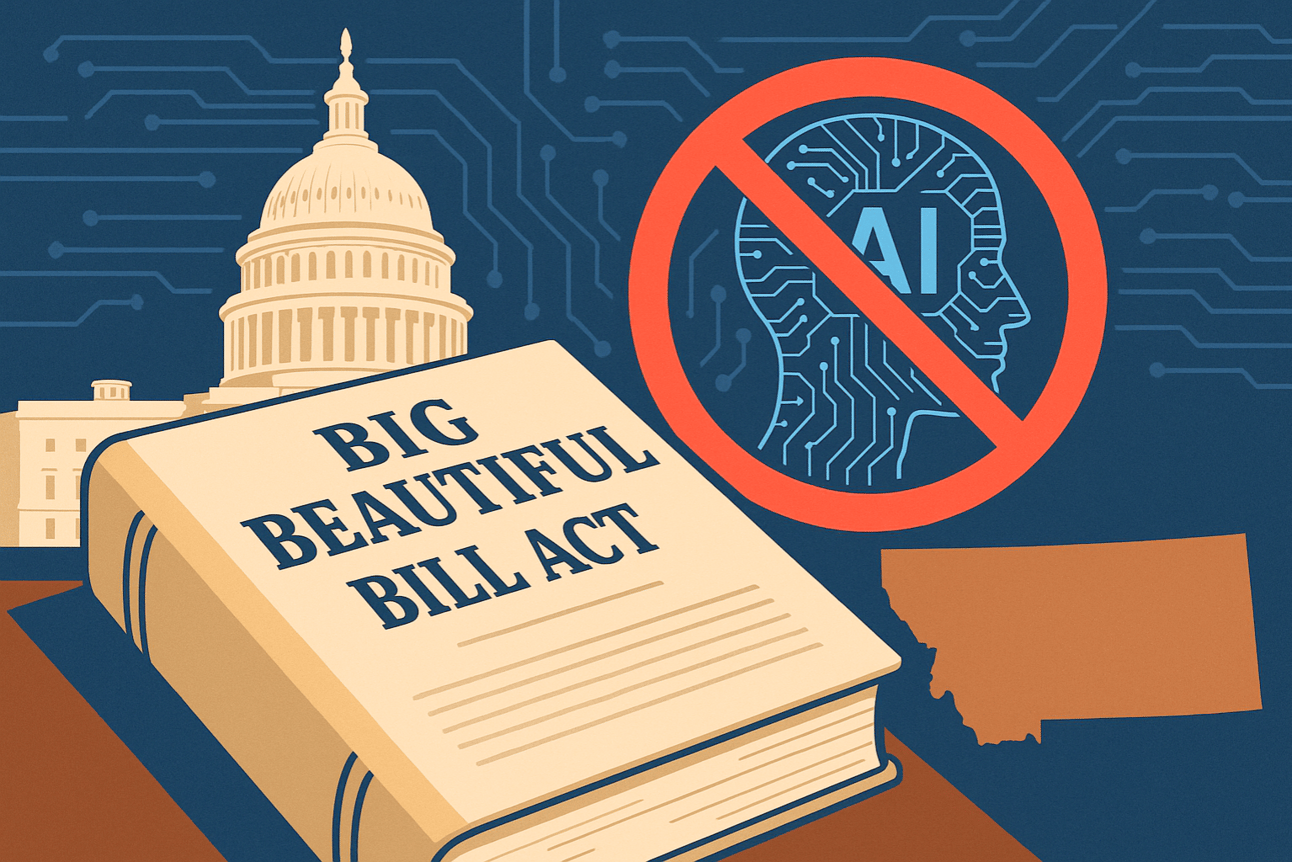

Big Beautiful Bill Would Block State AI Regulation for 10 Years

A provision buried deep within the federal reconciliation bill, formally titled the One Big Beautiful Bill Act, could reshape how artificial intelligence is regulated in the U.S. for years to come.

The legislation proposes a 10-year moratorium preventing states from enforcing any law that “limits, restricts, or otherwise regulates” artificial intelligence models or systems involved in interstate commerce. If passed, the moratorium would suspend enforcement of both new and existing state-level AI regulations for a decade.

Montana, which has passed a wave of AI-related laws in recent years, could see its efforts sidelined. The state has earned national attention for advancing bipartisan legislation on issues ranging from privacy protections and government AI usage to deepfake abuse and data center regulation.

Concerns Over Oversight and State Autonomy

The AI moratorium has drawn concern from lawmakers and officials across party lines. At a recent joint press conference, U.S. Senators Maria Cantwell (D-Wash.) and Marsha Blackburn (R-Tenn.) were joined by Attorneys General Nick Brown (Wash.) and Jonathan Skrmetti (Tenn.) to warn about the implications of stripping states of regulatory power.

“This is a technology still in its infancy, and the earlier the law can shape the contours of what products provide, the safer people will be,” Skrmetti said. “If we lose the ability for the states to regulate that... Congress, even moving as fast as it can, will not be able to keep up with all of the risks.”

Montana’s own AI laws were referenced during the event as an example of proactive regulation that could become unenforceable if the moratorium takes effect. No member of the state’s federal delegation responded to requests for comment.

A State-Led Approach to AI Regulation

Montana lawmakers have passed a range of AI-related bills in recent sessions, reflecting concerns about privacy, public safety, and government oversight. Among the measures signed into law:

- Restrictions on how state government can use AI, including in elections and surveillance
- Protections for individuals’ name, image, and likeness in personal data
- Criminal penalties for using fabricated explicit images, such as deepfakes targeting minors

The effort has been led by a bipartisan group of legislators including Sen. Daniel Zolnikov (R-Billings), Rep. Jill Cohenour (D-Helena), and Rep. Braxton Mitchell (R-Flathead). All have voiced concerns about the potential for federal legislation to override state authority on AI oversight.

Support for Guardrails, Not Bans

Zolnikov, who has sponsored multiple AI-related bills, has advocated for measured regulation that balances innovation with safeguards. While he supports open-source development over industry monopolies, he has warned against reactionary bans.

“It’s kind of a Catch-22. They don’t want states to do it. They refuse to do it. Now they’re looking at a ban. What do you do?” he said.

Rep. Mitchell, who supports “99%” of the broader Big Beautiful Bill Act, said the AI moratorium directly undermines one of Montana’s key legislative efforts—HB 179, which places limits on how government agencies can use artificial intelligence.

“We shouldn’t be blocking states from protecting citizens against surveillance abuse, censorship, and tech-driven manipulation,” Mitchell wrote in an email on Wednesday. “I’ll keep standing for individual liberty and limited government, with or without a moratorium. Hopefully it’s stripped out in the Senate or when it goes back over to the House.”

Mitchell’s comments reflect a broader concern: that a one-size-fits-all federal approach could override state-level safeguards designed to meet local needs.

Gov. Greg Gianforte, a former tech executive, has also voiced caution about overregulating emerging technologies. “Regulation tends to follow, not lead,” he said at an April press conference, while noting that unforeseen consequences—such as those from social media—highlight the need for careful study.

Why This Matters Now

The proposed moratorium comes at a time when states like Montana are taking the lead in addressing AI’s impact on daily life—often in areas where federal regulation is lagging. From privacy to election integrity to the use of synthetic content in bullying, the pace of technological change has spurred state-level action that could be frozen if the bill passes.

Supporters of regulation argue that states are better positioned to respond to emerging risks, especially while AI is still evolving. As Cohenour put it: “I’m scared. And I think Montanans should be scared about their data and the way they’re affected by AI working in these different systems.”

What This Means

The AI moratorium tucked into the Big Beautiful Bill Act highlights a growing tension between rapid innovation and public accountability. While the federal provision may reflect pressure from large tech companies to avoid a patchwork of state laws, it could also limit the ability of elected officials to protect constituents from real-world harms—especially in areas like surveillance, data misuse, and digital manipulation.

As Rep. Mitchell warned, blocking states from acting now could undermine hard-won protections already passed with bipartisan support. It’s not just a legal or procedural debate—it’s about who gets to decide what “responsible AI” looks like, and whether local governments can respond quickly enough to protect their residents.

If the moratorium passes, it could mark a shift in power away from states and toward centralized control—one that favors commercial scalability over community standards. For states like Montana, which have taken the lead on AI safeguards, that’s not just a political concern. It’s a question of rights, responsibility, and whether innovation will be shaped by public interest or private priorities.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.