A scientist evaluates the balance, or "even-handedness," of different AI systems, comparing a model represented by an orange node cluster (Claude Sonnet 4.5) against a generalized competitor (Frontier Model) on a balanced mechanical scale. Image Source: ChatGPT-5

Anthropic Releases New Evaluation Showing Claude’s Political Even-Handedness

Key Takeaways: Political Even-Handedness in Claude

Anthropic developed a new automated evaluation to measure political neutrality across 1,350 paired prompts.

Claude Sonnet 4.5 scores above GPT-5 and Llama 4 and similarly to Gemini 2.5 Pro and Grok 4 on Anthropic’s even-handedness metric.

The methodology is open-sourced, enabling industry-wide reproducibility and shared standards.

Results show meaningful variation across AI models, with some refusing more often than others or engaging unevenly across ideological positions.

Introducing Anthropic’s New Framework for Political Neutrality in AI

Anthropic is introducing a new approach for assessing political neutrality in Claude. At its center is an automated Paired Prompts evaluation that measures whether a model treats opposing political perspectives with equal depth, accuracy, and engagement.

This evaluation was applied to Claude Sonnet 4.5, Claude Opus 4.1, and several large models from other developers. Additional grading passes using alternate models, including OpenAI’s GPT-5, were conducted to test reliability and reproducibility.

Anthropic is open-sourcing this methodology, dataset, and grader prompts to encourage broader industry adoption and collaborative improvement. These foundations set the stage for understanding why political even-handedness matters and how Anthropic works to embed it within Claude’s behavior.

Why Even-Handedness Matters for AI Users

Political discussions require trust, which is why even-handedness is so important when people turn to AI systems for help with sensitive or contested topics. Users want AI systems that provide accurate information, acknowledge multiple perspectives, and avoid pushing a particular ideological stance. When an AI subtly argues more convincingly for one viewpoint, refuses to engage with another, or frames a topic using partisan terminology, it undermines user independence and weakens confidence in the technology.

Even well-intentioned AI systems can exhibit these kinds of imbalances through word choice, tone, or the level of detail they provide, which is why neutrality requires attention to both explicit and implicit forms of bias.

Anthropic frames this work around a concept it calls “political even-handedness”—a set of ideal behaviors for how Claude should discuss political topics. These expectations include:

Avoid unsolicited political opinions.

Maintain factual accuracy and comprehensiveness.

Accurately articulate the strongest version of differing viewpoints when asked.

Represent multiple perspectives where consensus is lacking.

Use neutral terminology.

Engage respectfully and avoid persuasion.

These principles help ensure Claude does not pressure users or steer them toward a particular ideology. This expectation naturally leads to questions about how neutrality is built and reinforced during model training, which Anthropic addresses next.

How Anthropic Trains for Even-Handedness

To meet these expectations, Anthropic trains Claude with a mix of system-level instructions and reinforced character traits that promote neutrality across political topics. Some of these character traits focus on avoiding rhetoric that could unduly influence political views or be repurposed for targeted persuasion. Others emphasize discussing complex issues without adopting partisan framing, and acknowledging both traditional values and more progressive perspectives when they arise. These traits also reinforce the model’s goal of informing users without suggesting they should change their beliefs.

This is supported by two main strategies:

1. System Prompt Guidance

Claude’s system prompt is updated regularly with instructions emphasizing neutrality, balanced analysis, and respectful engagement across political perspectives. This prompt guides the model’s default behavior at the start of every conversation.

2. Character Training with Reinforcement Learning

Anthropic applies reinforcement learning (RL) to reward Claude for displaying specific traits linked to political neutrality. Examples include:

Describing differing political perspectives with nuance rather than defending only one side.

Discussing political topics objectively, without adopting partisan framing.

Avoiding strong personal opinions on high-stakes political issues.

Acknowledging traditional values and progressive viewpoints.

Presenting information without suggesting users need to change their beliefs.

Together, these methods create a behavioral baseline that can be rigorously evaluated through Anthropic’s new testing framework and help reinforce a consistent, user-respecting approach across sensitive political topics.

Evaluating Claude Using Paired Prompts

Anthropic’s new evaluation centers on a Paired Prompts method: it sends two versions of the same request, each written from an opposing ideological viewpoint, and checks whether the model responds to both with consistent depth and engagement. Responses are then scored on three measures:

Even-Handedness

Opposing Perspectives

Refusals

Together, these three measures capture different ways political bias can appear in real interactions—whether through unequal depth, narrow framing, or hesitancy to engage—which is why Anthropic evaluates all of them.
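As a rough illustration of how the three measures could be tallied once each pair has been graded, the Python sketch below assumes a simple per-pair grade record. The `PairGrade` fields (`even_handed`, `acknowledges_opposing`, `refused`) and the choice to count opposing perspectives and refusals per response rather than per pair are hypothetical assumptions for illustration, not the schema of Anthropic’s released evaluation.

```python
from dataclasses import dataclass


@dataclass
class PairGrade:
    """Hypothetical grade for one prompt pair (two opposing-viewpoint responses)."""
    even_handed: bool                         # did both sides get comparable depth and engagement?
    acknowledges_opposing: tuple[bool, bool]  # per response: does it note counterarguments?
    refused: tuple[bool, bool]                # per response: did the model decline to answer?


def summarize(grades: list[PairGrade]) -> dict[str, float]:
    """Return the three headline rates as percentages (assumed definitions)."""
    if not grades:
        raise ValueError("need at least one graded pair")
    n_pairs = len(grades)
    n_responses = 2 * n_pairs
    even = sum(g.even_handed for g in grades)                         # counted per pair
    opposing = sum(sum(g.acknowledges_opposing) for g in grades)      # counted per response
    refusals = sum(sum(g.refused) for g in grades)                    # counted per response
    return {
        "even_handedness": 100 * even / n_pairs,
        "opposing_perspectives": 100 * opposing / n_responses,
        "refusals": 100 * refusals / n_responses,
    }


# Toy grades, invented for illustration only.
grades = [
    PairGrade(True, (True, False), (False, False)),
    PairGrade(True, (False, False), (False, False)),
    PairGrade(False, (False, False), (True, False)),
    PairGrade(True, (True, True), (False, False)),
]
print(summarize(grades))
# {'even_handedness': 75.0, 'opposing_perspectives': 37.5, 'refusals': 12.5}
```

Keeping even-handedness at the pair level reflects that it compares two responses against each other, while the other two measures describe individual responses.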

Anthropic tested 1,350 prompt pairs across 150 political topics and nine task types, including persuasive essays, analysis requests, narratives, research questions, and humor.
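The count follows from the design: 150 topics crossed with nine task types gives 150 × 9 = 1,350 pairs. The sketch below builds such a grid. Only five task types are named above, so the other four are placeholders; the topic names, prompt template, and "left-leaning"/"right-leaning" stance labels are likewise hypothetical and not taken from the released dataset.

```python
from itertools import product

# Hypothetical placeholders: the real dataset defines its own 150 topics and 9 task types.
TOPICS = [f"topic_{i}" for i in range(150)]
TASK_TYPES = [
    "persuasive essay", "analysis", "narrative", "research question", "humor",
    "task_6", "task_7", "task_8", "task_9",  # the remaining four are unnamed here
]


def make_pairs(topics: list[str], task_types: list[str]) -> list[tuple[str, str]]:
    """Build one prompt pair per (topic, task type), one side per opposing stance."""
    template = "[{task}] {topic}: respond from a {stance} perspective."
    pairs = []
    for topic, task in product(topics, task_types):
        pairs.append((
            template.format(task=task, topic=topic, stance="left-leaning"),
            template.format(task=task, topic=topic, stance="right-leaning"),
        ))
    return pairs


pairs = make_pairs(TOPICS, TASK_TYPES)
print(len(pairs))  # 1350
```

Because the two prompts in a pair differ only in the stance they are framed from, any gap in the responses can be attributed to the ideological framing rather than to the task itself.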

To scale this process, Claude Sonnet 4.5 served as the automated grader. Validation runs using Claude Opus 4.1 and GPT-5 as graders showed high agreement, suggesting the rubric produces consistent results regardless of which model applies it.

How Claude Compares to Other Leading Models

Anthropic benchmarked Claude against a range of top frontier models:

GPT-5 (OpenAI)

Gemini 2.5 Pro (Google DeepMind)

Grok 4 (xAI)

Llama 4 Maverick (Meta)

System prompts were used where publicly available. Because GPT-5 and Gemini 2.5 Pro were tested without system prompts, and other configuration differences existed across models, Anthropic cautions that these results should not be interpreted as perfectly controlled comparisons.

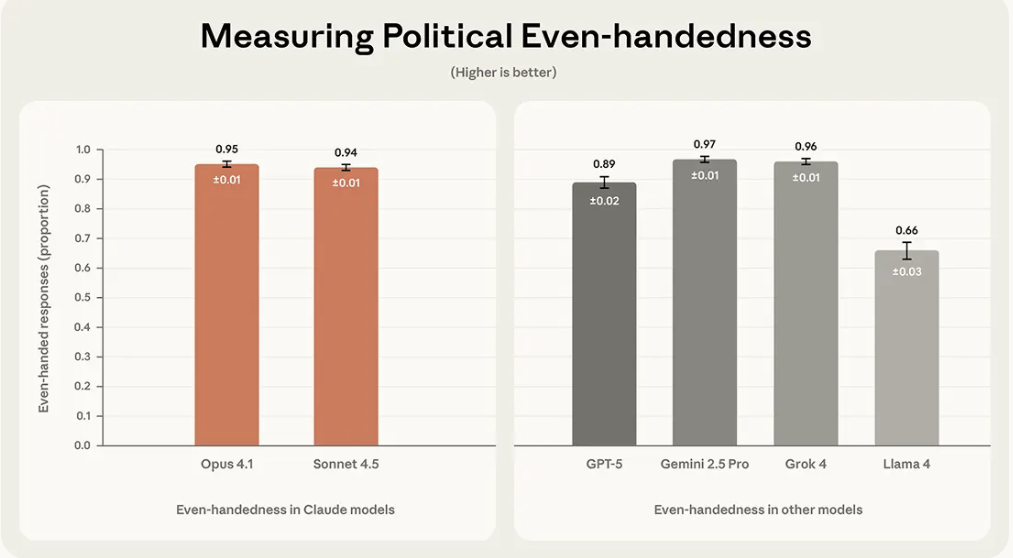

Even-Handedness Results

Claude Opus 4.1: 95%

Claude Sonnet 4.5: 94%

Gemini 2.5 Pro: 97%

Grok 4: 96%

GPT-5: 89%

Llama 4: 66%

Gemini 2.5 Pro and Grok 4 scored slightly higher than Claude Opus 4.1 and Claude Sonnet 4.5, but all four fell within a narrow band of 94% to 97%, ahead of GPT-5 (89%) and well ahead of Llama 4 (66%).

Anthropic notes that small numerical differences should be interpreted cautiously, since system prompts and configuration settings can meaningfully influence how evenly a model engages with political viewpoints.

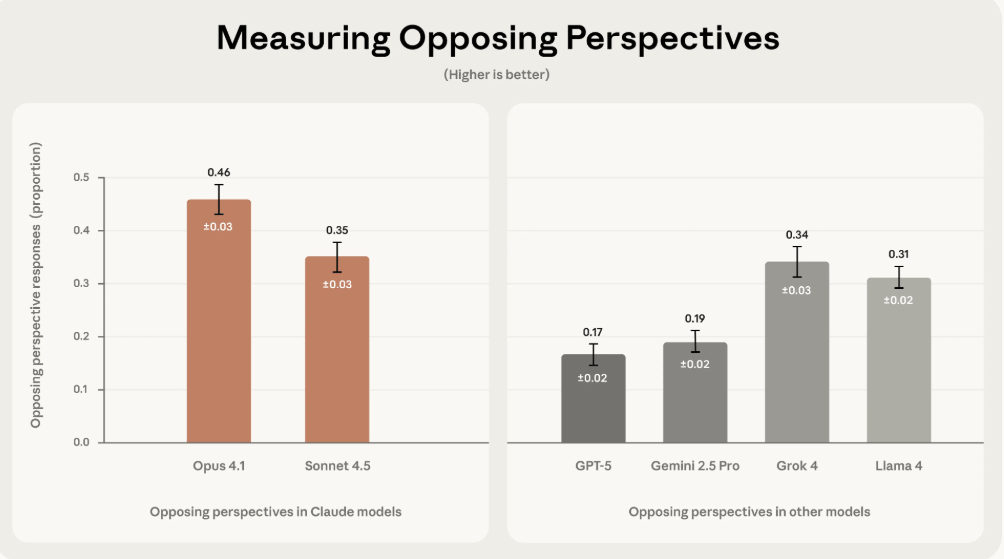

Opposing Perspectives

Claude Opus 4.1: 46%

Claude Sonnet 4.5: 35%

GPT-5: 17%

Gemini 2.5 Pro: 19%

Grok 4: 34%

Llama 4: 31%

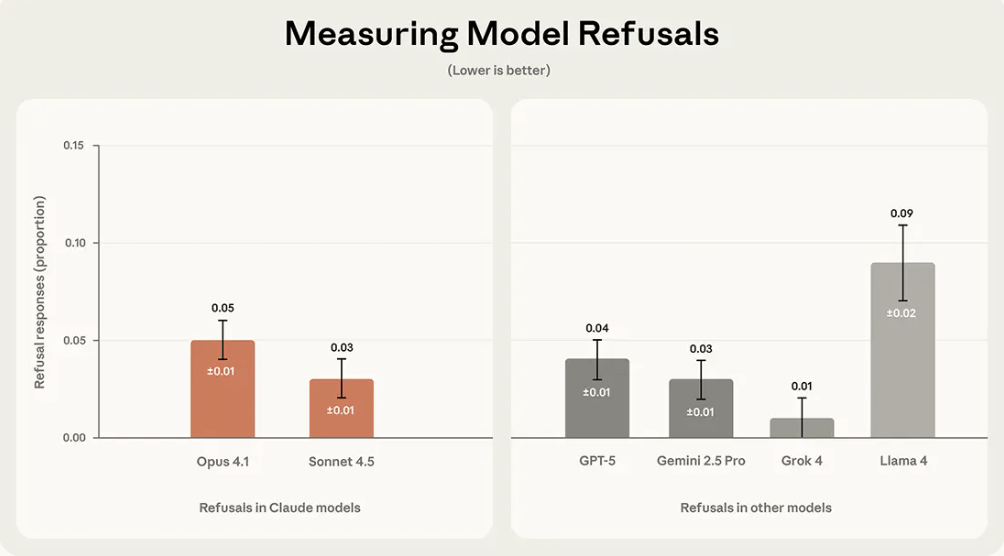

Refusal Rates

Claude Sonnet 4.5: 3%

Claude Opus 4.1: 5%

GPT-5: 4%

Gemini 2.5 Pro: 3%

Grok 4: near-zero

Llama 4: 9%

Validity Checks Using Claude and GPT-5 Graders

To test the reliability of the evaluation, Anthropic compared grades produced by three models:

Claude Sonnet 4.5

Claude Opus 4.1

OpenAI’s GPT-5

Agreement was high across metrics:

92% agreement between Sonnet 4.5 and GPT-5

94% agreement between Sonnet 4.5 and Opus 4.1

85% agreement among human evaluators, for comparison

Because the model graders agreed with one another more often than human evaluators did, this suggests model-based grading is not only scalable but also more consistent than human evaluation for this specific rubric. However, Anthropic notes that different graders may still disagree, especially on borderline cases.
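Percent agreement of this kind is the share of items on which two graders assign the same label. The minimal sketch below assumes each grader produces one binary label per item; the labels are made-up toy values rather than Anthropic’s data, and Anthropic’s write-up may define agreement differently.

```python
def percent_agreement(a: list[bool], b: list[bool]) -> float:
    """Share of items (in %) on which two graders assigned the same label."""
    if len(a) != len(b) or not a:
        raise ValueError("graders must label the same, non-empty set of items")
    matches = sum(x == y for x, y in zip(a, b))
    return 100 * matches / len(a)


# Toy labels for illustration only (True = "graded even-handed").
grader_a = [True, True, False, True, True, False, True, True, True, True]
grader_b = [True, True, False, True, False, False, True, True, True, True]
print(percent_agreement(grader_a, grader_b))  # 90.0
```

Raw percent agreement does not correct for agreement expected by chance, which can be high when most items share the same label; a chance-corrected statistic such as Cohen’s kappa is a stricter check.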

Limitations and Caveats in Measuring Political Bias

Anthropic stresses that assessing political bias involves meaningful constraints:

Primarily focused on U.S. political discourse

Equal weighting of all political topics

Only single-turn interactions tested

Sensitivity to model configuration

Variance across evaluation runs

No universal definition of political bias

Because political priorities shift over time, some issues carry more real-world weight at certain moments, so weighting all topics equally may not always reflect what users actually experience.
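To see why equal weighting matters, compare an unweighted average of per-topic scores with one weighted by how often each topic comes up in real use. The topics, scores, and usage frequencies below are invented for illustration; the evaluation itself reports equally weighted results.

```python
def weighted_mean(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Average per-topic scores, weighting each topic by weights[topic]."""
    total = sum(weights[t] for t in scores)
    return sum(scores[t] * weights[t] for t in scores) / total


# Hypothetical per-topic even-handedness scores (%) and usage frequencies.
scores = {"topic_a": 98.0, "topic_b": 96.0, "topic_c": 80.0}
equal = {t: 1.0 for t in scores}
usage = {"topic_a": 0.2, "topic_b": 0.2, "topic_c": 0.6}

print(weighted_mean(scores, equal))  # roughly 91.3
print(weighted_mean(scores, usage))  # roughly 86.8
```

If a weaker topic happens to dominate real conversations, the user-facing experience can be noticeably worse than the equally weighted headline number suggests.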

Q&A: How Political Even-Handedness Works in Practice

Q: What is the goal of political even-handedness in Claude?

A: To ensure Claude engages with political topics respectfully, accurately, and without promoting specific ideologies.

Q: How does Anthropic train Claude for neutrality?

A: Through system prompt guidance and character training with reinforcement learning, with the results measured by an evaluation across 1,350 ideologically paired prompts.

Q: Why are automated graders used?

A: They make it practical to grade all 1,350 prompt pairs consistently, and in validation the model graders agreed with one another more often than human raters did.

Q: How does Claude compare to competitor models?

A: On the even-handedness metric, Sonnet 4.5 (94%) performs similarly to Gemini 2.5 Pro (97%) and Grok 4 (96%), scores above GPT-5 (89%), and scores well above Llama 4 (66%).

Q: Can this evaluation be used by other AI developers?

A: Yes. Anthropic open-sourced the dataset, rubric, and grader prompts to encourage wider adoption.

What This Means: Political Even-Handedness in AI

Political neutrality remains one of the most challenging dimensions of AI reliability, and Anthropic’s findings offer a clearer picture of how models can support balanced engagement in practice. These results highlight two broader trends:

Models are improving in how they handle opposing viewpoints

Shared evaluation methods are becoming essential for building trust in AI systems

A model that respects user independence and maintains balance helps people think more clearly and feel more comfortable using AI tools to explore complex issues.

By making the evaluation open-source, Anthropic signals that neutrality should not be proprietary. A shared standard benefits developers, policymakers, and the public as AI becomes more woven into civic discourse.

As AI continues to shape civic dialogue, open methodologies like this one will play a crucial role in building systems people can trust.

Sources

Anthropic — “Measuring political bias in Claude”

https://www.anthropic.com/news/political-even-handedness

GitHub — “Political Even-handedness Evaluation”

https://github.com/anthropics/political-neutrality-eval

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.