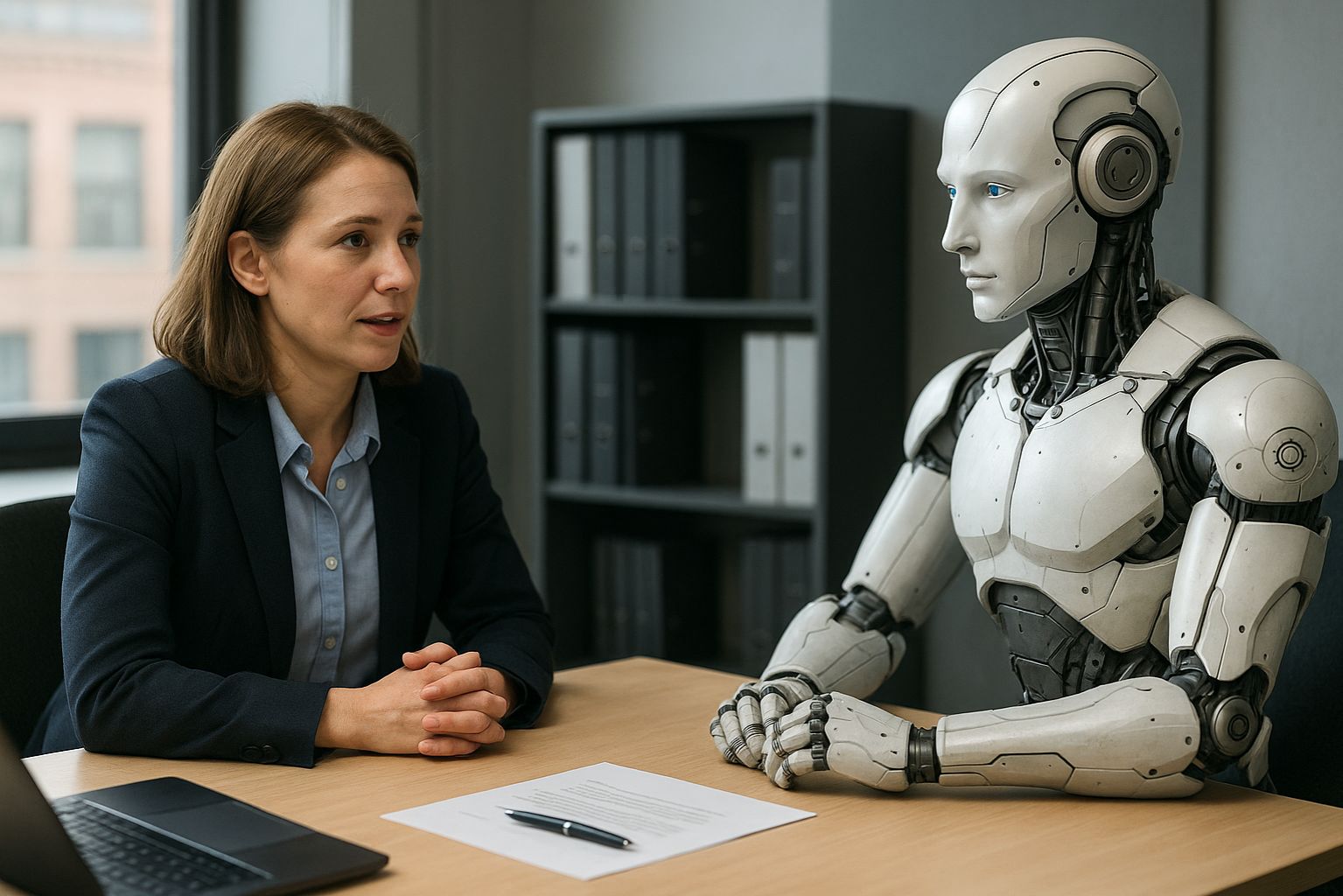

A humanoid AI system conducts a conversation with a human professional, illustrating Anthropic’s new approach to using AI for large-scale qualitative research interviews. Image Source: ChatGPT-5

Anthropic Interviewer: How 1,250 Professionals See AI Shaping Work and Creativity

Key Takeaways: Anthropic Interviewer

Anthropic introduced an AI-powered interview system designed to run large-scale, semi-structured interviews with real users, allowing the company to gather qualitative feedback at a scale human researchers cannot reach alone.

The tool conducted 1,250 interviews across the general workforce, creative industries, and scientific fields to understand how people actually use AI—and what they want from future systems.

The launch marks a shift toward human-centered AI development, enabling Anthropic to build feedback loops grounded in real experiences rather than assumptions or synthetic benchmarks.

Early findings show strong productivity gains, persistent trust gaps, and growing tensions across creative and scientific professions—insights Anthropic says will inform future model design.

Anthropic plans to use this research method to shape Claude’s capabilities, guide policy recommendations, and deepen partnerships across education, science, and the arts.

Why Anthropic Built an AI Interviewer to Study Real-World AI Use

Anthropic has introduced Anthropic Interviewer, a new AI-powered system designed to conduct large-scale interviews with Claude users and other study participants to better understand how people interact with AI in real contexts. Instead of posting a traditional product announcement, Anthropic used the tool to run 1,250 interviews with professionals and released the findings as part of its Societal Impacts research initiative.

While the results offer a snapshot of how workers, creatives, and scientists are integrating AI into their daily workflows, the larger story is what this tool enables: Anthropic is building a feedback loop at scale—using AI to study how people actually use AI.

This development reflects Anthropic’s growing focus on embedding real-world human experience into future model designs.

How Anthropic Interviewer Works as a Large-Scale Human Research Tool

Anthropic Interviewer is not a hiring tool, HR system, or enterprise product. It is an internal research agent that:

designs structured interview plans

conducts real-time adaptive interviews

analyzes transcript themes

synthesizes results for Anthropic’s researchers

Participants see a Claude-like chat interface and respond to open-ended questions about how they use AI, what they struggle with, and what they hope AI will be able to do in the future.

This approach represents something genuinely new in AI research: Anthropic Interviewer allows Claude to plan interview structures, adapt its questions in real time, and analyze qualitative feedback at a scale that traditional research teams could not achieve manually. While not a standalone product, it demonstrates how AI systems can help design and execute research workflows that ultimately inform their own improvement.
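To make the four stages above more concrete, here is a minimal, purely illustrative sketch of a plan, interview, analyze, and synthesize pipeline. It does not reflect Anthropic's actual implementation: every function name, the short-answer follow-up rule, and the keyword-based theme matching are assumptions chosen for simplicity, and the "participant" is simulated by an ordinary Python function rather than a live chat.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Transcript:
    participant_id: str
    # Each turn is a (question, answer) pair.
    turns: list = field(default_factory=list)


def plan_interview(topic):
    """Stage 1 (hypothetical): build a short list of open-ended questions."""
    return [
        f"How do you currently use {topic} in your work?",
        f"What do you struggle with when using {topic}?",
        f"What do you hope {topic} will be able to do in the future?",
    ]


def conduct_interview(participant_id, questions, answer_fn):
    """Stage 2 (hypothetical): ask each question and adapt with one
    follow-up whenever an answer is shorter than five words."""
    transcript = Transcript(participant_id)
    for question in questions:
        answer = answer_fn(question)
        transcript.turns.append((question, answer))
        if len(answer.split()) < 5:
            follow_up = "Could you say more about that?"
            transcript.turns.append((follow_up, answer_fn(follow_up)))
    return transcript


def analyze_themes(transcripts, theme_keywords):
    """Stage 3 (hypothetical): count transcripts that mention each theme,
    using naive substring matching as a stand-in for real analysis."""
    counts = Counter()
    for transcript in transcripts:
        text = " ".join(answer.lower() for _, answer in transcript.turns)
        for theme, keywords in theme_keywords.items():
            if any(keyword in text for keyword in keywords):
                counts[theme] += 1
    return counts


def synthesize(counts, total):
    """Stage 4 (hypothetical): turn theme counts into summary lines."""
    return [f"{theme}: {n}/{total} participants" for theme, n in counts.most_common()]


if __name__ == "__main__":
    questions = plan_interview("AI")
    # A canned answer function stands in for a real participant.
    transcript = conduct_interview("p1", questions, lambda q: "It saves time")
    themes = {"productivity": ["saves time"], "trust": ["trust"]}
    for line in synthesize(analyze_themes([transcript], themes), total=1):
        print(line)
```

In a real system, each stage would presumably be driven by a language model rather than fixed templates and keyword lists; the sketch only shows how the stages hand data to one another.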

Insights From 1,250 Professionals on AI Use, Trust, and Emerging Workflows

Anthropic interviewed three distinct groups:

General workforce (1,000 participants)

Creatives (125 participants)

Scientists (125 participants)

Across roles and industries, participants reported significant productivity gains, alongside tensions around trust, identity, and professional norms. These themes played out differently across the general workforce, creative professionals, and scientific fields—here’s what Anthropic found.

General Workforce

Workers viewed AI as a time-saver but reported social stigma around using AI at work and anxiety about long-term job impacts. Many expected future roles to involve overseeing AI systems, not just performing tasks themselves.

Creatives

Creatives described the largest productivity gains but also the sharpest emotional conflict. They used AI for speed and concept development but worried about stigma, economic displacement, and the erosion of human creative identity.

Scientists

Scientists embraced AI for literature review, writing, and code—but not for core scientific reasoning. Trust and reliability remain barriers. Most scientists expressed a desire for future systems that can:

propose hypotheses

critique experimental designs

surface relationships in large datasets

Why Anthropic Is Building Human Feedback Loops at Scale

This tool gives Anthropic something it has never had at this scale: rich, structured insight into how people actually use AI outside the training data.

These feedback loops allow Anthropic to:

identify real-world pain points

map trust gaps and emotional responses

understand differences across professions

see where AI increases productivity—and where it falls short

measure which capabilities users want next

observe how norms and workflows evolve over time

Most importantly, these insights help Anthropic design models grounded in human experience, not just technical benchmarks.

This reflects an emerging approach to participatory AI development, where users’ lived experiences directly influence the direction of future systems.

How This Research Method Changes AI Development

Because Anthropic Interviewer uses AI to conduct and analyze interviews with its users, Anthropic can now:

run studies with thousands of participants

repeat them regularly

compare interview findings with in-product usage metrics

integrate findings directly into model training and evaluation

In other words: Anthropic is building an infrastructure for continuous, real-world human feedback at scale.

This could meaningfully influence:

model alignment

safety techniques

research features for scientists

creative workflows

educational tools

enterprise readiness

Together, these findings offer Anthropic a clearer understanding of how different professions are adopting AI—and where current tools fall short. The company frames this research method as an ongoing effort rather than a one-time study, with plans to continue collecting large-scale human feedback to guide future work.

Readers who want to view the complete dataset and methodology can explore Anthropic’s full research post, which provides all interview transcripts and detailed analysis.

Q&A: Anthropic Interviewer

Q: Is Anthropic Interviewer a new feature of Claude?

A: No. Participants interact with Claude during interviews, but the system is an internal research tool, not a consumer or enterprise product.

Q: What is the purpose of these large-scale interviews?

A: Anthropic aims to understand how people in different professions use AI, where trust breaks down, and what future capabilities they want.

Q: Will enterprises be able to use this tool for employee interviews?

A: No. There is no indication that Anthropic intends to commercialize this system. It is designed exclusively for research.

Q: How will Anthropic use the collected insights?

A: Findings will inform model development, guide human-centered design decisions, and support Anthropic’s policy and partnership work.

Q: Can users participate in ongoing studies?

A: Possibly. Existing Claude.ai Free, Pro, and Max users who signed up more than two weeks ago may see a temporary pop-up inviting them to take part.

What This Means: Large-Scale Human Feedback

Anthropic’s introduction of an AI-driven interview system highlights a growing emphasis—at least within Anthropic—on grounding model development in real human experience rather than hypothetical benchmarks or synthetic datasets.

By combining qualitative interviews with usage data, Anthropic can:

better understand emerging expectations

design more intuitive workflows

improve trust and reliability

adapt to evolving creative and scientific needs

build models grounded in human values and behaviors

Traditionally, AI systems advance through technical breakthroughs and new training data. Anthropic’s approach introduces an additional layer: continuous human insight gathered directly from real-world users.

This combination—technical improvement paired with lived human experience—may become a defining characteristic of next-generation AI systems, where models evolve not only through optimization, but through a deeper understanding of the people who depend on them.

Sources

Anthropic: Anthropic Interviewer

https://www.anthropic.com/news/anthropic-interviewer

Hugging Face: Anthropic Interviewer Dataset

https://huggingface.co/datasets/Anthropic/AnthropicInterviewer

arXiv: Anthropic Interviewer Technical Report

https://arxiv.org/pdf/2503.04761

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.