Image Source: ChatGPT-4o

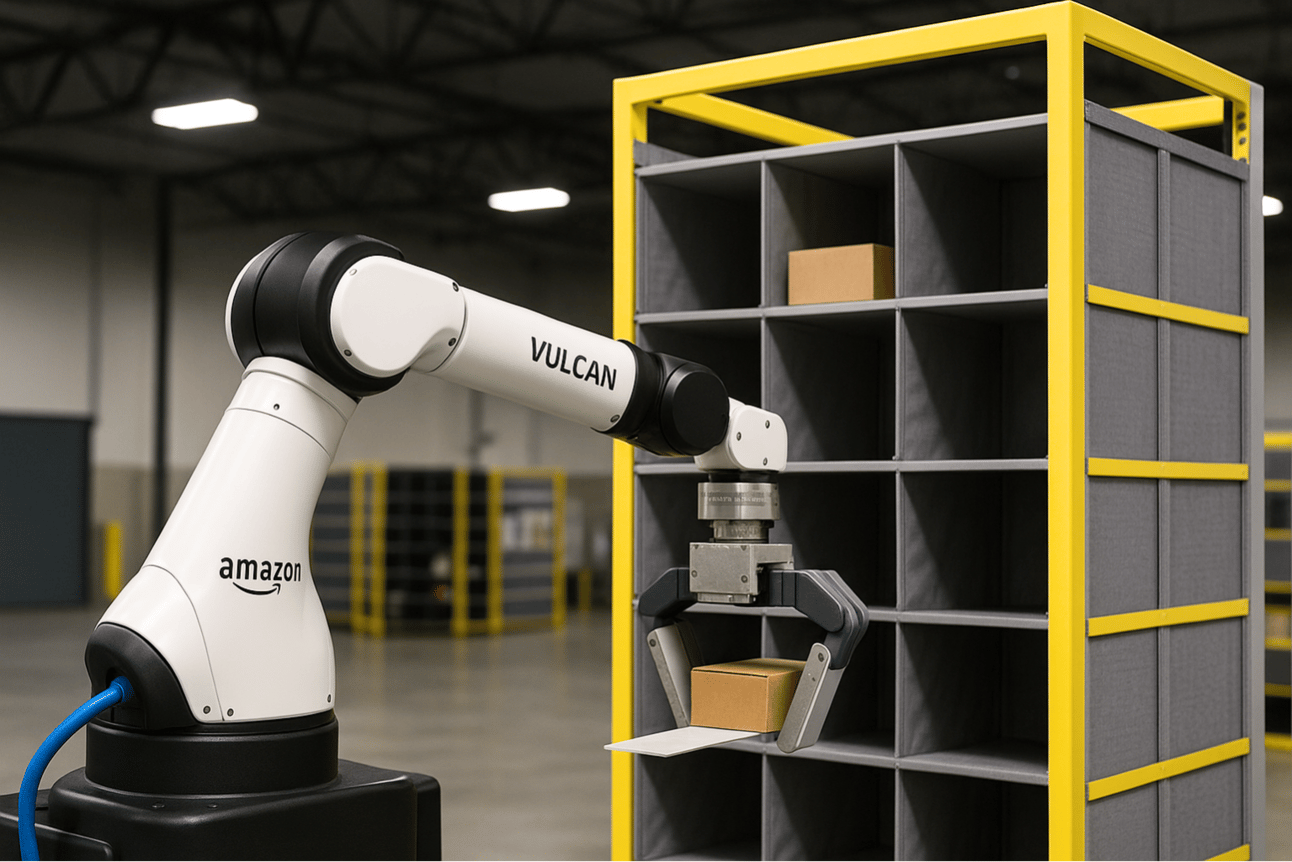

Amazon Unveils Vulcan: A Robot That Can Feel

Amazon has introduced Vulcan, its first robot equipped with a sense of touch—an innovation that aims to make warehouse operations faster, safer, and more ergonomic for employees. Debuted at the company’s Delivering the Future event in Dortmund, Germany, Vulcan represents a major leap in what robots can do in real-world environments.

From Vision to Touch: A Step Change in Robotics

Until now, most commercial robots have relied heavily on sight and pre-programmed motions. Vulcan adds something long missing: the ability to feel. According to Aaron Parness, Amazon’s director of applied science, traditional industrial robots are often “numb and dumb,” either halting suddenly or pushing through obstacles because they can’t sense when they’ve made unexpected contact.

“Vulcan represents a fundamental leap forward in robotics,” said Parness. “It’s not just seeing the world, it’s feeling it.”

Real-World Impact in Fulfillment Centers

Already in use at facilities in Spokane, Washington, and Hamburg, Germany, Vulcan is helping Amazon employees pick and stow items with greater ease and safety. Front-line worker Kari Freitas Hardy said the technology has made her job easier and opened new technical opportunities for colleagues who now work more closely with robotics.

Vulcan is particularly effective at reaching the top and bottom rows of Amazon’s inventory pods—areas that usually require ladders or awkward bending. Automating those tasks reduces physical strain, improves efficiency, and allows employees to focus on mid-level shelves, where movements are more natural and ergonomic.

How Vulcan Works

Unlike earlier Amazon robots that rely on suction cups and computer vision alone, Vulcan is the first to incorporate touch sensitivity and force feedback. Its hardware includes:

“End of arm tooling” with a pressure-sensitive gripping system resembling a ruler attached to paddles

Force sensors that measure grip strength and contact force

Built-in conveyor belts that move items into storage compartments

Cameras that verify correct item selection and detect potential errors

This combination allows Vulcan to do more than simply grab and drop. It can shift nearby items to create space, grip products gently but securely, and avoid damaging anything during stowing or retrieval. Its design helps it pick or place items in crowded one-foot-square compartments—something that has challenged past automation efforts.
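To make the idea of gripping "gently but securely" concrete, here is a minimal sketch of a force-limited grip loop in Python. It is an illustration only, not Amazon's control code: the `SimulatedGripper` class, the `grip_until_secure` function, and every number in it are hypothetical, and the linear "stiffness" model is a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class SimulatedGripper:
    """Toy stand-in for a force-sensing gripper (hypothetical, not a real robot API)."""
    stiffness_n_per_mm: float  # assumed: force rises linearly once the item is touched
    contact_at_mm: float       # paddle travel at which the item is first touched
    travel_mm: float = 0.0     # how far the paddles have closed so far

    def close_by(self, step_mm: float) -> None:
        self.travel_mm += step_mm

    def contact_force_n(self) -> float:
        squeeze_mm = max(0.0, self.travel_mm - self.contact_at_mm)
        return squeeze_mm * self.stiffness_n_per_mm


def grip_until_secure(gripper, target_force_n, max_force_n,
                      step_mm=0.5, max_steps=200):
    """Close in small steps, reading the force sensor before each step.

    Returns True once the measured force reaches the target, and False if
    the force overshoots the safety limit or the target is never reached.
    """
    for _ in range(max_steps):
        force = gripper.contact_force_n()
        if force > max_force_n:
            return False  # squeezing too hard: stop rather than risk damage
        if force >= target_force_n:
            return True   # firm enough to hold the item
        gripper.close_by(step_mm)
    return False


if __name__ == "__main__":
    soft_item = SimulatedGripper(stiffness_n_per_mm=2.0, contact_at_mm=10.0)
    print(grip_until_secure(soft_item, target_force_n=5.0, max_force_n=8.0))
```

The point of the sketch is the feedback loop: the gripper checks what it feels before every small movement, which is the behavior the article contrasts with "numb" robots that push through contact.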

For item retrieval, Vulcan uses a camera-equipped suction arm that identifies the target object and confirms successful grabs, avoiding the accidental removal of nearby products—a problem engineers refer to as “co-extracting non-target items.”

Vulcan can handle about 75% of the diverse items stored in Amazon’s facilities, operating at speeds comparable to human workers. It can also recognize when an item exceeds its capabilities and call for human assistance, blending autonomy with collaboration.
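The hand-off between robot and human can be pictured as a simple routing check. The Python sketch below is hypothetical: the `Item` fields, the `route` function, and the three thresholds are invented for illustration and do not come from Amazon. It only shows the general pattern of a system declining a task it judges to be outside its limits.

```python
from dataclasses import dataclass
from enum import Enum


class Handler(Enum):
    ROBOT = "robot"
    HUMAN = "human"


@dataclass(frozen=True)
class Item:
    name: str
    weight_kg: float
    longest_side_cm: float
    grasp_confidence: float  # 0 to 1; hypothetical score from a perception model


# Hypothetical limits, chosen only to make the example concrete.
MAX_WEIGHT_KG = 3.0
MAX_SIDE_CM = 30.0      # roughly the one-foot compartment size mentioned above
MIN_CONFIDENCE = 0.8


def route(item: Item) -> Handler:
    """Send the item to the robot only if every check passes; otherwise ask a person."""
    within_limits = (
        item.weight_kg <= MAX_WEIGHT_KG
        and item.longest_side_cm <= MAX_SIDE_CM
        and item.grasp_confidence >= MIN_CONFIDENCE
    )
    return Handler.ROBOT if within_limits else Handler.HUMAN


if __name__ == "__main__":
    for item in (Item("socks", 0.1, 20.0, 0.95), Item("kettlebell", 8.0, 25.0, 0.90)):
        print(item.name, "->", route(item).value)
```

A conservative default (fall back to a human whenever any check fails) is one plausible way to combine autonomy with collaboration.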

“Vulcan works alongside our employees, and the combination is better than either on their own,” said Parness.

Built on Physical AI

What sets Vulcan apart isn’t just its hardware—it’s how it was trained to understand the physical world. Unlike many robots that learn through digital simulations, Vulcan was taught using real-world interactions, incorporating tactile feedback and force data to develop a kind of mechanical intuition.

Training began with thousands of hands-on examples: lifting socks, nudging boxes, handling fragile electronics. Each interaction helped the system learn how different materials respond to pressure, movement, and resistance. This gave Vulcan the ability to make fine adjustments based on what it touches, not just what it sees.

Vulcan’s AI uses a combination of stereo vision and force feedback to:

Recognize the shapes, sizes, and orientations of items

Estimate available space inside storage compartments

Adapt its grip based on the weight, texture, and fragility of an object
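As a rough illustration of the space-estimation step, the Python sketch below reduces a compartment to a single dimension and looks for the widest free gap. This is a deliberately simplified assumption, not a description of Vulcan's actual perception pipeline; the function names, the clearance margin, and the measurements are all hypothetical.

```python
def largest_gap_cm(bin_width_cm, occupied):
    """Return the widest free stretch along the bin's width.

    `occupied` is a list of (start_cm, end_cm) spans already taken by items,
    measured from the left wall. Spans may be unsorted or overlapping.
    """
    gaps = []
    cursor = 0.0  # right edge of everything seen so far
    for start, end in sorted(occupied):
        if start > cursor:
            gaps.append(start - cursor)
        cursor = max(cursor, end)
    if bin_width_cm > cursor:
        gaps.append(bin_width_cm - cursor)
    return max(gaps, default=0.0)


def fits(item_width_cm, bin_width_cm, occupied, clearance_cm=1.0):
    """True if the item plus a small safety clearance fits in the widest gap."""
    return largest_gap_cm(bin_width_cm, occupied) >= item_width_cm + clearance_cm


if __name__ == "__main__":
    spans = [(0.0, 8.0), (20.0, 30.0)]
    print(largest_gap_cm(30.0, spans))
    print(fits(10.0, 30.0, spans))
```

A real system would work in three dimensions and, as described earlier, could also nudge neighboring items aside to widen a gap; this sketch covers only the "is there room?" question.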

Because no simulation can fully replicate the messiness of the physical world, engineers trained Vulcan on live data from actual warehouse environments. That meant capturing subtle moments—like the shift of a slippery package or the resistance of a slightly overstuffed bin—and feeding those moments back into its learning model.

The result is a robot that doesn’t just follow instructions—it responds to its environment, learns from failed attempts, and gradually builds a more refined sense of how to act in the world. Much like a child learning to handle objects through touch, Vulcan becomes more capable with every task it completes.

Scaling the Future of Fulfillment

Vulcan joins Amazon’s broader robotics ecosystem, which already includes more than 750,000 robots deployed across its network. These systems handle everything from transporting carts (Titan, Hercules) to sorting packages (Cardinal, Robin). Together, they play a role in fulfilling roughly 75% of all customer orders.

These robots have also created hundreds of new job categories at Amazon, from robotic floor monitors to on-site reliability maintenance engineers. The company also offers training programs like Career Choice, which help employees transition into roles in robotics and other advanced technical fields.

The introduction of Vulcan builds on that foundation—but goes further by addressing previously unsolved problems, such as stowing in tight spaces or reducing the need for ladders and awkward reaches.

The technology is expected to roll out across Amazon sites in Europe and the U.S. in the coming years. Parness emphasized that Amazon’s goal isn’t to create flashy technology for its own sake, but to solve specific operational challenges.

“Our vision is to scale this technology across our network, enhancing operational efficiency, improving workplace safety, and supporting our employees by reducing physically demanding tasks,” he said.

What This Means

Vulcan reflects a deeper shift in how robots are being designed—not just to automate tasks, but to understand and adapt to physical environments. For Amazon, that means smarter systems that can handle more complex jobs without replacing human workers. For employees, it means safer conditions and the opportunity to grow into new, more technical roles.

This is automation that complements people rather than displacing them. It’s also a sign of where the future of fulfillment is heading: toward intelligent systems that can think, feel, and work in sync with the humans around them.

By teaching a robot to feel, Amazon is reshaping what it means for machines and people to work side by side.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.