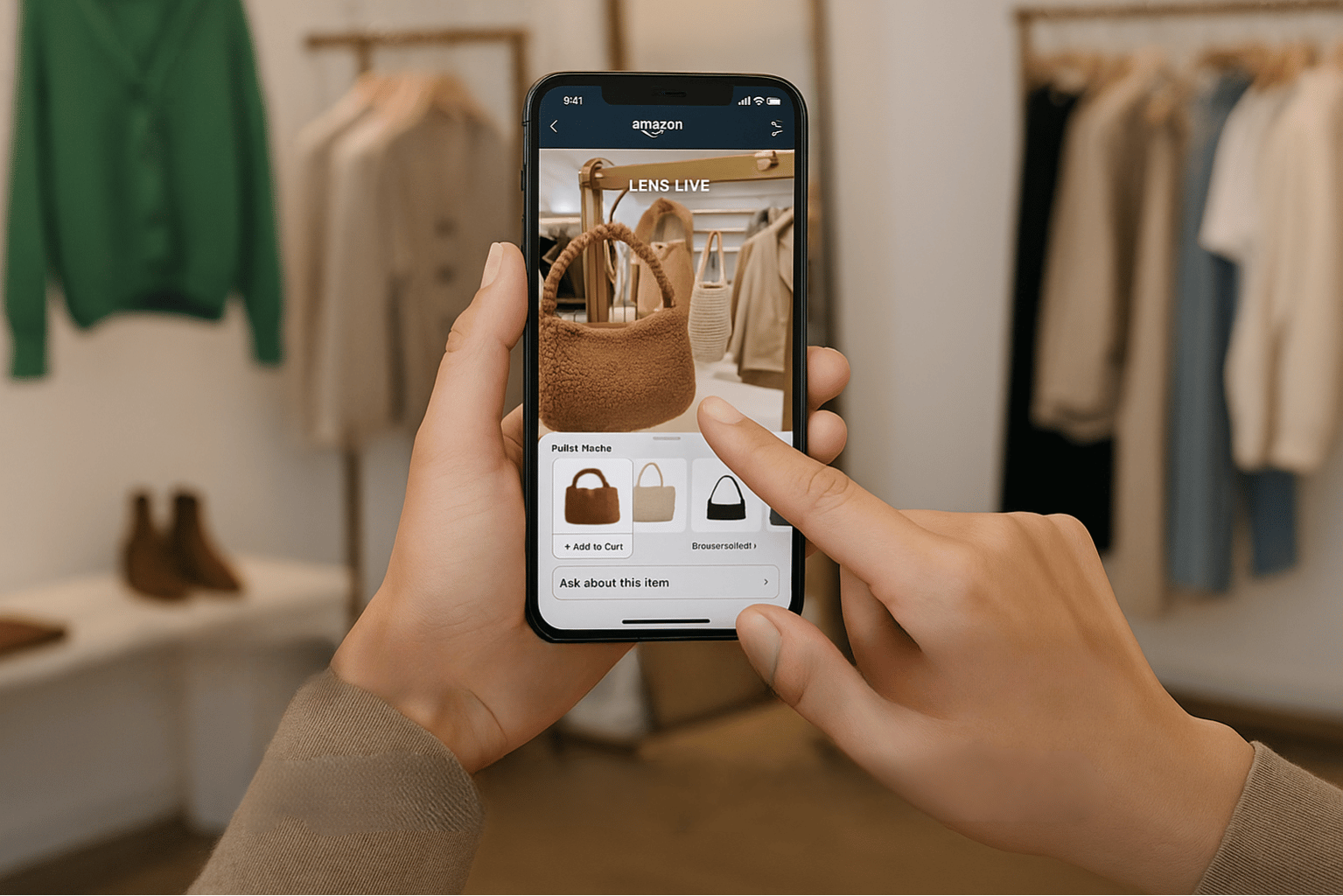

A shopper uses Amazon Lens Live to scan a handbag and instantly view matching product listings in the Amazon Shopping app. Image Source: ChatGPT-5

Amazon Launches Lens Live: AI-Powered Visual Shopping for Real-Time Product Matches

Key Takeaways: Amazon Lens Live AI Shopping Feature

Amazon Lens Live lets users scan real-world objects with their phone camera to find matching products on Amazon.

The feature is rolling out to tens of millions of U.S. iOS users, with broader availability in the coming months.

A swipeable carousel displays matches, with quick actions to add to cart or save to wish lists.

Amazon’s Rufus AI assistant is integrated to provide summaries, suggested questions, and quick answers.

Lens Live runs on Amazon OpenSearch and Amazon SageMaker, AWS-managed services, and uses computer vision and deep learning for real-time detection and matching.

Lens Live: Amazon’s Real-Time AI Shopping Tool

Amazon has announced Lens Live, a new AI-powered feature in the Amazon Shopping app that lets customers shop for products simply by pointing their phone camera at them.

Lens Live is available first on iOS for tens of millions of U.S. customers and will expand more broadly in the coming months. When users open Lens Live, the camera begins scanning automatically and displays matching products in a swipeable carousel at the bottom of the screen for quick comparison. Without leaving the camera view, customers can tap the + icon to add an item to their cart, tap the heart icon to save it to a wish list, or tap the screen to focus on a specific product.

For customers who prefer them, the traditional Amazon Lens features remain available, including photo capture, image uploads, and barcode scanning.

Integration with Rufus: AI Shopping Assistant

Lens Live integrates Rufus, Amazon’s AI shopping assistant, directly into the camera view. Suggested questions and quick product summaries appear under the carousel, helping customers understand key features, compare items, and perform quick research.

This approach builds on existing Amazon Lens features, such as snapping a picture, scanning a barcode, or uploading an image, but aims to make the experience more seamless and immediate.

How It Works: AWS-Powered AI Infrastructure

Behind the scenes, Lens Live uses AWS-managed Amazon OpenSearch and Amazon SageMaker to deploy large-scale machine learning models.

The system employs a lightweight computer vision model running on-device to identify products in real time as customers pan their cameras across a room or focus on specific objects. A deep learning visual embedding model then matches what the camera sees against Amazon’s catalog of billions of products, surfacing exact or similar results instantly.
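In general terms, the matching step described above is a nearest-neighbor search: the camera view is turned into an embedding vector, and the catalog items whose embeddings are most similar are returned. The sketch below illustrates that idea with brute-force cosine similarity over a tiny, made-up catalog. The product IDs, vectors, and function names are hypothetical, and this is not Amazon's implementation, which the company says runs at catalog scale on AWS services.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical catalog: product IDs mapped to embedding vectors.
# Real visual embeddings are learned and high-dimensional; these 3-D vectors are made up.
CATALOG: Dict[str, List[float]] = {
    "tote-bag-black": [0.9, 0.1, 0.2],
    "tote-bag-brown": [0.8, 0.3, 0.1],
    "running-shoe": [0.1, 0.9, 0.3],
    "desk-lamp": [0.2, 0.1, 0.95],
}


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def top_k_matches(
    query: List[float], catalog: Dict[str, List[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Score every catalog item against the query and return the k best (brute force)."""
    scored = [(pid, cosine_similarity(query, vec)) for pid, vec in catalog.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical embedding of the object currently in the camera view.
    query = [0.85, 0.2, 0.15]
    for pid, score in top_k_matches(query, CATALOG, k=2):
        print(f"{pid}: {score:.3f}")
```

Comparing the query against every item works for a toy catalog but not for billions of products; large-scale systems typically rely on approximate nearest-neighbor indexes to keep lookups fast enough for a live camera feed.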

Lens Live also uses the Rufus LLM to provide conversational prompts, product highlights, and Q&A support directly in the shopping flow for better product discovery.

Industry Context: Competing with Google’s Gemini Live

The launch positions Amazon against other AI-powered visual assistants, including Google’s Gemini Live, which also lets users scan their surroundings and ask questions. As The Verge notes, Amazon’s version stands out by placing direct purchasing options front and center, tightly integrating visual search with its marketplace.

By combining visual recognition with an embedded shopping assistant, Amazon is aiming to keep customers within its ecosystem while making product discovery as frictionless as possible.

Q&A: Amazon Lens Live

Q: What is Amazon Lens Live?

A: Lens Live is a new AI-powered feature in the Amazon Shopping app that scans objects in real time and finds matching products.

Q: How does Lens Live work?

A: It uses computer vision on-device to detect products, then matches them against Amazon’s catalog using deep learning models.

Q: What devices and regions support Lens Live?

A: It is available now for tens of millions of iOS users in the U.S., with expansion planned for all U.S. customers soon.

Q: What role does Rufus play in Lens Live?

A: Rufus, Amazon’s AI shopping assistant, provides summaries, suggested questions, and quick answers about scanned products.

Q: How does Lens Live compare to other AI visual tools?

A: Like Google’s Gemini Live, Lens Live uses the phone camera to scan the user’s surroundings, but Amazon’s approach emphasizes direct shopping integration, with add-to-cart and wish list options.

What This Means: AI Shopping Comes to Everyday Scenarios

With Lens Live, Amazon is embedding AI-powered product discovery into the everyday act of pointing a camera at the world. The feature moves visual search from a one-off utility into a continuous, real-time experience, integrating directly with Amazon’s vast marketplace.

By pairing instant recognition with Rufus’s conversational guidance, Amazon is turning its app into an AI-driven shopping companion. For consumers, this means faster paths from inspiration to purchase; for Amazon, it strengthens customer retention against competitors like Google.

The launch reflects a larger shift in AI commerce, where visual intelligence and large language models converge to transform how people browse, compare, and buy products.

Editor’s Note: This article was created by Alicia Shapiro, CMO of AiNews.com, with writing, image, and idea-generation support from ChatGPT, an AI assistant. However, the final perspective and editorial choices are solely Alicia Shapiro’s. Special thanks to ChatGPT for assistance with research and editorial support in crafting this article.